Skepticism Surrounds Feasibility of AGI in Modern AI Models

AGI Skepticism and Modern AI Models

Artificial General Intelligence (AGI) remains a highly debated topic in the AI community, with significant skepticism about both its feasibility and the claims made about modern AI models. The key points from two sources, a paper in Computational Brain & Behavior and a position paper on arXiv, are summarized below.

1. AGI is Far from Inevitable

Researchers at Radboud University and other institutions argue that AGI is not achievable in practice, even under ideal conditions. In their paper, published in Computational Brain & Behavior, they explain that replicating human cognition through AI would demand more computing power and natural resources than are available. Iris van Rooij, lead author of the paper, emphasizes that the hype around AGI is overblown and that chasing this goal is a "fool’s errand."

Olivia Guest, co-author, adds that even with perfect datasets and advanced machine learning methods, AGI cannot replicate the seamless cognitive abilities of the human brain. For example, humans can recall information from years ago effortlessly, a capability that AI cannot match due to computational constraints.

2. Unscientific AGI Performance Claims

A position paper on arXiv calls for the academic community to stop making unscientific claims about AGI. The authors argue that the current AI culture, driven by financial incentives and hype, leads to overinterpretation of model capabilities. They highlight that finding meaningful patterns in the latent spaces of models does not constitute evidence of AGI. For instance, large language models (LLMs) such as those behind ChatGPT may appear intelligent, but their performance is often based on memorization rather than true understanding.

The paper also warns against anthropomorphizing AI systems, as humans are prone to attributing human-like qualities to non-human entities. This tendency, combined with the pressure to publish groundbreaking results, creates a "perfect storm" for inflated AGI claims.

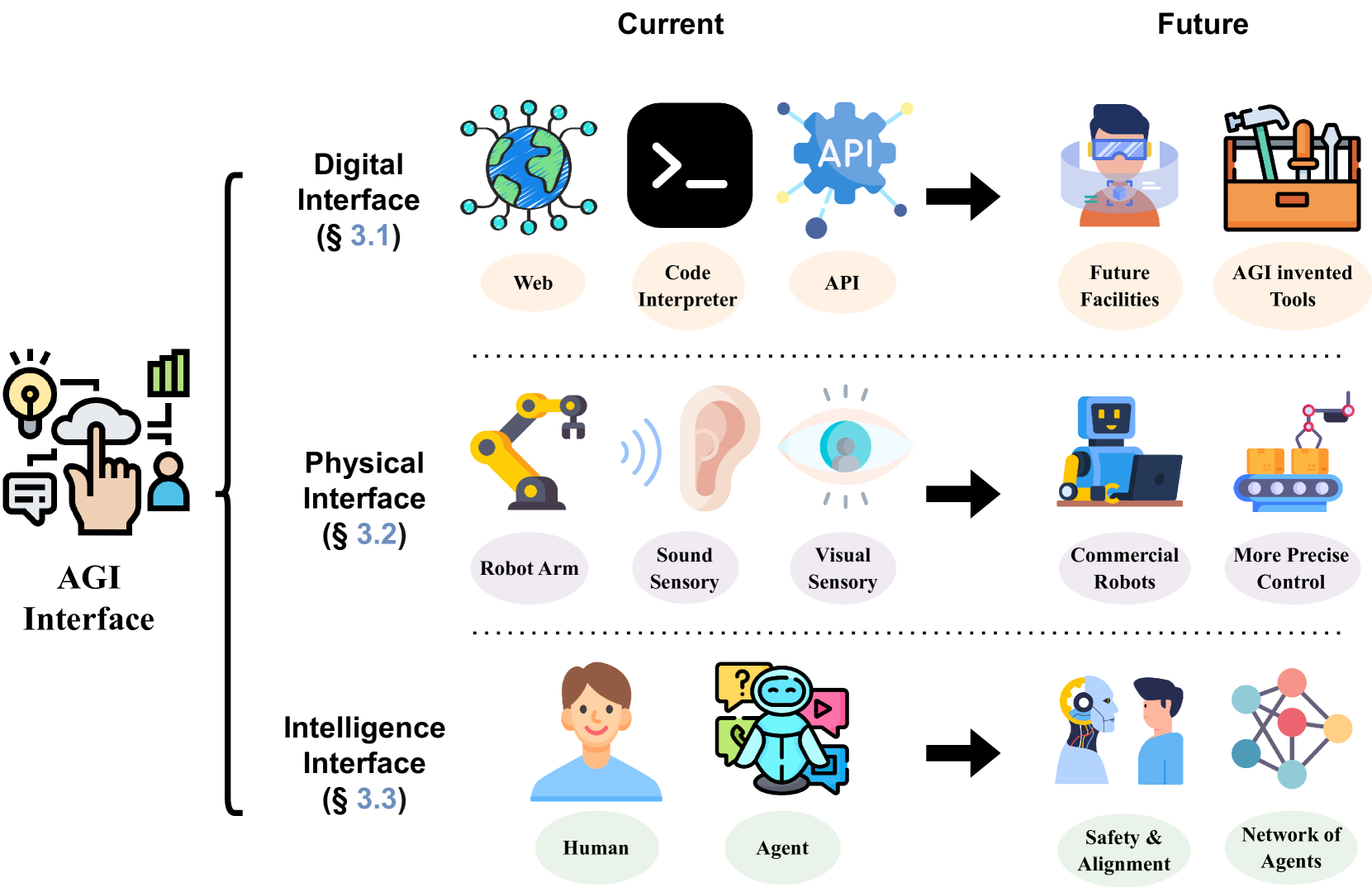

3. The Gap Between Narrow AI and AGI

Modern AI models, such as those developed by OpenAI and Google DeepMind, excel at specific tasks (narrow AI) but fall short of achieving AGI. For example, AlphaGeometry, a model by DeepMind, can solve complex geometry problems but does not demonstrate general intelligence. The authors of the arXiv paper argue that such models are powerful tools but are far from AGI, as they lack the ability to generalize across diverse tasks without human intervention.

4. The Need for Critical AI Literacy

Both sources emphasize the importance of critical AI literacy to counter the hype surrounding AGI. Iris van Rooij and her colleagues advocate for a better understanding of AI systems to help the public evaluate the claims made by tech companies. Similarly, the arXiv paper calls for researchers to exercise caution and adhere to principles of academic integrity when interpreting and communicating AI research outcomes.

Conclusion

While modern AI models have made significant advances, skepticism about AGI remains strong. The researchers cited here argue that AGI is not achievable with current technology and that claims made by big tech companies are often exaggerated. Critical AI literacy and rigorous scientific scrutiny are essential to separate hype from reality in the field of AI.