Apache Airflow 3.0 Enhances Event-Driven Data Orchestration

Apache Airflow 3.0 extends the event-driven orchestration capabilities introduced in earlier versions. An event-driven workflow runs in response to a specific event or trigger rather than on a fixed schedule, which enables near-real-time data processing and automation. The sections below give an overview of how Airflow 3.0 supports event-driven data orchestration.

Key Features of Event-Driven Orchestration in Airflow 3.0

- Dynamic DAGs: Airflow 3.0 allows Directed Acyclic Graphs (DAGs) to be generated dynamically and their runs to be started by external events, so workflows can adapt to data changes as they occur.

- Event Triggers: Airflow 3.0 supports event triggers that can initiate workflows based on specific conditions or events, such as the arrival of new data in a Kafka topic or a change in a database.

- Integration with Kafka: Airflow 3.0 integrates with Apache Kafka, so workflows can consume event streams and be triggered by the events they carry. This is particularly useful for real-time data processing and event-driven architectures.

- Custom Operators and Sensors: Airflow 3.0 provides a wide range of operators and sensors for interacting with external systems and technologies, and it lets you write custom ones when the built-in set does not fit, enabling complex event-driven workflows.

- Monitoring and Logging: Airflow 3.0 offers advanced monitoring and logging capabilities, allowing users to track the progress of event-driven workflows, diagnose issues, and optimize performance.
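
To make the "Event Triggers" item above more concrete, here is a minimal sketch in plain Python. It is not Airflow's own API: it only models a trigger as a condition evaluated against an incoming event, where a match means the event should start a workflow. The names `EventTrigger` and `should_fire`, the event fields `topic` and `type`, and the DAG id are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

Event = Dict[str, Any]


@dataclass(frozen=True)
class EventTrigger:
    """Pairs a condition on an event with the DAG it should start (hypothetical helper)."""

    dag_id: str
    condition: Callable[[Event], bool]

    def should_fire(self, event: Event) -> bool:
        # A malformed event must never start a workflow, so lookup errors count as "no match".
        try:
            return bool(self.condition(event))
        except (KeyError, TypeError):
            return False


# Assumed example: start the "process_orders" DAG for new-order events on the "orders" topic.
new_order_trigger = EventTrigger(
    dag_id="process_orders",
    condition=lambda e: e["topic"] == "orders" and e["type"] == "order_created",
)
```

Keeping the condition separate from the DAG id means the same matching logic can be unit-tested without a running scheduler or message broker.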

Architecture of Event-Driven Workflows in Airflow 3.0

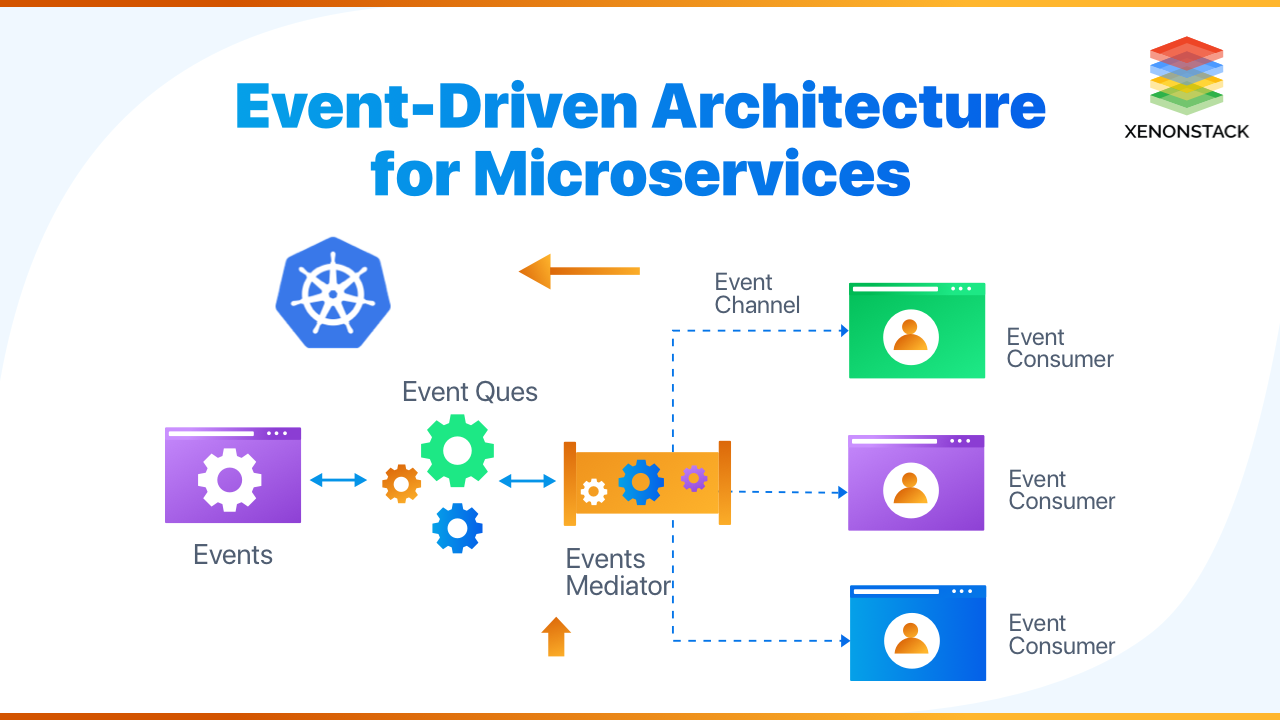

In a typical event-driven architecture using Airflow 3.0, events are produced to a Kafka topic by an external system or user. A Kafka consumer (dispatcher) reads these events and triggers the appropriate DAG in Airflow. The DAG is parameterized to process one event at a time, providing granular observability and control. This architecture is particularly suited for low-concurrency scenarios where detailed execution-level observability is required.
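
The dispatcher step described above can be sketched roughly as follows. This is an outline under stated assumptions, not a definitive implementation: `messages` is any iterable of JSON-encoded bytes standing in for a Kafka consumer, and `trigger_dag` is a hypothetical callback that, in a real deployment, would ask Airflow to create a DAG run. The routing table, the event fields (`type`, `payload`), and the DAG id are illustrative only.

```python
import json
from typing import Any, Callable, Dict, Iterable, List

# Hypothetical routing table: event type -> id of the DAG that handles it.
ROUTES: Dict[str, str] = {"order_created": "send_order_email"}


def dispatch(
    messages: Iterable[bytes],
    trigger_dag: Callable[[str, Dict[str, Any]], None],
) -> List[str]:
    """Trigger one DAG run per routable event; return the reasons for skipped messages."""
    skipped: List[str] = []
    for raw in messages:
        try:
            event = json.loads(raw)
        except ValueError:
            # Covers both malformed JSON and bytes that are not valid UTF-8.
            skipped.append("invalid JSON")
            continue
        if not isinstance(event, dict):
            skipped.append("not a JSON object")
            continue
        event_type = event.get("type")
        dag_id = ROUTES.get(event_type) if isinstance(event_type, str) else None
        if dag_id is None:
            skipped.append(f"no route for type {event_type!r}")
            continue
        # One event per DAG run: the payload becomes that run's parameters.
        trigger_dag(dag_id, event.get("payload", {}))
    return skipped
```

Because each event maps to exactly one call to `trigger_dag`, every event gets its own DAG run, which is what gives the per-event observability mentioned above. Passing the trigger function in as a parameter also lets the routing logic be tested with a simple list-appending stub.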

Example Use Case: Sending Email Alerts

Consider an example where an event-driven workflow is used to send email alerts. When a specific event (e.g., a new order) is detected, a command is sent to a Kafka topic. A Kafka consumer reads this command and triggers an Airflow DAG that sends an email alert. The DAG is defined with an EmailOperator, and the recipient, subject, and body of the email are passed as parameters from the event.
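
As a rough illustration of the parameter hand-off in this use case, the sketch below turns an incoming event into the values that would be passed to the email DAG. The field names (`recipient`, `subject`, `body`) follow the description above; the function name `build_email_conf` and the validation rules are assumptions made for this example. How the EmailOperator reads these values inside the DAG is not shown here.

```python
from typing import Any, Dict

REQUIRED_FIELDS = ("recipient", "subject", "body")


def build_email_conf(event: Dict[str, Any]) -> Dict[str, str]:
    """Validate an alert event and return the parameters to pass to the email DAG."""
    missing = [name for name in REQUIRED_FIELDS if not str(event.get(name) or "").strip()]
    if missing:
        raise ValueError(f"event is missing required fields: {', '.join(missing)}")
    recipient = str(event["recipient"]).strip()
    # Deliberately loose check; real address validation is out of scope for this sketch.
    if "@" not in recipient:
        raise ValueError(f"recipient does not look like an email address: {recipient!r}")
    return {
        "recipient": recipient,
        "subject": str(event["subject"]).strip(),
        "body": str(event["body"]),
    }
```

Validating the event before triggering the DAG keeps obviously bad messages from producing failed DAG runs, at the cost of putting some business rules in the consumer.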

Conclusion

Apache Airflow 3.0 provides powerful tools for event-driven data orchestration, enabling real-time data processing and automation. By integrating with technologies like Apache Kafka and offering dynamic DAGs, event triggers, and custom operators, Airflow 3.0 is well-suited for building complex, event-driven workflows in modern data architectures.