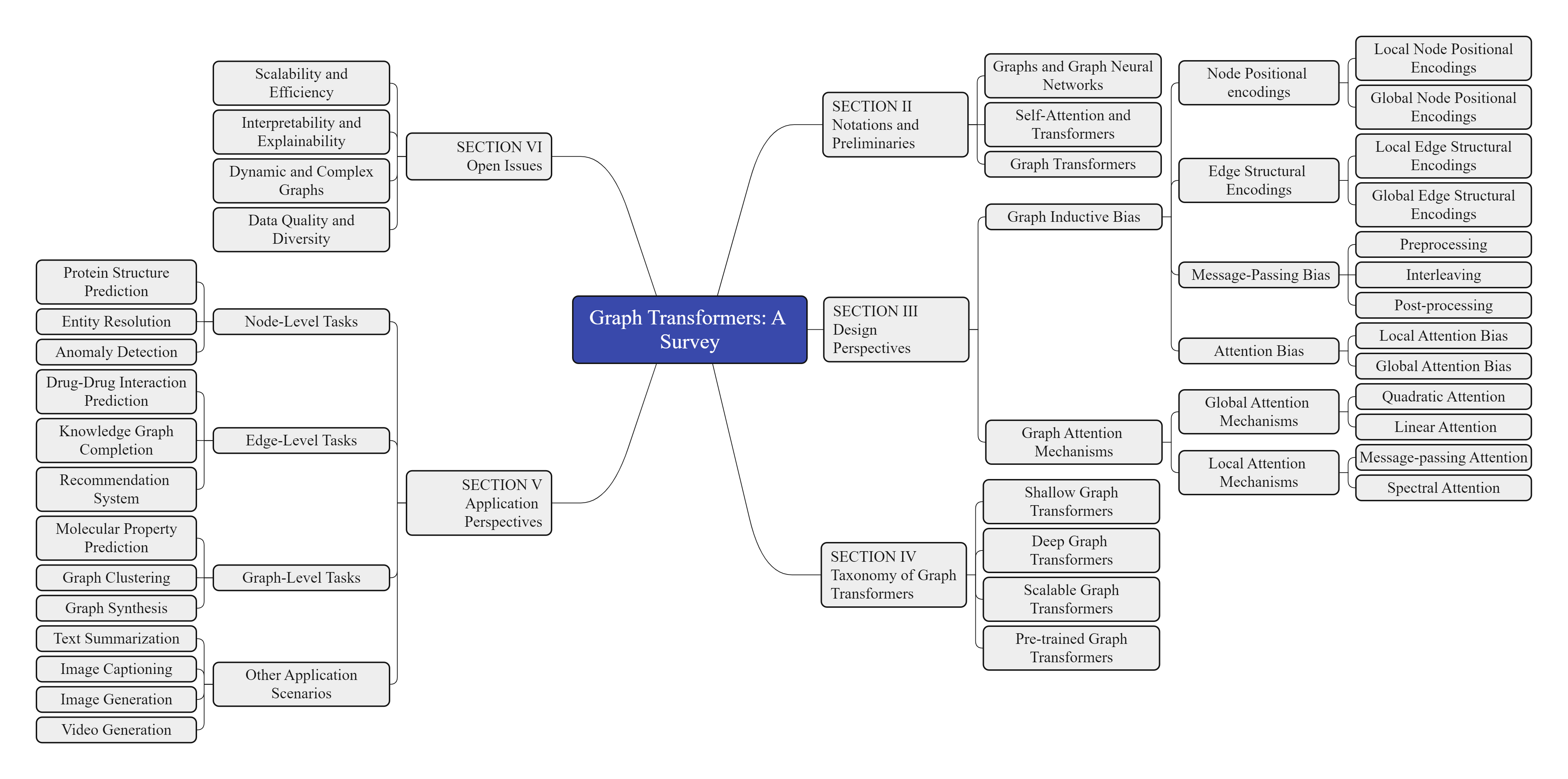

Graph Transformers: Revolutionizing Graph-Structured Data Processing

Graph Transformers: An Overview

Graph Transformers (GTs) are a recent class of neural network models designed to handle graph-structured data. They adapt transformers, which have revolutionized natural language processing (NLP) and computer vision (CV), to graphs, and often combine them with the message-passing layers of Graph Neural Networks (GNNs). This lets a single model capture both local neighborhood structure and long-range interactions between nodes.

Key Concepts

- Graphs: Graphs are data structures consisting of nodes (vertices) and edges (links) that connect pairs of nodes. They are widely used to represent complex data in domains like social networks, biology, chemistry, and transportation systems.

- Transformers: Transformers are neural network models that use self-attention mechanisms to process input sequences. They excel at capturing long-range dependencies and global context, making them highly effective in NLP and CV tasks.

- Graph Transformers: Graph Transformers integrate the self-attention mechanism of transformers with graph-specific inductive biases, such as structural encodings or attention restricted to neighbors, so that attention reflects how the graph is connected. Many variants use edge features as well as node features, which makes them versatile across graph-related tasks.
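To make these terms concrete, here is a minimal sketch of how a small graph with node and edge features might be stored in Python. The `Graph` class and its field names are hypothetical and chosen for illustration only; libraries such as PyTorch Geometric use their own tensor-based formats.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Graph:
    """A small undirected graph with per-node and (optional) per-edge feature vectors."""
    node_features: list[list[float]]                # node_features[i] is the vector for node i
    edges: list[tuple[int, int]]                    # each (u, v) pair is one undirected edge
    edge_features: list[list[float]] = field(default_factory=list)  # aligned with `edges`

    def __post_init__(self) -> None:
        # Edge features are optional, but if present there must be one vector per edge.
        if self.edge_features and len(self.edge_features) != len(self.edges):
            raise ValueError("edge_features must have one entry per edge")

    @property
    def num_nodes(self) -> int:
        return len(self.node_features)

    def adjacency_list(self) -> dict[int, list[int]]:
        """Map each node index to the sorted list of its neighbors."""
        adj: dict[int, list[int]] = {i: [] for i in range(self.num_nodes)}
        for u, v in self.edges:
            adj[u].append(v)
            adj[v].append(u)
        return {i: sorted(neighbors) for i, neighbors in adj.items()}


# A path graph 0-1-2-3 with 2-dimensional node features and 1-dimensional edge weights.
g = Graph(
    node_features=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]],
    edges=[(0, 1), (1, 2), (2, 3)],
    edge_features=[[1.0], [0.5], [2.0]],
)
print(g.adjacency_list())  # {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
```

An adjacency list keeps memory proportional to the number of edges, which matters for the sparse graphs common in practice.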

How Graph Transformers Work

Graph Transformers leverage the self-attention mechanism to process graph data. Here’s a simplified breakdown of their operation:

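- Self-Attention: The model computes attention scores between pairs of nodes from their feature vectors, often adjusted by structural information such as edge features or the distance between nodes in the graph. Each node then updates its representation as an attention-weighted combination of the nodes it attends to, which lets the model focus on the most relevant parts of the graph, including distant ones.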

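- Message Passing: Information is propagated across the graph by aggregating features from neighboring nodes, as in standard GNNs. Some architectures run message-passing layers alongside global attention; others restrict the attention itself to each node's neighbors.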

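- Graph Inductive Bias: Plain self-attention treats its inputs as an unordered set and ignores edges, so Graph Transformers inject prior knowledge about graph structure, for example positional encodings derived from the graph Laplacian, attention biases based on shortest-path distances, or edge weights and features.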
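The three mechanisms above can be illustrated with a toy, single-head attention layer. This is a sketch under simplifying assumptions, not any particular published architecture: node features are used directly as queries, keys and values (a trained layer would apply learned projections first), `local_only=True` restricts each node to itself and its neighbors (a form of message passing, similar in spirit to graph attention networks), and the hypothetical `distance_penalty` parameter subtracts a fixed multiple of the hop distance from each score as a stand-in for the learned shortest-path biases used by models such as Graphormer.

```python
from __future__ import annotations

import math
from collections import deque


def hop_distances(adj: dict[int, list[int]], n: int) -> list[list[float]]:
    """All-pairs shortest-path lengths in hops, via BFS from every node (math.inf if unreachable)."""
    dist = [[math.inf] * n for _ in range(n)]
    for source in range(n):
        dist[source][source] = 0
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if dist[source][v] == math.inf:
                    dist[source][v] = dist[source][u] + 1
                    queue.append(v)
    return dist


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax; a score of -inf receives weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def graph_attention(x, adj, local_only=False, distance_penalty=0.0):
    """One head of scaled dot-product self-attention over the nodes of a graph.

    x: node feature vectors, used directly as queries, keys and values.
    adj: adjacency list mapping each node index to its neighbors.
    local_only: if True, node i attends only to itself and its direct neighbors.
    distance_penalty: hypothetical non-negative scalar b; subtracts b * hop_distance
        from each score, so unreachable pairs get a score of -inf (masked).
    """
    n, d = len(x), len(x[0])
    dist = hop_distances(adj, n)
    out = []
    for i in range(n):
        scores = []
        for j in range(n):
            if local_only and dist[i][j] > 1:
                scores.append(-math.inf)  # mask: node j is not a neighbor of node i
                continue
            s = sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d)
            if distance_penalty > 0:
                s -= distance_penalty * dist[i][j]  # structural bias: farther nodes score lower
            scores.append(s)
        weights = softmax(scores)
        # New representation of node i: attention-weighted average of the node features.
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(d)])
    return out


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}  # path graph 0-1-2-3
global_out = graph_attention(x, adj)                        # every node sees every node
local_out = graph_attention(x, adj, local_only=True)        # neighbors only
biased_out = graph_attention(x, adj, distance_penalty=1.0)  # global, with a distance bias
```

With `local_only=True`, changing the features of node 3 cannot affect node 0's output in a single layer, while full attention lets node 0 see node 3 directly. The distance penalty sits between the two extremes: distant nodes remain visible but are down-weighted.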

Applications of Graph Transformers

Graph Transformers have been successfully applied to a wide range of tasks, including:

- Node-Level Tasks: Predicting properties of individual nodes, for example through node classification or node regression.

- Edge-Level Tasks: Predicting relationships or interactions between nodes, such as link prediction.

- Graph-Level Tasks: Predicting properties of entire graphs, such as graph classification or regression (e.g. molecular property prediction), as well as generating new graphs.
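The three task levels differ mainly in how node embeddings are turned into predictions. The sketch below assumes the Graph Transformer has already produced one embedding vector per node (the list `h`, with made-up values) and shows three common, deliberately simple output heads: a linear score per node, a dot-product link score for a node pair, and a mean-pooling readout for the whole graph. The function names and the weight vector are hypothetical; real models learn these heads and may use other readouts, such as sum pooling or a dedicated graph-level token.

```python
from __future__ import annotations

import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a: list[float], b: list[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


def node_level(h: list[list[float]], w: list[float]) -> list[float]:
    """Node-level head: one score per node from a linear map (w stands in for learned weights)."""
    return [dot(h_i, w) for h_i in h]


def edge_level(h: list[list[float]], u: int, v: int) -> float:
    """Edge-level head: link probability for (u, v) from the dot product of their embeddings."""
    return sigmoid(dot(h[u], h[v]))


def graph_level(h: list[list[float]]) -> list[float]:
    """Graph-level readout: mean-pool all node embeddings into one vector for the whole graph."""
    n = len(h)
    return [sum(column) / n for column in zip(*h)]


# h stands in for the node embeddings produced by a stack of Graph Transformer layers.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(node_level(h, [0.5, -0.5]))  # [0.5, -0.5, 0.0]
print(edge_level(h, 0, 1))         # 0.5
print(graph_level(h))              # one pooled vector, fed to a graph classifier or regressor
```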

Challenges and Future Directions

Despite their success, Graph Transformers face several challenges, including:

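- Scalability: Full self-attention compares every pair of nodes, so its time and memory cost grows quadratically with the number of nodes. Processing large-scale graphs efficiently therefore remains a significant challenge, and approaches such as sparse attention, linearized attention, and subgraph sampling are being explored to reduce this cost.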

- Generalization: Ensuring that models generalize well to unseen graphs and tasks.

- Interpretability: Making the decision-making process of Graph Transformers more transparent and understandable.
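A back-of-the-envelope calculation shows why the quadratic cost matters. This sketch counts only the attention score matrix for one head in one layer, stored as 32-bit floats, and ignores activations, gradients, and framework overhead; the graph size and average degree are illustrative assumptions, not measurements.

```python
def dense_attention_bytes(num_nodes: int, num_heads: int = 1, bytes_per_score: int = 4) -> int:
    """Bytes for the N x N score matrices of one full-attention layer (float32 = 4 bytes)."""
    return num_nodes * num_nodes * num_heads * bytes_per_score


def sparse_attention_bytes(num_directed_edges: int, num_nodes: int,
                           num_heads: int = 1, bytes_per_score: int = 4) -> int:
    """Bytes if scores are kept only for existing edges plus one self-loop per node."""
    return (num_directed_edges + num_nodes) * num_heads * bytes_per_score


# Illustrative graph: 100,000 nodes with an average degree of 10, i.e. 1,000,000
# directed edge entries (each undirected edge counted once in each direction).
n, e = 100_000, 1_000_000
print(f"dense:  {dense_attention_bytes(n) / 1e9:.1f} GB")      # dense:  40.0 GB
print(f"sparse: {sparse_attention_bytes(e, n) / 1e6:.1f} MB")  # sparse: 4.4 MB
```

Restricting attention to edges makes the cost grow with the number of edges rather than the square of the number of nodes, at the price of losing direct long-range interactions in a single layer.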

For more detailed insights, see the paper "Understanding Graph Transformers by Generalized Propagation" or the survey "Graph Transformers: A Survey" on arXiv.