Logic-LM: Enhancing Logical Reasoning in Large Language Models with Symbolic Solvers


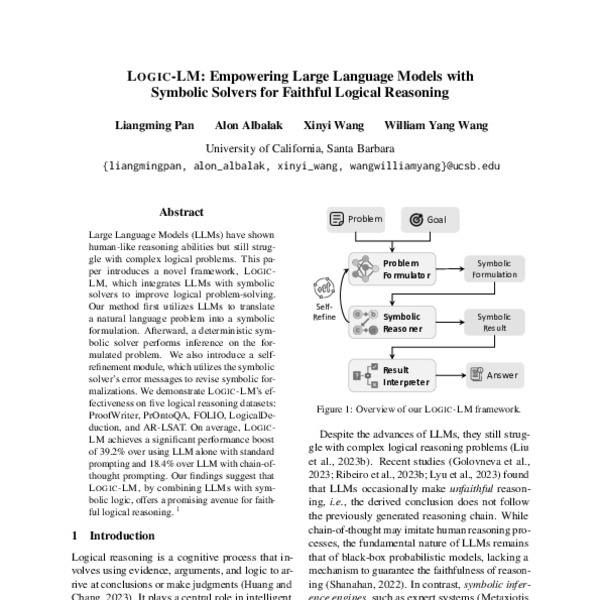

Logic-LM is a framework that improves the logical reasoning of Large Language Models (LLMs) by integrating them with symbolic solvers. LLMs show human-like reasoning abilities but still struggle with complex logical problems, and Logic-LM is designed to close that gap.

The Logic-LM framework operates in two main steps:

- Translation: The LLM translates a natural language problem into a symbolic formulation.

- Inference: A deterministic symbolic solver performs inference on the formulated problem.
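The two steps above can be sketched in Python as follows. This is a toy illustration, not the authors' implementation, and it rests on several assumptions:

- The "LLM" is any hypothetical callable `llm(problem) -> str`.
- The `Fact:`/`Rule:`/`Query:` text format is invented for this example.
- `parse_program`, `solve`, and `logic_lm` are illustrative names.
- The solver is a small forward-chaining engine over unary Horn rules under a closed-world assumption. It stands in for a real symbolic solver.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical symbolic format (not the paper's exact syntax):
#   Fact: Cold(Bob)
#   Rule: Cold($x) && Big($x) >>> Round($x)
#   Query: Round(Bob)
ATOM = re.compile(r"(\w+)\((\w+|\$x)\)")

@dataclass
class LogicProgram:
    facts: set[tuple[str, str]]         # ground atoms as (predicate, entity)
    rules: list[tuple[list[str], str]]  # (premise predicates, conclusion predicate) over $x
    query: tuple[str, str]

def parse_atom(text: str) -> tuple[str, str]:
    m = ATOM.fullmatch(text.strip())
    if m is None:
        raise ValueError(f"cannot parse atom: {text.strip()!r}")
    return m.group(1), m.group(2)

def parse_program(text: str) -> LogicProgram:
    facts, rules, query = set(), [], None
    for line in text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        kind, _, body = line.partition(":")
        kind = kind.strip()
        if kind == "Fact":
            facts.add(parse_atom(body))
        elif kind == "Rule":
            lhs, sep, rhs = body.partition(">>>")
            if not sep:
                raise ValueError(f"rule is missing '>>>': {line.strip()!r}")
            premises = [parse_atom(a)[0] for a in lhs.split("&&")]
            rules.append((premises, parse_atom(rhs)[0]))
        elif kind == "Query":
            query = parse_atom(body)
        else:
            raise ValueError(f"unknown statement: {line.strip()!r}")
    if query is None:
        raise ValueError("program has no Query")
    return LogicProgram(facts, rules, query)

def solve(program: LogicProgram) -> bool:
    """Toy deterministic solver: forward chaining to a fixed point (closed world)."""
    known = set(program.facts)
    entities = {entity for _, entity in known}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in program.rules:
            for entity in entities:
                if (conclusion, entity) not in known and all((p, entity) in known for p in premises):
                    known.add((conclusion, entity))
                    changed = True
    return program.query in known

def logic_lm(problem: str, llm: Callable[[str], str]) -> bool:
    symbolic = llm(problem)                # Step 1: translation by the (hypothetical) LLM
    return solve(parse_program(symbolic))  # Step 2: deterministic symbolic inference

# Usage with a stubbed "LLM" that returns a fixed formalization.
problem = "Bob is cold. Bob is big. Cold, big things are round. Is Bob round?"
fake_llm = lambda _: """
Fact: Cold(Bob)
Fact: Big(Bob)
Rule: Cold($x) && Big($x) >>> Round($x)
Query: Round(Bob)
"""
print(logic_lm(problem, fake_llm))  # True
```

The split keeps the LLM responsible only for producing text in a formal language. Once a parsable program exists, the answer is fixed by the deterministic solver.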

Logic-LM also includes a self-refinement module. When the symbolic solver rejects a formalization, its error message is used to revise the symbolic formalization. Repeating this step makes the formalizations more likely to execute and improves the accuracy and reliability of the final reasoning.
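The self-refinement loop can be sketched as below, under stated assumptions:

- The translator has a hypothetical signature `translate(problem, previous_program, error)`.
- The solver signals a bad program by raising `ValueError`.
- `max_rounds`, `toy_solve`, and `fake_translate` are illustrative names, not from the paper.

The paper's actual prompts and stopping rule may differ.

```python
import re
from typing import Callable, Optional

# Hypothetical interfaces:
# (problem, previous program or None, solver error or None) -> new program text
Translator = Callable[[str, Optional[str], Optional[str]], str]
# Returns an answer, or raises ValueError whose message describes what went wrong
Solver = Callable[[str], bool]

def solve_with_refinement(problem: str, translate: Translator, solve: Solver,
                          max_rounds: int = 3) -> Optional[bool]:
    program = translate(problem, None, None)
    for attempt in range(max_rounds + 1):
        try:
            return solve(program)
        except ValueError as err:
            if attempt == max_rounds:
                break
            # Self-refinement: give the translator its previous program plus the solver's error.
            program = translate(problem, program, str(err))
    return None  # no executable formalization within the refinement budget

# --- Toy stand-ins for the demo (hypothetical) ---
ATOM = re.compile(r"\w+\(\w+\)")

def toy_solve(program: str) -> bool:
    """Program format: 'Atom(a), Atom(b) ? Query(a)'; answers by fact lookup only."""
    facts_part, sep, query = program.partition("?")
    if not sep:
        raise ValueError("syntax error: missing '?' before the query")
    facts = {a.strip() for a in facts_part.split(",") if a.strip()}
    query = query.strip()
    for atom in facts | {query}:
        if not ATOM.fullmatch(atom):
            raise ValueError(f"syntax error: malformed atom {atom!r}")
    return query in facts

def fake_translate(problem: str, previous: Optional[str], error: Optional[str]) -> str:
    if error is None:
        return "Cold(Bob), Round(Bob) Round(Bob)"  # first draft: the '?' is missing
    return "Cold(Bob), Round(Bob) ? Round(Bob)"    # revised after seeing the error message

print(solve_with_refinement("Bob is cold and round. Is Bob round?", fake_translate, toy_solve))  # True
```

Returning `None` after the budget is spent is one possible choice. A real system might instead fall back to another answering strategy.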

Logic-LM was evaluated on five logical reasoning datasets: ProofWriter, PrOntoQA, FOLIO, LogicalDeduction, and AR-LSAT. The results show significant performance improvements:

- An average improvement of 39.2% over using the LLM alone with standard prompting.

- An average improvement of 18.4% over the LLM with chain-of-thought prompting.

These findings suggest that combining LLMs with symbolic solvers is a promising route to more faithful and accurate logical reasoning. For details, refer to the original paper.