Implementing Neural Network Backpropagation in Python

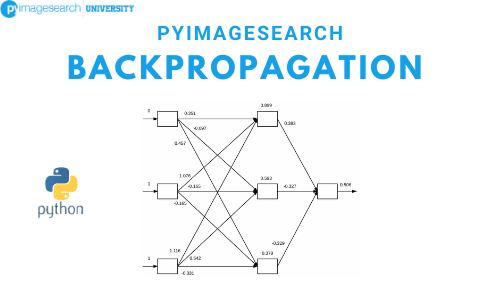

Backpropagation is the core algorithm for training neural networks. It applies the chain rule backward from the output layer to work out how much each weight contributed to the error between the predicted and actual outputs, and gradient descent then adjusts the weights to reduce that error.

Example: Implementing Backpropagation from Scratch

Here is a step-by-step guide to implementing a simple feedforward neural network with backpropagation using Python and NumPy:

1. Initialize the Network

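import numpy as np

class NeuralNetwork:
    def __init__(self, layers, alpha=0.1):
        # layers: number of units per layer, e.g. [2, 2, 1]; alpha: learning rate
        self.W = []
        self.layers = layers
        self.alpha = alpha
        # Weights between every pair of layers except the last pair. The extra
        # row and column (+1) fold the bias into the weight matrix.
        for i in range(len(layers) - 2):
            w = np.random.randn(layers[i] + 1, layers[i + 1] + 1)
            # Divide by sqrt(fan-in) to keep the initial activations moderate
            self.W.append(w / np.sqrt(layers[i]))
        # Last pair: the input side keeps its bias row, the output has no bias column
        w = np.random.randn(layers[-2] + 1, layers[-1])
        self.W.append(w / np.sqrt(layers[-2]))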
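
To see what the constructor builds, the sketch below repeats the same shape rule as a standalone function and prints the resulting matrix shapes. The function name `init_weights`, the seeded generator, and the 2-2-1 architecture are assumptions made for illustration; they are not part of the class above.

```python
import numpy as np

def init_weights(layers, seed=0):
    """Build weight matrices with the same shape rule as the constructor above."""
    rng = np.random.default_rng(seed)  # seeded only so the sketch is repeatable
    W = []
    # All connections except the last: +1 row for the incoming bias entry,
    # +1 column so the next layer also has an extra entry.
    for i in range(len(layers) - 2):
        w = rng.standard_normal((layers[i] + 1, layers[i + 1] + 1))
        W.append(w / np.sqrt(layers[i]))
    # Last connection: bias row on the input side, no extra output column.
    w = rng.standard_normal((layers[-2] + 1, layers[-1]))
    W.append(w / np.sqrt(layers[-2]))
    return W

shapes = [w.shape for w in init_weights([2, 2, 1])]
print(shapes)  # [(3, 3), (3, 1)]
```

A 2-2-1 network therefore stores a 3x3 matrix and a 3x1 matrix: each input-side dimension is one larger than the layer size because of the bias entry.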

2. Define the Activation Function

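The network uses the sigmoid function as its activation. Note that sigmoid_deriv expects a value that has already been passed through sigmoid (a layer's activation), not the raw weighted sum:

    def sigmoid(self, x):
        return 1.0 / (1 + np.exp(-x))

    def sigmoid_deriv(self, x):
        # x is assumed to be a sigmoid output already: s'(z) = s(z) * (1 - s(z))
        return x * (1 - x)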
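
As a quick sanity check on that convention, the following sketch compares the derivative formula against a central finite difference. It uses standalone functions rather than the class methods, and the step size and test points are arbitrary choices.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))

def sigmoid_deriv(a):
    # `a` must already be a sigmoid output, i.e. a = sigmoid(x)
    return a * (1 - a)

x = np.linspace(-3, 3, 7)
h = 1e-5
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)  # central difference
analytic = sigmoid_deriv(sigmoid(x))                   # pass the activation, not x

max_gap = np.max(np.abs(numeric - analytic))
print(max_gap < 1e-8)  # True
```

Passing the raw `x` to `sigmoid_deriv` instead of `sigmoid(x)` would silently return the wrong values, which is why the backward pass later feeds it the stored activations.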

3. Forward Propagation

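Forward propagation passes the input through the network one layer at a time and stores every layer's activations, which the backward pass needs later:

    def fit_partial(self, x, y):
        # A[0] is the input as a row vector; A[k] holds the activations of layer k
        A = [np.atleast_2d(x)]
        for layer in range(len(self.W)):
            net = A[layer].dot(self.W[layer])  # weighted sum for this layer
            out = self.sigmoid(net)
            A.append(out)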
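
The shapes involved are easiest to follow on a concrete input. This sketch runs the same loop outside the class on a hypothetical 2-2-1 network with random weights; the weight values and the sample input are made up for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))

rng = np.random.default_rng(0)
# Hypothetical 2-2-1 network with the shapes the constructor would produce.
W = [rng.standard_normal((3, 3)), rng.standard_normal((3, 1))]

x = np.array([0.0, 1.0, 1.0])  # two features plus the trailing bias entry of 1
A = [np.atleast_2d(x)]         # A[0] is the input as a 1 x 3 row vector
for w in W:
    A.append(sigmoid(A[-1].dot(w)))

print([a.shape for a in A])  # [(1, 3), (1, 3), (1, 1)]
```

The list `A` ends up with one more entry than there are weight matrices: the input itself plus one activation per layer.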

4. Backward Propagation

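Backward propagation computes the output error, carries it back through the layers as a list of deltas, and then applies a gradient descent update to every weight matrix. The code below continues the body of fit_partial:

        # Output-layer delta: error times the sigmoid derivative
        error = A[-1] - y
        D = [error * self.sigmoid_deriv(A[-1])]
        # Walk back through the hidden layers using the chain rule
        for layer in range(len(A) - 2, 0, -1):
            delta = D[-1].dot(self.W[layer].T)
            delta = delta * self.sigmoid_deriv(A[layer])
            D.append(delta)
        # Deltas were collected last-to-first; reverse them to line up with self.W
        D = D[::-1]
        # Gradient descent step on each weight matrix
        for layer in range(len(self.W)):
            self.W[layer] += -self.alpha * A[layer].T.dot(D[layer])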
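
One way to gain confidence in a backward pass like this is a gradient check: compute the gradients with the delta rule, then compare them against finite differences of the loss. The sketch below does that with standalone functions, assuming the loss is half the sum of squared errors (the loss whose output gradient is `A[-1] - y`). The helper names, weights, and sample are illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))

def forward(W, x):
    A = [np.atleast_2d(x)]
    for w in W:
        A.append(sigmoid(A[-1].dot(w)))
    return A

def loss(W, x, y):
    # Assumed loss: half the sum of squared errors
    return 0.5 * np.sum((forward(W, x)[-1] - y) ** 2)

def backprop_grads(W, x, y):
    """Return dLoss/dW for every weight matrix using the delta rule."""
    A = forward(W, x)
    D = [(A[-1] - y) * A[-1] * (1 - A[-1])]
    for layer in range(len(A) - 2, 0, -1):
        D.append(D[-1].dot(W[layer].T) * A[layer] * (1 - A[layer]))
    D = D[::-1]
    return [A[i].T.dot(D[i]) for i in range(len(W))]

rng = np.random.default_rng(1)
W = [rng.standard_normal((3, 3)), rng.standard_normal((3, 1))]
x, y = np.array([1.0, 0.0, 1.0]), np.array([[1.0]])

grads = backprop_grads(W, x, y)

# Compare each analytic gradient entry with a central finite difference.
h = 1e-6
max_gap = 0.0
for w, g in zip(W, grads):
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + h
        up = loss(W, x, y)
        w[idx] = old - h
        down = loss(W, x, y)
        w[idx] = old  # restore the weight
        max_gap = max(max_gap, abs((up - down) / (2 * h) - g[idx]))

print(max_gap < 1e-6)  # True when the two gradients agree
```

If the two disagree by more than rounding error, the usual suspects are a delta list in the wrong order or a derivative applied to the weighted sum instead of the activation.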

5. Training the Network

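Finally, fit loops over the training data for a number of epochs, calls fit_partial on each sample, and periodically reports the loss. The listing calls calculate_loss, which the original does not define; a sum-of-squared-errors version is assumed here:

    def fit(self, X, y, epochs=1000, displayUpdate=100):
        # Append a column of ones so the bias is learned as an ordinary weight
        X_bias = np.c_[X, np.ones((X.shape[0]))]
        for epoch in range(epochs):
            for (x, target) in zip(X_bias, y):
                self.fit_partial(x, target)
            if epoch == 0 or (epoch + 1) % displayUpdate == 0:
                # predict() appends the bias column itself, so pass the raw X
                loss = self.calculate_loss(X, y)
                print(f"[INFO] epoch={epoch + 1}, loss={loss:.7f}")

    def calculate_loss(self, X, targets):
        # Assumption: not part of the original listing. Half the sum of squared
        # errors, which matches the output delta used in fit_partial.
        predictions = self.predict(X)
        targets = np.asarray(targets).reshape(predictions.shape)
        return 0.5 * np.sum((predictions - targets) ** 2)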

6. Testing the Network

After training, predict runs a forward pass on new inputs and returns the output-layer activations:

    def predict(self, X):
        # Accept a single sample or a batch, then append the bias column
        X = np.atleast_2d(X)
        p = np.c_[X, np.ones((X.shape[0]))]
        # Forward pass; only the final activations are needed here
        for layer in range(len(self.W)):
            p = self.sigmoid(p.dot(self.W[layer]))
        return p
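
To show the pieces working together, here is a compact end-to-end sketch that trains a 2-2-1 network on the XOR problem. It is written with standalone functions so it runs on its own; the dataset, seed, learning rate, and epoch count are assumptions chosen for illustration, and the exact loss values depend on the random initialization.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))

def forward(W, X):
    A = [np.atleast_2d(X)]
    for w in W:
        A.append(sigmoid(A[-1].dot(w)))
    return A

def train_step(W, x, y, alpha):
    """One stochastic gradient descent update on a single sample."""
    A = forward(W, x)
    D = [(A[-1] - y) * A[-1] * (1 - A[-1])]
    for layer in range(len(A) - 2, 0, -1):
        D.append(D[-1].dot(W[layer].T) * A[layer] * (1 - A[layer]))
    D = D[::-1]
    for layer in range(len(W)):
        W[layer] -= alpha * A[layer].T.dot(D[layer])

def sum_squared_error(W, Xb, y):
    return 0.5 * np.sum((forward(W, Xb)[-1] - y) ** 2)

# XOR dataset (illustrative choice)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)
Xb = np.c_[X, np.ones(X.shape[0])]  # append the bias column once

rng = np.random.default_rng(42)
W = [rng.standard_normal((3, 3)) / np.sqrt(2),
     rng.standard_normal((3, 1)) / np.sqrt(2)]

loss_before = sum_squared_error(W, Xb, y)
for epoch in range(5000):
    for x, target in zip(Xb, y):
        train_step(W, x, target, alpha=0.5)
loss_after = sum_squared_error(W, Xb, y)

preds = forward(W, Xb)[-1]  # one output per input row
print(f"loss before={loss_before:.4f}, after={loss_after:.4f}")
```

The loss should drop over training. Thresholding `preds` at 0.5 turns the outputs into class labels, though how well XOR is fitted after a given number of epochs varies with the initialization.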

Conclusion

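This example walks through a from-scratch implementation of a feedforward neural network trained with backpropagation in Python: weight initialization, the sigmoid activation, the forward and backward passes, the training loop, and prediction. The same steps can be used to build and train a small network on simple supervised learning problems.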