Mem0: A Scalable Memory Architecture for AI Context Conversations

AI systems need scalable memory to maintain context and coherence across long-running conversations. Mem0 is a memory-centric architecture designed to address the limited ability of Large Language Models (LLMs) to retain information beyond a fixed context window.

Challenges with Traditional LLMs

LLMs, while adept at generating contextually coherent responses, struggle with maintaining consistency over prolonged multi-session dialogues. This is primarily due to their reliance on fixed context windows, which limit their ability to persist information across sessions. This limitation is particularly problematic in applications requiring long-term engagement, such as personal assistance, health management, and tutoring.

Mem0: A Scalable Memory Architecture

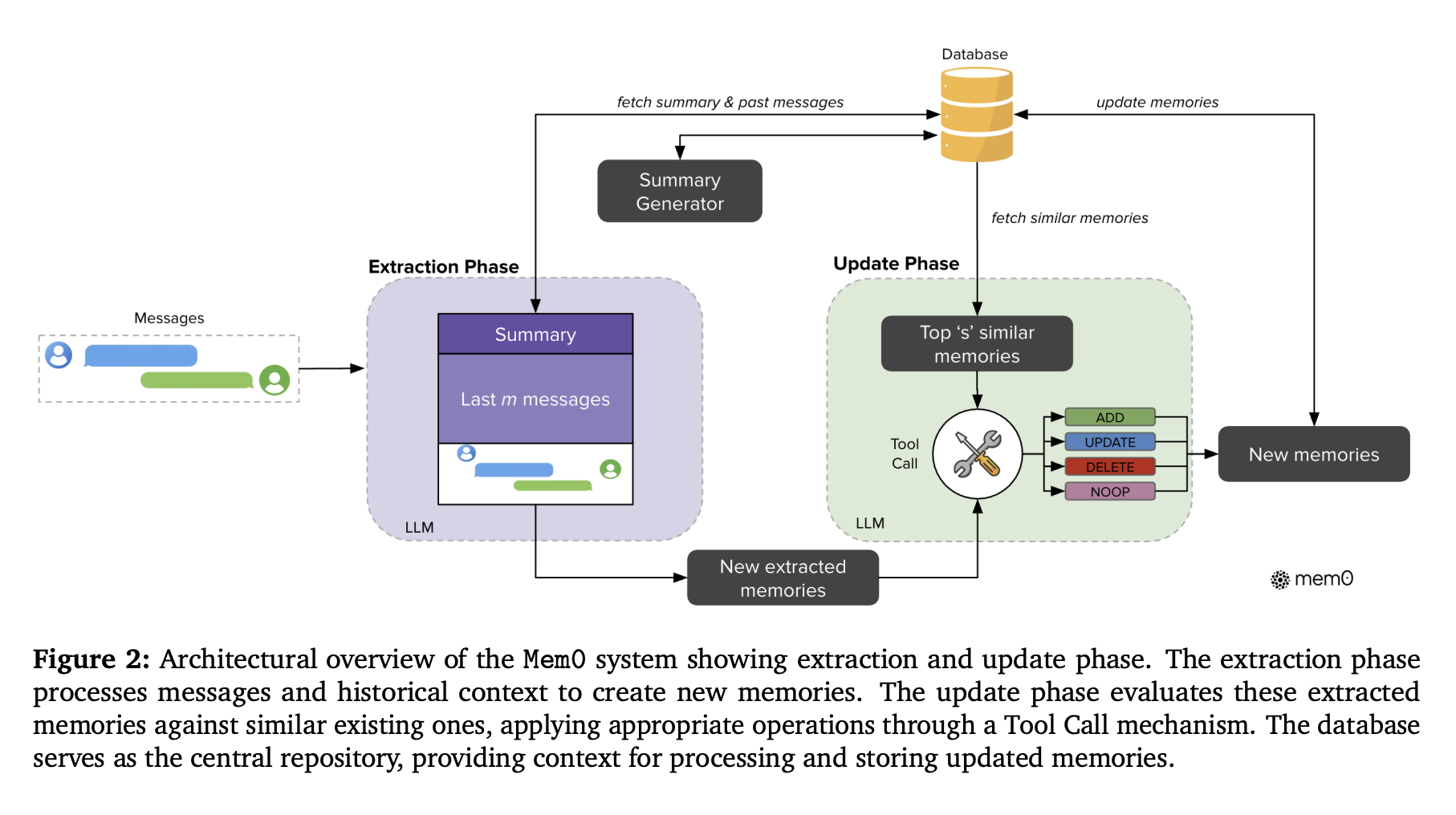

Mem0 introduces a dynamic mechanism to extract, consolidate, and retrieve salient information from ongoing conversations. It operates in two stages:

- Fact Extraction: The model processes pairs of messages (user questions and assistant responses) along with summaries of recent conversations. A language model extracts salient facts from this input.

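- Memory Management: Each extracted fact is compared with similar existing memories retrieved from a vector database. The language model then decides, through a tool call (function call), which operation to apply: add the fact as a new memory, update an existing memory, delete a memory the fact contradicts, or ignore the fact.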
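The two stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Mem0's implementation: `extract_facts`, `MemoryStore`, the `threshold` value, and the "no longer" rule are all hypothetical, word overlap stands in for embedding similarity, and plain rules stand in for the language model's extraction and tool-call decision.

```python
from dataclasses import dataclass, field
from enum import Enum


class Op(Enum):
    ADD = "add"
    UPDATE = "update"
    DELETE = "delete"
    NOOP = "noop"  # ignore the fact


def tokens(text: str) -> set:
    """Lowercased words with surrounding punctuation stripped."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if w}


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap; a toy stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def extract_facts(user_msg: str, assistant_msg: str, summary: str) -> list:
    """Stage 1 stand-in. A real system would prompt an LLM with all three
    inputs; here each sentence of the user message is a candidate fact."""
    return [s.strip() for s in user_msg.split(".") if s.strip()]


@dataclass
class MemoryStore:
    memories: dict = field(default_factory=dict)  # id -> memory text
    next_id: int = 0
    threshold: float = 0.3  # hypothetical cutoff for "same topic"

    def most_similar(self, fact: str):
        """Return (id, score) of the closest memory, or (None, 0.0)."""
        best_id, best_score = None, 0.0
        for mem_id, text in self.memories.items():
            score = similarity(fact, text)
            if score > best_score:
                best_id, best_score = mem_id, score
        return best_id, best_score

    def decide(self, fact: str):
        """Stage 2 stand-in for the LLM tool call: pick one operation."""
        mem_id, score = self.most_similar(fact)
        if mem_id is None or score < self.threshold:
            return Op.ADD, None
        if "no longer" in fact.lower():  # toy contradiction rule
            return Op.DELETE, mem_id
        if tokens(fact) == tokens(self.memories[mem_id]):
            return Op.NOOP, mem_id
        return Op.UPDATE, mem_id

    def apply(self, fact: str) -> Op:
        """Run the chosen operation against the store and report it."""
        op, mem_id = self.decide(fact)
        if op is Op.ADD:
            self.memories[self.next_id] = fact
            self.next_id += 1
        elif op is Op.UPDATE:
            self.memories[mem_id] = fact
        elif op is Op.DELETE:
            del self.memories[mem_id]
        return op


store = MemoryStore()
turns = [
    "I live in Berlin.",
    "I live in Berlin.",
    "I live in Munich.",
    "I no longer live in Munich.",
]
log = []
for user_msg in turns:
    for fact in extract_facts(user_msg, assistant_msg="Noted.", summary=""):
        log.append(store.apply(fact).value)

print(log)  # ['add', 'noop', 'update', 'delete']
```

The four turns exercise each operation once: a new fact is added, a repeat is ignored, a changed city overwrites the stored memory, and a retraction removes it. In a real deployment the comparison would run over embeddings and the branch in `decide` would be chosen by the model rather than by string rules.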

Graph-Enhanced Variant: Mem0g

Mem0g extends the base system by structuring information in relational graph formats. Entities (e.g., people, cities, preferences) become nodes, and relationships (e.g., "lives in", "prefers") become edges. This structured format supports complex reasoning across interconnected facts, enhancing the model's ability to trace relational paths across sessions.
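To make the node-and-edge idea concrete, here is a small Python sketch of a graph memory that stores facts as (source, relation, target) triples and traces a relational path between two entities. It is an illustration only: the `GraphMemory` class, its method names, and the sample facts are hypothetical, and an in-memory dictionary stands in for whatever graph store a real system would use.

```python
from collections import defaultdict, deque


class GraphMemory:
    """Directed, labeled graph: entities are nodes, relationships are edges."""

    def __init__(self):
        # node -> list of (relation, target) pairs
        self.edges = defaultdict(list)

    def add(self, source: str, relation: str, target: str) -> None:
        self.edges[source].append((relation, target))

    def path(self, start: str, goal: str):
        """Breadth-first search along edge direction.

        Returns the shortest chain of (source, relation, target) triples
        from start to goal, or None if goal is unreachable.
        """
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, trail = queue.popleft()
            if node == goal:
                return trail
            # .get avoids creating empty entries in the defaultdict
            for relation, target in self.edges.get(node, []):
                if target not in seen:
                    seen.add(target)
                    queue.append((target, trail + [(node, relation, target)]))
        return None


graph = GraphMemory()
graph.add("Alice", "lives in", "Berlin")          # learned in one session
graph.add("Berlin", "located in", "Germany")      # learned in another
graph.add("Alice", "prefers", "vegetarian food")

route = graph.path("Alice", "Germany")
print(route)
# [('Alice', 'lives in', 'Berlin'), ('Berlin', 'located in', 'Germany')]
```

The query links two facts that were stored separately, which is the kind of multi-hop question a flat list of memories handles poorly. Edges are directed in this sketch, so `graph.path("Germany", "Alice")` returns `None`.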

Performance and Efficiency

Mem0 has demonstrated significant improvements over existing memory systems:

- Accuracy: Mem0 achieves a 26% relative improvement in the LLM-as-a-Judge metric over OpenAI's memory system, and Mem0g scores about 2% higher overall than base Mem0.

- Latency: Mem0 attains a 91% lower p95 latency compared to full-context methods.

- Cost Efficiency: Mem0 saves more than 90% in token costs compared to full-context methods, making it practical for production use cases.
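The figures above are relative changes and a tail-latency percentile, which are easy to misread. The sketch below shows how such numbers are computed. Every value in it is made up purely to make the arithmetic visible and is not taken from the Mem0 evaluation; the helper names `relative_change` and `p95` are likewise hypothetical.

```python
import statistics


def relative_change(baseline: float, value: float) -> float:
    """Signed change of `value` relative to `baseline` (0.26 means +26%)."""
    return (value - baseline) / baseline


def p95(samples) -> float:
    """95th percentile: quantiles(n=100) gives 99 cut points, index 94 is p95."""
    return statistics.quantiles(samples, n=100)[94]


# Made-up judge scores, chosen only so the arithmetic is easy to follow.
baseline_score, new_score = 50.0, 63.0
score_gain = relative_change(baseline_score, new_score)

# Made-up per-request latencies in seconds for two hypothetical systems.
full_context = [float(x) for x in range(1, 101)]
with_memory = [x / 10 for x in full_context]
latency_change = relative_change(p95(full_context), p95(with_memory))

print(f"score: {score_gain:+.0%}, p95 latency: {latency_change:+.0%}")
```

With these illustrative inputs, a 13-point absolute gain on a baseline of 50 is a 26% relative improvement, so the two ways of reporting should not be confused. The p95 comparison looks only at the slowest 5% of requests, which is why it is a common measure of worst-case responsiveness.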

Applications

Mem0 is particularly suited for AI assistants in tutoring, healthcare, and enterprise settings where continuity of memory is essential. Its ability to handle multi-session dialogues efficiently makes it a reliable choice for long-term conversational coherence.

For details, see the Mem0 research paper on arXiv.