HyperChat MCP Server: A Multi-LLM Chat Client with Productivity Tools

by bigsweetpotatostudio

Overview

HyperChat is an open-source chat client that uses the APIs of various Large Language Models (LLMs) to deliver the best possible chat experience. It fully supports the Model Context Protocol (MCP) and includes productivity tools built on native Agents. HyperChat runs on Windows, macOS, and Linux and offers a user-friendly interface for managing and interacting with LLMs.

Key Features

- Multi-Platform Support: Runs on Windows, macOS, and Linux.

- Multi-LLM Integration: Supports OpenAI, Claude (via OpenRouter), Qwen, Deepseek, GLM, and Ollama.

- MCP Plugin Marketplace: Built-in marketplace for MCP plugins with one-click installation.

- Third-Party MCP Support: Manually install third-party MCP servers by specifying their command, args, and env.

- Productivity Tools: Includes tools for managing resources, prompts, and scheduled tasks.

- Dark Mode: Supports dark mode for a better user experience.

- Multi-Language Support: Available in both English and Chinese.

- Rendering Capabilities: Supports rendering of Artifacts, SVG, and HTML.

- WebDAV Syncing: Enables synchronization of data via WebDAV.

- RAG Integration: Adds Retrieval-Augmented Generation (RAG) based on MCP knowledge bases.

- ChatSpace Concept: Supports multiple simultaneous conversations.
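A third-party MCP server is described by the three fields named above: the command to launch, its args, and extra env variables. The TypeScript sketch below models such an entry and checks it before use. The McpServerEntry type and validateEntry helper are hypothetical illustrations, not HyperChat's actual configuration schema; the example entry assumes the reference fetch MCP server launched through uv's uvx runner.

```typescript
// Hypothetical shape of a manually installed (third-party) MCP server entry.
// HyperChat's real schema may differ; field names mirror "command, args, and env".
interface McpServerEntry {
  command: string;             // executable to launch, e.g. "uvx" or "npx"
  args: string[];              // arguments passed to the command
  env: Record<string, string>; // extra environment variables for the server process
}

// Returns a list of problems; an empty list means the entry looks usable.
// The runtime type checks guard against hand-edited JSON, where TypeScript
// types are not enforced.
function validateEntry(entry: McpServerEntry): string[] {
  const problems: string[] = [];
  if (entry.command.trim() === "") {
    problems.push("command must not be empty");
  }
  if (!entry.args.every((a) => typeof a === "string")) {
    problems.push("args must be strings");
  }
  for (const [key, value] of Object.entries(entry.env)) {
    if (key === "" || typeof value !== "string") {
      problems.push(`invalid env entry: ${key}`);
    }
  }
  return problems;
}

// Example entry (assumption): the reference "fetch" MCP server run via uvx.
const fetchServer: McpServerEntry = {
  command: "uvx",
  args: ["mcp-server-fetch"],
  env: {},
};

console.log(validateEntry(fetchServer)); // []
```

Keeping command and args separate, rather than one shell string, avoids quoting problems when arguments contain spaces.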

Demo

You can try the HyperChat demo on Docker: HyperChat Demo

Installation

Command Line Execution

npx -y @dadigua/hyper-chat

By default the server listens on port 16100 with the password 123456. Open http://localhost:16100/123456/ in your browser to access it.
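The access URL places the password as the first path segment after the port. The following TypeScript sketch derives it from the port and password; the accessUrl helper is hypothetical, and percent-encoding the password is an assumption for passwords that contain characters such as "/".

```typescript
// Builds the web UI address for a locally started HyperChat instance.
// Defaults follow the README (port 16100, password 123456); the helper
// itself is illustrative only.
function accessUrl(port = 16100, password = "123456", host = "localhost"): string {
  // The password is the first path segment, followed by a trailing slash.
  return `http://${host}:${port}/${encodeURIComponent(password)}/`;
}

console.log(accessUrl());               // http://localhost:16100/123456/
console.log(accessUrl(8080, "s3cret")); // http://localhost:8080/s3cret/
```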

Docker

docker pull dadigua/hyperchat-mini:latest

Prerequisites

- uv & nodejs: Ensure you have uv and nodejs installed on your system.

Installing uv

# MacOS

brew install uv

# Windows

winget install --id=astral-sh.uv -e

Installing nodejs

# MacOS

brew install node

# Windows

winget install OpenJS.NodeJS.LTS
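To confirm both prerequisites are on your PATH after installation, a short Node.js script can try running each tool with --version. This is a sketch, not part of HyperChat; the hasTool helper is hypothetical and assumes a tool is installed when that command exits with status 0.

```typescript
import { spawnSync } from "node:child_process";

// Returns true if `<tool> --version` starts and exits with status 0.
function hasTool(tool: string): boolean {
  const result = spawnSync(tool, ["--version"], { encoding: "utf8" });
  // result.error is set when the executable cannot be found (e.g. ENOENT).
  return result.error === undefined && result.status === 0;
}

for (const tool of ["uv", "node"]) {
  console.log(`${tool}: ${hasTool(tool) ? "found" : "missing"}`);
}
```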

Usage

- Configure the API key: Set the API key for your LLM service, and make sure the service is compatible with OpenAI-style APIs.

- Run HyperChat: Use the command line or Docker to start the server.

- Access the Web Interface: Open your browser and navigate to the provided URL.
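Because HyperChat expects providers with OpenAI-style APIs, a compatible service should accept a request shaped like the one built below: a POST to the base URL plus /chat/completions, a Bearer token in the Authorization header, and a JSON body with model and messages. This sketches the OpenAI Chat Completions request format, not HyperChat's internal code. The buildChatRequest helper, the placeholder key, and the model name are hypothetical; the base URL assumes Ollama's OpenAI-compatible endpoint on its default port.

```typescript
// Minimal sketch of an OpenAI-style chat completion request.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildChatRequest(
  baseUrl: string,
  apiKey: string,
  model: string,
  messages: ChatMessage[],
): ChatRequest {
  // Strip trailing slashes so ".../v1/" and ".../v1" give the same endpoint.
  const root = baseUrl.replace(/\/+$/, "");
  return {
    url: `${root}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages }),
    },
  };
}

// Placeholder values (assumptions), not defaults shipped with HyperChat.
const req = buildChatRequest("http://localhost:11434/v1/", "sk-placeholder", "your-model", [
  { role: "user", content: "Hello" },
]);
console.log(req.url); // http://localhost:11434/v1/chat/completions
// Against a live endpoint: const res = await fetch(req.url, req.init);
```

If a provider rejects this request, it is probably not OpenAI-compatible, and HyperChat is unlikely to work with it directly.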

Development

To contribute to HyperChat, set up a development environment by running each of the following commands from the repository root:

cd electron && npm install

cd web && pnpm install

npm install

npm run dev

Examples

Calling Shell MCP

Scheduled Task List

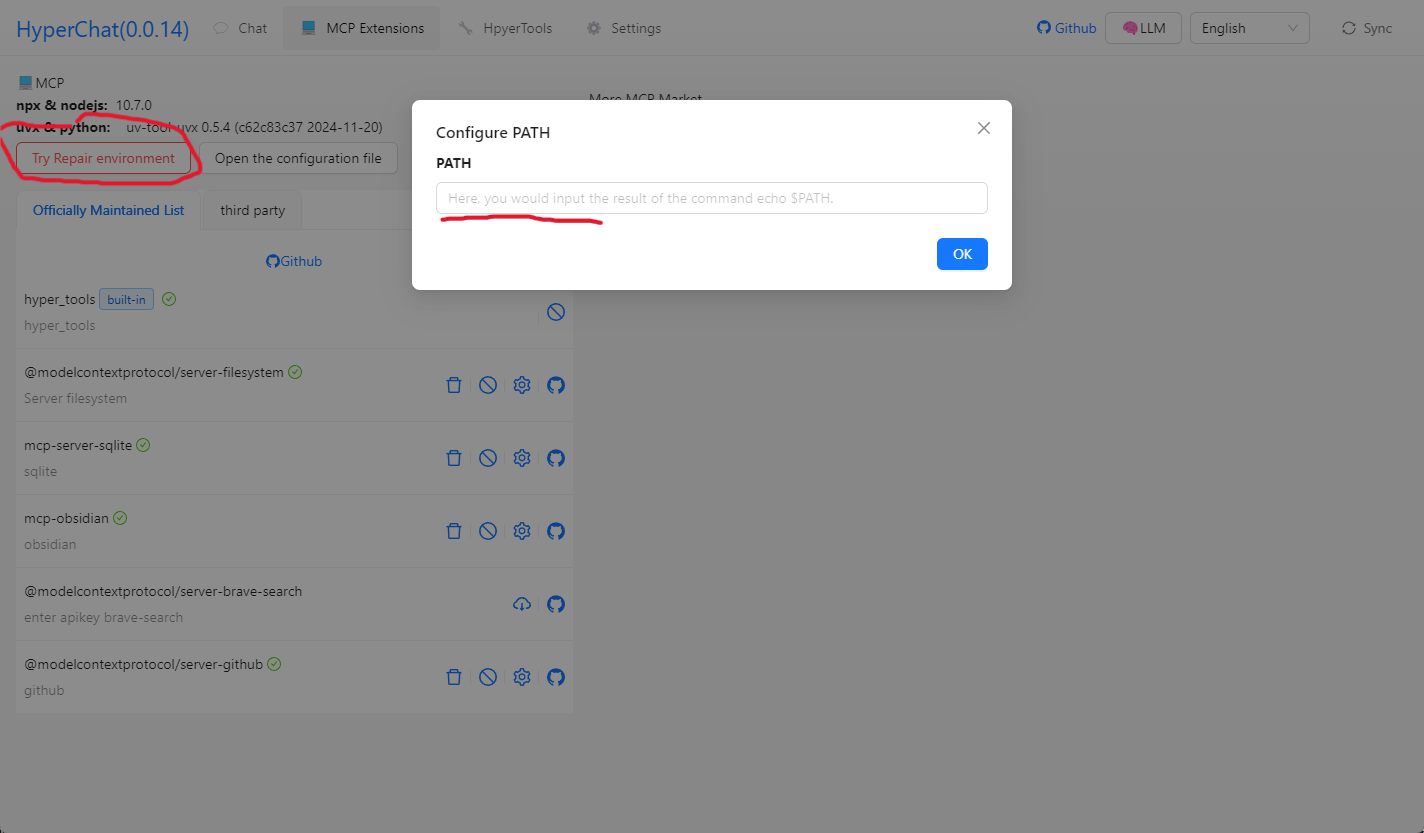

MCP Marketplace

HTML Rendering

Disclaimer

This project is intended for learning and communication purposes only. Any actions taken using this project, such as web scraping, are the responsibility of the user and not the developers.

About

HyperChat is developed by BigSweetPotatoStudio and is available under an open-source license. For more information, visit the GitHub repository.

License

View the license for more details.

Languages

- JavaScript 72.2%

- TypeScript 25.9%

- CSS 1.6%

- Other 0.3%