galaxyllmci_lyraios

by GalaxyLLMCI

LYRAI Multi-AI Agent Operating System

Overview & Technical Foundation

LYRAI is a Model Context Protocol (MCP) operating system for multi-agent AI applications. It extends AI applications by letting them interact with financial networks and public blockchains. The server offers a range of AI assistants covering public blockchain operations (SOLANA, ETH, etc.), fintech market analysis, and learning systems for the education sector.

Check out the demo of our LYRA MCP-OS!

Core Innovations & Differentiated Value

LYRAIOS aims to be a next-generation AI agent operating system, with advances in three areas:

- Open Protocol Architecture: Modular integration protocol supporting plug-and-play third-party tools/services.

- Multi-Agent Collaboration Engine: Distributed task orchestration system enabling dynamic multi-agent collaboration.

- Cross-Platform Runtime Environment: Cross-terminal AI runtime environment for smooth migration from personal assistants to enterprise digital employees.

For detailed architecture information, see the Architecture Documentation.

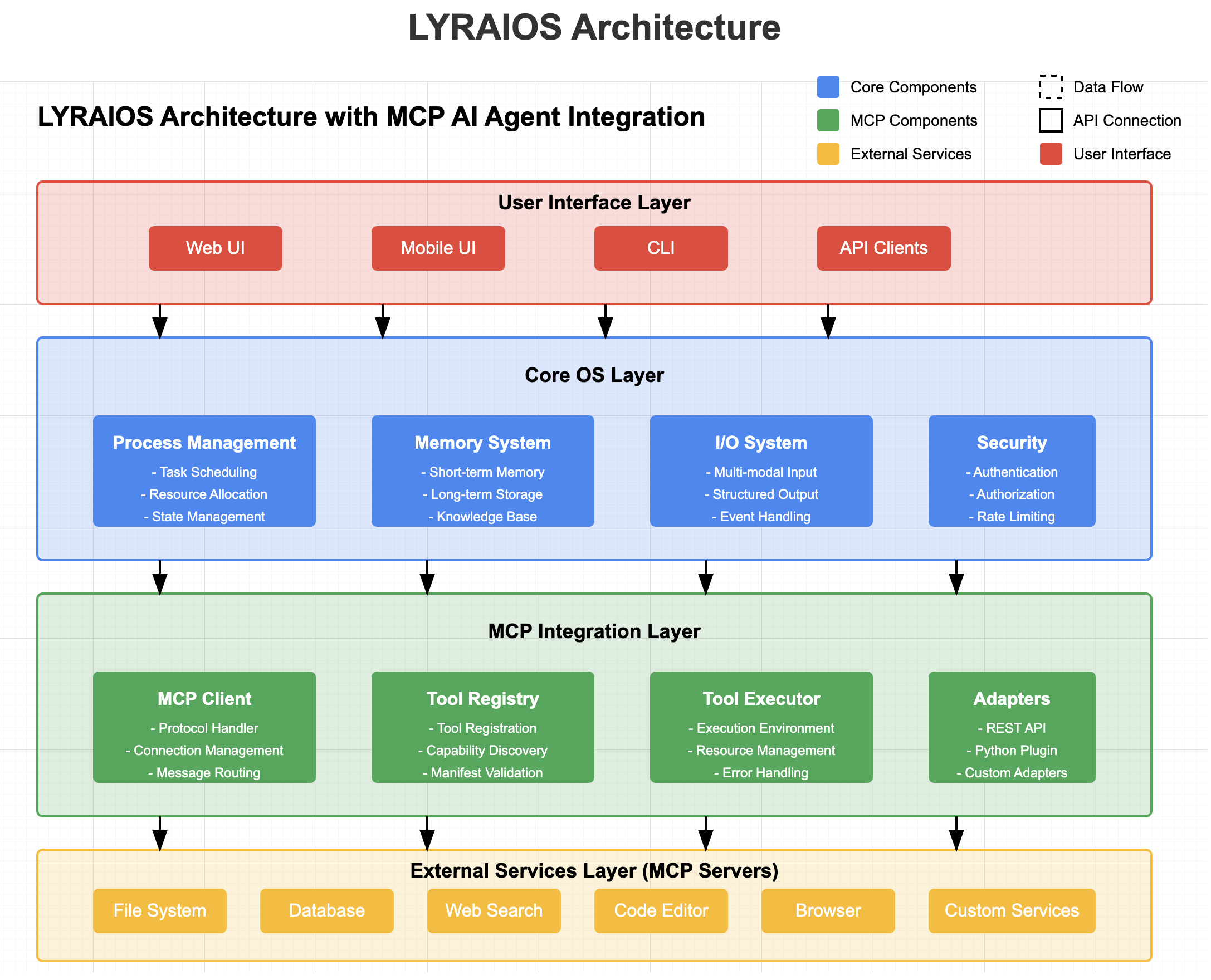

System Architecture

LYRAIOS uses a layered architecture with four layers: the user interface layer, the core OS layer, the MCP integration layer, and the external services layer.

User Interface Layer

Provides multiple interaction modes:

- Web UI: Based on Streamlit.

- Mobile UI: Interface adapted for mobile devices.

- CLI: Command line interface.

- API Clients: API interfaces for third-party integration.

Core OS Layer

Implements basic functions of the AI operating system:

- Process Management: Task scheduling, resource allocation, state management.

- Memory System: Short-term memory, long-term storage, knowledge base.

- I/O System: Multi-modal input, structured output, event handling.

- Security & Access Control: Authentication, authorization, rate limiting.

MCP Integration Layer

Achieves seamless integration with external services through the Model Context Protocol:

- MCP Client: Protocol handler, connection management, message routing.

- Tool Registry: Tool registration, capability discovery, manifest validation.

- Tool Executor: Execution environment, resource management, error handling.

- Adapters: REST API, Python plugin, custom adapters.

External Services Layer

Includes the services integrated through MCP:

- File System: File read and write capabilities.

- Database: Data storage and query capabilities.

- Web Search: Internet search capabilities.

- Code Editor: Code editing and execution capabilities.

- Browser: Web browsing and interaction capabilities.

- Custom Services: Other custom services integration.

Tool Integration Protocol

The Tool Integration Protocol is a key component of LYRAIOS's Open Protocol Architecture.

Key Features

- Standardized Tool Manifest: Define tools using a JSON schema.

- Pluggable Adapter System: Support for different tool types.

- Secure Execution Environment: Controlled environment with resource limits.

- Versioning and Dependency Management: Track tool versions and dependencies.

- Monitoring and Logging: Comprehensive logging of tool execution.

Getting Started with Tool Integration

- Define Tool Manifest: Create a JSON file describing your tool's capabilities.

- Implement Tool: Develop the tool functionality according to the protocol.

- Register Tool: Use the API to register your tool with LYRAIOS.

- Use Tool: Your tool is now available for use by LYRAIOS agents.

For examples and detailed documentation, see the Tool Integration Guide.
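As a rough illustration of the first step, the sketch below parses a tool manifest and checks it for a handful of required fields. The field names (`name`, `version`, `description`, `adapter`, `capabilities`) and the `weather-lookup` tool are hypothetical and chosen only for this example; the actual manifest schema is the one described in the Tool Integration Guide.

```python
import json

# Hypothetical required fields and their types; the real schema may differ.
REQUIRED_FIELDS = {
    "name": str,
    "version": str,
    "description": str,
    "adapter": str,
    "capabilities": list,
}


def validate_manifest(manifest: dict) -> list:
    """Return a list of problems; an empty list means the manifest passed."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in manifest:
            problems.append(f"missing field: {field}")
        elif not isinstance(manifest[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


# An illustrative manifest for a made-up tool.
manifest = json.loads("""
{
  "name": "weather-lookup",
  "version": "0.1.0",
  "description": "Returns the current weather for a city.",
  "adapter": "rest",
  "capabilities": ["get_current_weather"]
}
""")

problems = validate_manifest(manifest)
```

Running a check like this before registration catches malformed manifests early. Full JSON Schema validation would be the natural next step once the real schema is at hand.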

MCP Protocol Overview

The Model Context Protocol (MCP) is a client-server protocol for connecting LLM applications with integrations.

MCP Function Support

- Resources: Allow attaching local files and data.

- Prompts: Support prompt templates.

- Tools: Integrate tools that execute commands and scripts.

- Sampling: Support sampling functions (planned).

- Roots: Support root directory functions (planned).

Data Flow

User Request Processing Flow

- User sends request through the interface layer.

- Core OS layer receives the request and processes it.

- If external tool support is needed, the request is forwarded to the MCP integration layer.

- MCP client connects to the corresponding MCP server.

- External service executes the request and returns the result.

- The result is returned to the user through each layer.

Tool Execution Flow

- AI Agent determines that a specific tool is needed.

- Tool registry looks up tool definition and capabilities.

- Tool executor prepares execution environment.

- Adapter converts request to tool-understandable format.

- Tool executes and returns the result.

- The result is returned to the AI Agent for processing.
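The tool execution flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the LYRAIOS implementation: the class names `ToolDefinition`, `ToolRegistry`, and `ToolExecutor`, the `ADAPTERS` table, and the request shape (`{"tool": ..., "arguments": ...}`) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolDefinition:
    name: str
    adapter: str  # key into ADAPTERS (hypothetical)
    handler: Callable[[Dict[str, Any]], Any]


class ToolRegistry:
    """Looks up tool definitions by name."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolDefinition] = {}

    def register(self, tool: ToolDefinition) -> None:
        self._tools[tool.name] = tool

    def lookup(self, name: str) -> ToolDefinition:
        if name not in self._tools:
            raise LookupError(f"unknown tool: {name}")
        return self._tools[name]


def python_plugin_adapter(request: Dict[str, Any]) -> Dict[str, Any]:
    """Convert an agent request into the arguments a Python plugin expects."""
    return dict(request.get("arguments", {}))


ADAPTERS = {"python": python_plugin_adapter}


class ToolExecutor:
    """Runs a tool and wraps the outcome so the agent always gets a result."""

    def __init__(self, registry: ToolRegistry) -> None:
        self.registry = registry

    def execute(self, request: Dict[str, Any]) -> Dict[str, Any]:
        try:
            tool = self.registry.lookup(request["tool"])  # registry lookup
            payload = ADAPTERS[tool.adapter](request)     # adapter conversion
            return {"ok": True, "result": tool.handler(payload)}
        except Exception as exc:  # error handling: report instead of crashing
            return {"ok": False, "error": str(exc)}


registry = ToolRegistry()
registry.register(
    ToolDefinition(name="add", adapter="python", handler=lambda a: a["a"] + a["b"])
)
executor = ToolExecutor(registry)
result = executor.execute({"tool": "add", "arguments": {"a": 2, "b": 3}})
# result == {"ok": True, "result": 5}
```

Returning a result dictionary rather than raising keeps failures visible to the agent, which can then decide whether to retry or pick another tool.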

Setup Workspace

# Clone the repo

git clone https://github.com/GalaxyLLMCI/lyraios

cd lyraios

# Create + activate a virtual env

python3 -m venv aienv

source aienv/bin/activate

# Install phidata

pip install 'phidata[aws]'

# Setup workspace

phi ws setup

# Copy example secrets

cp workspace/example_secrets workspace/secrets

# Create .env file

cp example.env .env

# Run Lyraios locally

phi ws up

# Open [localhost:8501](http://localhost:8501) to view the Streamlit App.

# Stop Lyraios locally

phi ws down

Run Lyraios Locally

- Install Docker Desktop.

- Export credentials:

export OPENAI_API_KEY=sk-***

# To use Exa for research, export your EXA_API_KEY (get it from [here](https://dashboard.exa.ai/api-keys))

export EXA_API_KEY=xxx

# To use Gemini for research, export your GOOGLE_API_KEY (get it from [here](https://console.cloud.google.com/apis/api/generativelanguage.googleapis.com/overview?project=lyraios))

export GOOGLE_API_KEY=xxx

# OR set them in the `.env` file

OPENAI_API_KEY=xxx

EXA_API_KEY=xxx

GOOGLE_API_KEY=xxx

# Start the workspace using:

phi ws up

# Open [localhost:8501](http://localhost:8501) to view the Streamlit App.

# Stop the workspace using:

phi ws down

API Documentation

REST API Endpoints

Assistant API

POST /api/v1/assistant/chat: Process chat messages with the AI assistant.

Health Check

GET /api/v1/health: Monitor system health status.
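A minimal client sketch for the two endpoints above, using only the Python standard library. Several details here are assumptions rather than documented behavior: the backend is taken to listen on the default `API_SERVER_PORT` (8000), the chat request body is assumed to be JSON with a single `message` field, and responses are assumed to be JSON. Check the interactive documentation at `/docs` for the actual request and response schemas.

```python
import json
import urllib.request

# Assumption: the FastAPI backend runs locally on the default port 8000.
BASE_URL = "http://localhost:8000"


def build_chat_request(message: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat endpoint."""
    # Assumption: the body is JSON with a single "message" field.
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/v1/assistant/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_health_request(base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request for the health endpoint."""
    return urllib.request.Request(url=f"{base_url}/api/v1/health", method="GET")


def send(request: urllib.request.Request, timeout: float = 10.0) -> dict:
    """Send a request and decode the response, assuming a JSON body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the backend running, `send(build_health_request())` checks the service, and `send(build_chat_request("Hello"))` posts a chat message.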

API Documentation

- Interactive API documentation is available at `/docs`.

- ReDoc alternative documentation is available at `/redoc`.

- The OpenAPI specification is available at `/openapi.json`.

Development Guide

Project Structure

lyraios/

├── ai/ # AI core functionality

│ ├── assistants.py # Assistant implementations

│ ├── llm/ # LLM integration

│ └── tools/ # AI tools implementations

├── app/ # Main application

│ ├── components/ # UI components

│ ├── config/ # Application configuration

│ ├── db/ # Database models and storage

│ ├── styles/ # UI styling

│ ├── utils/ # Utility functions

│ └── main.py # Main application entry point

├── assets/ # Static assets like images

├── data/ # Data storage

├── tests/ # Test suite

├── workspace/ # Workspace configuration

│ ├── dev_resources/ # Development resources

│ ├── settings.py # Workspace settings

│ └── secrets/ # Secret configuration (gitignored)

├── docker/ # Docker configuration

├── scripts/ # Utility scripts

├── .env # Environment variables

├── requirements.txt # Python dependencies

└── README.md # Project documentation

Environment Configuration

- Environment Variables Setup

# Copy the example .env file

cp example.env .env

# Required environment variables

EXA_API_KEY=your_exa_api_key_here # Get from https://dashboard.exa.ai/api-keys

OPENAI_API_KEY=your_openai_api_key_here # Get from OpenAI dashboard

OPENAI_BASE_URL=your_openai_base_url # Optional: Custom OpenAI API endpoint

# OpenAI Model Configuration

OPENAI_CHAT_MODEL=gpt-4-turbo-preview # Default chat model

OPENAI_VISION_MODEL=gpt-4-vision-preview # Model for vision tasks

OPENAI_EMBEDDING_MODEL=text-embedding-3-small # Model for embeddings

# Optional configuration

STREAMLIT_SERVER_PORT=8501 # Default Streamlit port

API_SERVER_PORT=8000 # Default FastAPI port

- Streamlit Configuration

# Create Streamlit config directory

mkdir -p ~/.streamlit

# Create config.toml to disable usage statistics (optional)

cat > ~/.streamlit/config.toml << EOL

[browser]

gatherUsageStats = false

EOL
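To show how the environment variables listed above might be consumed, here is a small settings loader. It is a sketch, not the project's own configuration code: the `Settings` class and `load_settings` function are hypothetical names, while the variable names and defaults are the ones given in this section.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    openai_api_key: str
    exa_api_key: str
    openai_base_url: Optional[str]
    openai_chat_model: str
    openai_vision_model: str
    openai_embedding_model: str
    streamlit_server_port: int
    api_server_port: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read settings from a mapping, failing fast on missing required keys."""
    required = ("OPENAI_API_KEY", "EXA_API_KEY")
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise RuntimeError("missing required environment variables: " + ", ".join(missing))
    return Settings(
        openai_api_key=env["OPENAI_API_KEY"],
        exa_api_key=env["EXA_API_KEY"],
        openai_base_url=env.get("OPENAI_BASE_URL") or None,  # optional
        openai_chat_model=env.get("OPENAI_CHAT_MODEL", "gpt-4-turbo-preview"),
        openai_vision_model=env.get("OPENAI_VISION_MODEL", "gpt-4-vision-preview"),
        openai_embedding_model=env.get("OPENAI_EMBEDDING_MODEL", "text-embedding-3-small"),
        streamlit_server_port=int(env.get("STREAMLIT_SERVER_PORT", "8501")),
        api_server_port=int(env.get("API_SERVER_PORT", "8000")),
    )
```

The loader reads the process environment only. To pick up values from the `.env` file, call `load_dotenv()` from the python-dotenv package before `load_settings()`.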

Development Scripts

- Using dev.py Script

# Run both frontend and backend

python -m scripts.dev run

# Run only frontend

python -m scripts.dev run --no-backend

# Run only backend

python -m scripts.dev run --no-frontend

# Run with custom ports

python -m scripts.dev run --frontend-port 8502 --backend-port 8001

- Manual Service Start

# Start Streamlit frontend

streamlit run app/app.py

# Start FastAPI backend

uvicorn api.main:app --reload

Dependencies Management

- Core Dependencies

# Install production dependencies

pip install -r requirements.txt

# Install development dependencies

pip install -r requirements-dev.txt

# Install the project in editable mode

pip install -e .

- Additional Tools

# Install python-dotenv for environment management

pip install python-dotenv

# Install development tools

pip install black isort mypy pytest pytest-cov pre-commit

Development Best Practices

- Code Style

  - Follow PEP 8 guidelines.

  - Use type hints.

  - Write docstrings for functions and classes.

  - Use black for code formatting.

  - Use isort for import sorting.

- Testing

# Run tests

pytest

# Run tests with coverage

pytest --cov=app tests/

- Pre-commit Hooks

# Install pre-commit hooks

pre-commit install

# Run manually

pre-commit run --all-files

Deployment Guide

Docker Deployment

- Development Environment

# Build development image

docker build -f docker/Dockerfile.dev -t lyraios:dev .

# Run development container

docker-compose -f docker-compose.dev.yml up

- Production Environment

# Build production image

docker build -f docker/Dockerfile.prod -t lyraios:prod .

# Run production container

docker-compose -f docker-compose.prod.yml up -d

Configuration Options

- Environment Variables

# Application Settings

DEBUG=false

LOG_LEVEL=INFO

ALLOWED_HOSTS=example.com,api.example.com

# AI Settings

AI_MODEL=gpt-4

AI_TEMPERATURE=0.7

AI_MAX_TOKENS=1000

# Database Settings

DATABASE_URL=postgresql://user:pass@localhost:5432/dbname

- Scaling Options

  - Configure worker processes via `GUNICORN_WORKERS`.

  - Adjust memory limits via `MEMORY_LIMIT`.

  - Set concurrency via `MAX_CONCURRENT_REQUESTS`.

Monitoring and Maintenance

- Health Checks

  - Monitor the `/health` endpoint.

  - Check system metrics via Prometheus endpoints.

  - Review logs in `/var/log/lyraios/`.

- Backup and Recovery

# Backup database

python scripts/backup_db.py

# Restore from backup

python scripts/restore_db.py --backup-file backup.sql

- Troubleshooting

  - Check application logs.

  - Verify environment variables.

  - Ensure database connectivity.

  - Monitor system resources.

Database Configuration

The system supports both SQLite and PostgreSQL databases:

- SQLite (Default)

# SQLite Configuration

DATABASE_TYPE=sqlite

DATABASE_PATH=data/lyraios.db

- PostgreSQL

# PostgreSQL Configuration

DATABASE_TYPE=postgres

POSTGRES_HOST=localhost

POSTGRES_PORT=5432

POSTGRES_DB=lyraios

POSTGRES_USER=postgres

POSTGRES_PASSWORD=your_password

The system will automatically use SQLite if no PostgreSQL configuration is provided.
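The fallback rule can be sketched as a single function that turns the variables above into a connection URL. This is an illustration under assumptions: the function name `resolve_database_url` is hypothetical, the URL formats follow the common SQLAlchemy-style conventions, and "no PostgreSQL configuration" is interpreted here as `DATABASE_TYPE` not being `postgres` or `POSTGRES_HOST` being unset. The project's actual logic may differ.

```python
import os
from typing import Mapping
from urllib.parse import quote


def resolve_database_url(env: Mapping[str, str] = os.environ) -> str:
    """Return a PostgreSQL URL when configured, otherwise a SQLite URL."""
    wants_postgres = env.get("DATABASE_TYPE", "sqlite").lower() == "postgres"
    if wants_postgres and env.get("POSTGRES_HOST"):
        host = env["POSTGRES_HOST"]
        port = env.get("POSTGRES_PORT", "5432")
        database = env.get("POSTGRES_DB", "lyraios")
        user = quote(env.get("POSTGRES_USER", "postgres"), safe="")
        # Percent-encode the password so characters like "@" or "/" stay valid.
        password = quote(env.get("POSTGRES_PASSWORD", ""), safe="")
        return f"postgresql://{user}:{password}@{host}:{port}/{database}"
    # Fallback: SQLite at the documented default path.
    path = env.get("DATABASE_PATH", "data/lyraios.db")
    return f"sqlite:///{path}"
```

With an empty environment the function returns `sqlite:///data/lyraios.db`, which matches the documented default.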

Contributing

We welcome contributions! Please see the CONTRIBUTING.md file for details.

License

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.
