
prajwalshettydev / UnrealGenAISupport Public


UnrealMCP is here!! Automatic blueprint and scene generation from AI!! An Unreal Engine plugin for LLM/GenAI models & MCP UE5 server. Supports Claude Desktop App & Cursor, also includes OpenAI's GPT4o, DeepseekR1 and Claude Sonnet 3.7 APIs with plans to add Gemini, Grok 3, audio & realtime APIs soon.

github.com/prajwalshettydev/UnrealGenAISupport/wiki


Unreal Engine Generative AI Support Plugin

Usage Examples:

MCP Example:

Claude spawning scene objects and controlling their transformations and materials, generating blueprints, functions, variables, adding components, running python scripts etc.

API Example:

A project called "Become Human", where NPCs are OpenAI agentic instances, built using this plugin.

Warning

This plugin is still under rapid development.

- Do not use it in production environments. ⚠️

- Do not use it without version control. ⚠️

A stable version will be released soon. 🚀🔥

Every month, hundreds of new AI models are released by various organizations, making it hard to keep up with the latest advancements.

The Unreal Engine Generative AI Support Plugin allows you to focus on game development without worrying about the LLM/GenAI integration layer.

Currently integrating Model Context Protocol (MCP) with Unreal Engine 5.5.

This project aims to build a long-term support (LTS) plugin for various cutting-edge LLM/GenAI models and foster a community around it. It currently includes OpenAI's GPT-4o, Deepseek R1, Claude Sonnet 3.7 and GPT-4o-mini for Unreal Engine 5.1 or higher, with plans to add real-time APIs, Gemini, MCP and Grok 3 APIs soon. The plugin will focus exclusively on APIs useful for game development, evals and interactive experiences. All suggestions and contributions are welcome. The plugin can also be used to set up new evals and ways to compare models in game battlefields.

Current Progress:

LLM/GenAI API Support:

- OpenAI API Support:
  - OpenAI Chat API ✅ (models-ref)
    - gpt-4o, gpt-4o-mini Model ✅
    - gpt-4.5-preview Model 🛠️
    - o1-mini, o1, o1-pro Model 🚧
    - o3-mini Model 🛠️
  - OpenAI DALL-E API ❌ (Until new generation models are released)
  - OpenAI Vision API 🚧
  - OpenAI Realtime API 🛠️
    - gpt-4o-realtime-preview, gpt-4o-mini-realtime-preview Model 🛠️
  - OpenAI Structured Outputs ✅
  - OpenAI Whisper API 🚧

- Anthropic Claude API Support:
  - Claude Chat API ✅
    - claude-3-7-sonnet-latest Model ✅
    - claude-3-5-sonnet Model ✅
    - claude-3-5-haiku-latest Model ✅
    - claude-3-opus-latest Model ✅
  - Claude Vision API 🚧

- XAI (Grok 3) API Support:
  - XAI Chat Completions API 🚧
    - grok-beta Model 🚧
    - grok-beta Streaming API 🚧
  - XAI Image API 🚧

- Google Gemini API Support:
  - Gemini Chat API 🚧🤝
    - gemini-2.0-flash-lite, gemini-2.0-flash, gemini-1.5-flash Model 🚧🤝
  - Gemini Imagen API 🚧
    - imagen-3.0-generate-002 Model 🚧

- Meta AI API Support:
  - Llama Chat API ❌ (Until new generation models are released)
    - llama3.3-70b Model ❌
    - llama3.1-8b Model ❌
  - Local Llama API 🚧🤝

- Deepseek API Support:
  - Deepseek Chat API ✅
    - deepseek-chat (DeepSeek-V3) Model ✅
  - Deepseek Reasoning API, R1 ✅
    - deepseek-reasoning-r1 Model ✅
    - deepseek-reasoning-r1 CoT Streaming ❌
  - Independently Hosted Deepseek Models 🚧

- Baidu API Support:
  - Baidu Chat API 🚧
    - baidu-chat Model 🚧

- 3D generative model APIs:
  - TripoSR by StabilityAI 🚧

- Plugin Documentation 🛠️🤝
- Plugin Example Project 🛠️ here

- Version Control Support
  - Perforce Support 🚧
  - Git Submodule Support ✅
- LTS Branching 🚧
  - Stable Branch with Bug Fixes 🚧
  - Dedicated Contributor for LTS 🚧
- Lightweight Plugin (In Builds)
  - No External Dependencies ✅
  - Build Flags to enable/disable APIs 🚧
  - Submodules per API Organization 🚧
  - Exclude MCP from build 🚧
- Testing
  - Automated Testing 🚧
  - Different Platforms 🚧🤝
  - Different Engine Versions 🚧🤝

Unreal MCP (Model Context Protocol):

- Clients Support ✅
  - Claude Desktop App Support ✅
  - Cursor IDE Support ✅
  - OpenAI Operator API Support 🚧
- Blueprints Auto Generation 🛠️
  - Creating new blueprint of types ✅
  - Adding new functions, function/blueprint variables ✅
  - Adding nodes and connections 🛠️ (buggy)
  - Advanced Blueprints Generation 🛠️
- Level/Scene Control for LLMs 🛠️
  - Spawning Objects and Shapes ✅
  - Moving, rotating and scaling objects ✅
  - Changing materials and color ✅
  - Advanced scene features 🛠️
- Generative AI:
  - Prompt to 3D model fetch and spawn 🛠️
- Control:
  - Ability to run Python scripts ✅
  - Ability to run Console Commands ✅
- UI:
  - Widgets generation 🛠️
  - UI Blueprint generation 🛠️
- Project Files:
  - Create/Edit project files/folders ✅
  - Delete existing project files ❌
- Others:
  - Project Cleanup 🛠️

Where,

- ✅ - Completed

- 🛠️ - In Progress

- 🚧 - Planned

- 🤝 - Need Contributors

- ❌ - Won't Support For Now


Setting API Keys:

Note

You do not need to set an API key to test the MCP features in the Claude Desktop App. The Anthropic key is only needed for the Claude API.

For Editor:

Set the environment variable PS_<ORGNAME> to your API key.

For Windows:

```batchfile
setx PS_<ORGNAME> "your api key"
```

For Linux/MacOS:

- Run the following command in your terminal, replacing yourkey with your API key (use ~/.bashrc instead of ~/.zshrc if your shell is bash):

  ```shell
  echo "export PS_<ORGNAME>='yourkey'" >> ~/.zshrc
  ```

- Update the shell with the new variable:

  ```shell
  source ~/.zshrc
  ```

PS: Don't forget to restart the Editor and ALSO the connected IDE after setting the environment variable.

Where PS_<ORGNAME> is one of: PS_OPENAIAPIKEY, PS_DEEPSEEKAPIKEY, PS_ANTHROPICAPIKEY, PS_METAAPIKEY, PS_GOOGLEAPIKEY, etc.
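To make the lookup concrete, here is a minimal standalone sketch of reading such a key from the process environment. It is not the plugin's own code: ReadGenAIKey is a hypothetical helper that only assumes the PS_<ORGNAME> naming described above. It also illustrates why the Editor and IDE need a restart: a process only sees the environment it inherited at launch.

```cpp
#include <cstdlib>
#include <optional>
#include <string>

// Hypothetical helper (not part of the plugin): reads an API key stored in an
// environment variable such as "PS_OPENAIAPIKEY".
// Returns std::nullopt when the variable is missing or empty.
std::optional<std::string> ReadGenAIKey(const std::string& varName) {
    // std::getenv reads this process's own environment, inherited at launch;
    // keys added later with setx/export in another shell are not visible here.
    const char* value = std::getenv(varName.c_str());
    if (value == nullptr || value[0] == '\0') {
        return std::nullopt;
    }
    return std::string(value);
}
```

For example, ReadGenAIKey("PS_OPENAIAPIKEY") yields the OpenAI key if it was exported before the process started.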

For Packaged Builds:

Storing API keys in packaged builds is a security risk. This is what the OpenAI API documentation says about it:

"Exposing your OpenAI API key in client-side environments like browsers or mobile apps allows malicious users to take that key and make requests on your behalf – which may lead to unexpected charges or compromise of certain account data. Requests should always be routed through your own backend server where you can keep your API key secure."

Read more about it here.

For test builds, you can call GenSecureKey::SetGenAIApiKeyRuntime from either C++ or Blueprints with your API key in the packaged build.

Setting up MCP:

Note

If your project only uses the LLM APIs and not the MCP, you can skip this section.

Caution

Disclaimer: If you are using the MCP feature of the plugin, it will let the Claude Desktop App directly control your Unreal Engine project. Make sure you are aware of the security risks and only use it in a controlled environment.

Please back up your project before using the MCP feature and use version control to track changes.

1. Install either of the supported clients: Claude Desktop App or Cursor IDE.

2. Set up the MCP config JSON:

For Claude Desktop App:

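Edit the claude_desktop_config.json file in the Claude Desktop App's configuration directory (on macOS ~/Library/Application Support/Claude/, on Windows %APPDATA%\Claude\; you can also ask Claude where it is located on your platform!). The file will look something like this: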

```json
{
  "mcpServers": {
    "unreal-handshake": {
      "command": "python",
      "args": ["<your_project_directory_path>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py"],
      "env": {
        "UNREAL_HOST": "localhost",
        "UNREAL_PORT": "9877"
      }
    }
  }
}
```

For Cursor IDE:

Create or edit the .cursor/mcp.json file in your project directory. The file will look something like this:

```json
{
  "mcpServers": {
    "unreal-handshake": {
      "command": "python",
      "args": ["<your_project_directory_path>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py"],
      "env": {
        "UNREAL_HOST": "localhost",
        "UNREAL_PORT": "9877"
      }
    }
  }
}
```

3. Install mcp[cli] with either pip or uv (quote the package name so shells like zsh do not expand the brackets):

   ```shell
   pip install "mcp[cli]"
   ```

4. Enable the Python Editor Script Plugin in Unreal Engine (Edit -> Plugins -> Python Editor Script Plugin).
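The env block in both configs hands UNREAL_HOST and UNREAL_PORT to mcp_server.py. The sketch below, in C++ for consistency with the rest of this README, shows one way a process could resolve those two variables with fallbacks. It is an illustration under assumptions, not what mcp_server.py actually does: ResolveUnrealEndpoint is hypothetical, and its defaults simply copy the localhost/9877 values from the config examples.

```cpp
#include <cstdlib>
#include <string>

// Host/port pair used to reach the Unreal-side socket server.
struct UnrealEndpoint {
    std::string host;
    int port;
};

// Hypothetical resolver: UNREAL_HOST and UNREAL_PORT override the defaults,
// which are copied from the "env" block of the MCP config (localhost:9877).
UnrealEndpoint ResolveUnrealEndpoint() {
    UnrealEndpoint endpoint{"localhost", 9877};

    const char* host = std::getenv("UNREAL_HOST");
    if (host != nullptr && host[0] != '\0') {
        endpoint.host = host;
    }

    const char* port = std::getenv("UNREAL_PORT");
    if (port != nullptr) {
        char* end = nullptr;
        long value = std::strtol(port, &end, 10);
        // Accept only a fully numeric value inside the valid TCP port range;
        // anything else keeps the default.
        if (end != port && *end == '\0' && value > 0 && value <= 65535) {
            endpoint.port = static_cast<int>(value);
        }
    }
    return endpoint;
}
```

Rejecting malformed ports instead of passing them through keeps a typo in the JSON config from turning into a confusing connection error.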

Adding the plugin to your project:

With Git:

- Add the Plugin Repository as a Submodule in your project's repository.

  ```batchfile
  git submodule add https://github.com/prajwalshettydev/UnrealGenAISupport Plugins/GenerativeAISupport
  ```

- Regenerate Project Files: Right-click your .uproject file and select Generate Visual Studio project files.

- Enable the Plugin in Unreal Editor: Open your project in Unreal Editor. Go to Edit > Plugins. Search for the Plugin in the list and enable it.

- For Unreal C++ Projects, include the Plugin's module in your project's Build.cs file:

  ```csharp
  PrivateDependencyModuleNames.AddRange(new string[] { "GenerativeAISupport" });
  ```

With Perforce:

Still in development.

With Unreal Marketplace:

Coming soon, for free, in the Unreal Engine Marketplace.

Fetching the Latest Plugin Changes:

With Git:

You can pull the latest changes with:

```shell
cd Plugins/GenerativeAISupport
git pull origin main
```

Or update all submodules in the project:

```shell
git submodule update --recursive --remote
```

With Perforce:

Still in development.

Usage:

There is an example Unreal project that already implements the plugin. You can find it here.

OpenAI:

Currently the plugin supports Chat and Structured Outputs from the OpenAI API, for both C++ and Blueprints. Tested models are gpt-4o, gpt-4o-mini, gpt-4.5, o1-mini, o1 and o3-mini-high.

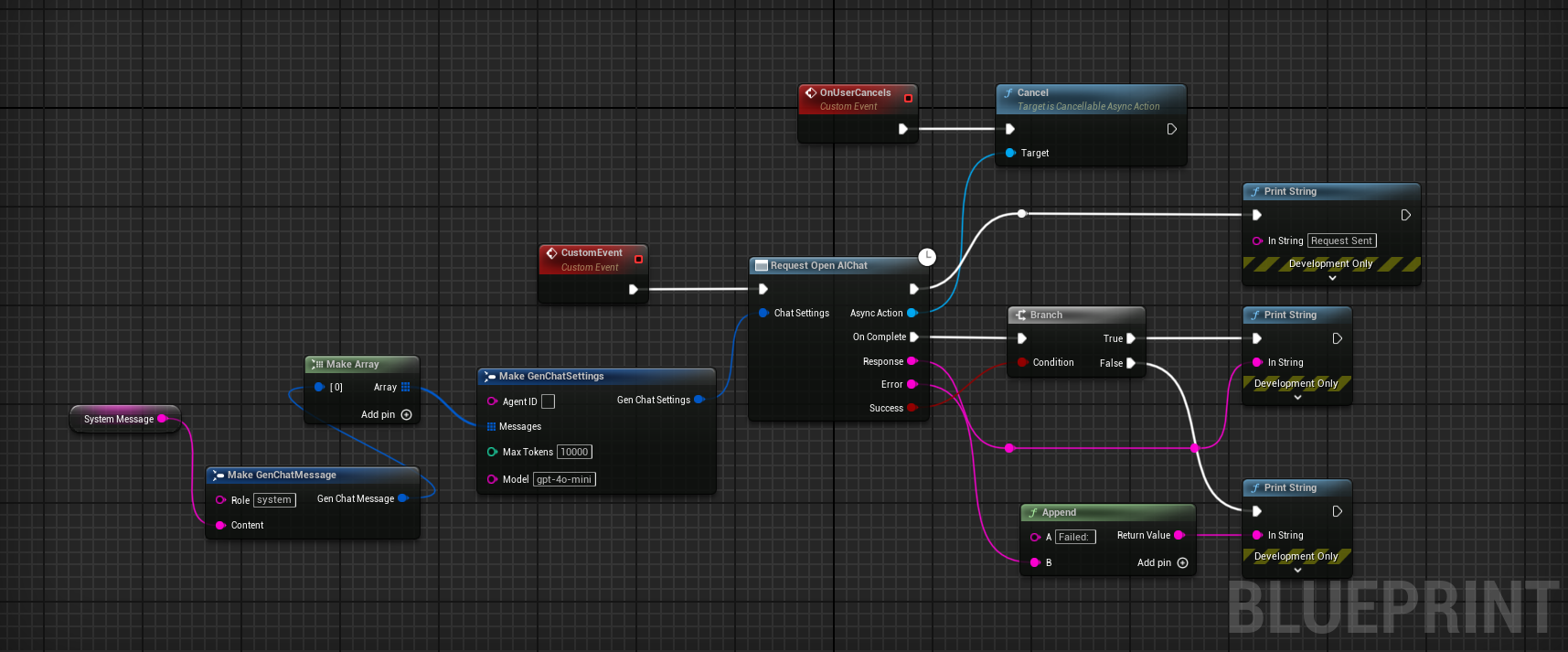

1. Chat:

C++ Example:

```cpp
// Sends Prompt to gpt-4o-mini and forwards the result to Callback.
void SomeDebugSubsystem::CallGPT(const FString& Prompt,
    const TFunction<void(const FString&, const FString&, bool)>& Callback)
{
    FGenChatSettings ChatSettings;
    ChatSettings.Model = TEXT("gpt-4o-mini");
    ChatSettings.MaxTokens = 500;
    // The prompt is passed as a system message.
    ChatSettings.Messages.Add(FGenChatMessage{ TEXT("system"), Prompt });

    // Wrap the caller's callback in the plugin's completion delegate.
    FOnChatCompletionResponse OnComplete = FOnChatCompletionResponse::CreateLambda(
        [Callback](const FString& Response, const FString& ErrorMessage, bool bSuccess)
        {
            Callback(Response, ErrorMessage, bSuccess);
        });

    UGenOAIChat::SendChatRequest(ChatSettings, OnComplete);
}
```

Blueprint Example:

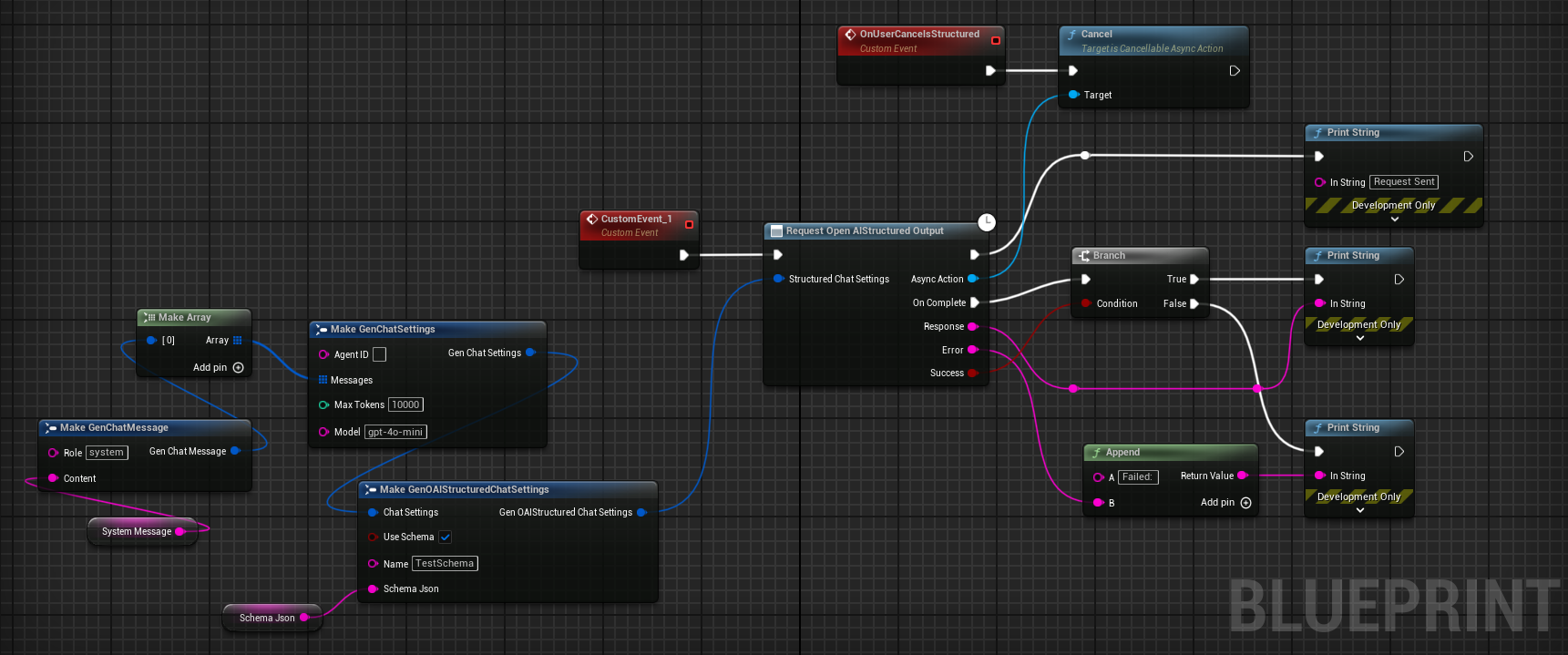
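Under the hood, a chat request like the C++ example above ends up as an HTTP POST to OpenAI's Chat Completions endpoint, whose JSON body carries model, max_tokens and a messages array of role/content pairs. The standalone sketch below builds such a body by hand to make that mapping visible. It assumes nothing about how UGenOAIChat actually serializes FGenChatSettings; ChatMessage, JsonEscape and BuildChatRequestBody are hypothetical names. Note that OpenAI's reasoning models (the o1/o3 family) expect max_completion_tokens instead of max_tokens.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a chat message: a role plus its content.
struct ChatMessage {
    std::string role;
    std::string content;
};

// Minimal JSON string escaping for quotes, backslashes and common control chars.
std::string JsonEscape(const std::string& s) {
    std::string out;
    for (char c : s) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n"; break;
            case '\r': out += "\\r"; break;
            case '\t': out += "\\t"; break;
            default:   out += c; break;
        }
    }
    return out;
}

// Builds a Chat Completions request body with model, max_tokens and messages.
std::string BuildChatRequestBody(const std::string& model, int maxTokens,
                                 const std::vector<ChatMessage>& messages) {
    std::string body = "{\"model\":\"" + JsonEscape(model) + "\",";
    body += "\"max_tokens\":" + std::to_string(maxTokens) + ",";
    body += "\"messages\":[";
    for (std::size_t i = 0; i < messages.size(); ++i) {
        if (i > 0) body += ",";
        body += "{\"role\":\"" + JsonEscape(messages[i].role) +
                "\",\"content\":\"" + JsonEscape(messages[i].content) + "\"}";
    }
    body += "]}";
    return body;
}
```

A real project would normally use a JSON library (for Unreal, its Json module) rather than string concatenation; the hand-rolled version just keeps the sketch dependency-free.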

2. Structured Outputs:

C++ Example 1:

Sending a custom schema JSON directly to the function call:

```cpp
// With OpenAI's strict Structured Outputs, every key in "required" must be
// declared under "properties", and objects must set "additionalProperties": false.
FString MySchemaJson = R"({
    "type": "object",
    "properties": {
        "count": {
            "type": "integer",
            "description": "The total number of users."
        },
        "users": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "name": { "type": "string", "description": "The user's name." },
                    "heading_to": { "type": "string", "description": "The user's destination." }
                },
                "required": ["name", "heading_to"],
                "additionalProperties": false
            }
        }
    },
    "required": ["count", "users"],
    "additionalProperties": false
})";

UGenAISchemaService::RequestStructuredOutput(
    TEXT("Generate a list of users and their details"),
    MySchemaJson,
    [](const FString& Response, const FString& Error, bool Success) {
        if (Success)
        {
            UE_LOG(LogTemp, Log, TEXT("Structured Output: %s"), *Response);
        }
        else
        {
            UE_LOG(LogTemp, Error, TEXT("Error: %s"), *Error);
        }
    }
);
```
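A common mistake in hand-written schemas is listing a field under "required" that is not declared under "properties" (for example "role" or "age" in a user object that only defines "name" and "heading_to"); OpenAI's strict Structured Outputs mode rejects such schemas. The hypothetical helper below sketches a pre-flight check over one object schema. It works on plain name lists, so in practice the names would first be extracted from the parsed schema.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical pre-flight check: returns every name listed in "required"
// that has no matching entry in "properties", in the order it was listed.
// An empty result means the object schema is internally consistent.
std::vector<std::string> MissingRequiredProperties(
    const std::set<std::string>& properties,
    const std::vector<std::string>& required) {
    std::vector<std::string> missing;
    for (const std::string& name : required) {
        if (properties.count(name) == 0) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

Running the check on each nested object (here, both the top-level object and the users items) catches the mismatch before any request is sent.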

C++ Example 2:

Sending a custom schema JSON from a file:

```cpp
#include "Misc/FileHelper.h"
#include "Misc/Paths.h"

// Path to the schema file; replace :ProjectName with your project's name.
FString SchemaFilePath = FPaths::Combine(
    FPaths::ProjectDir(),
    TEXT("Source/:ProjectName/Public/AIPrompts/SomeSchema.json")
);

FString MySchemaJson;
if (FFileHelper::LoadFileToString(MySchemaJson, *SchemaFilePath))
{
    UGenAISchemaService::RequestStructuredOutput(
        TEXT("Generate a list of users and their details"),
        MySchemaJson,
        [](const FString& Response, const FString& Error, bool Success) {
            if (Success)
            {
                UE_LOG(LogTemp, Log, TEXT("Structured Output: %s"), *Response);
            }
            else
            {
                UE_LOG(LogTemp, Error, TEXT("Error: %s"), *Error);
            }
        }
    );
}
else
{
    // Report a missing or unreadable schema file instead of failing silently.
    UE_LOG(LogTemp, Error, TEXT("Failed to load schema file: %s"), *SchemaFilePath);
}
```
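For reference, OpenAI's Structured Outputs API expects the schema inside a response_format object of type json_schema, together with a name and an optional strict flag. Whether UGenAISchemaService wraps MySchemaJson exactly this way is not documented here, so treat the hypothetical BuildResponseFormat helper below as a sketch of the request shape only.

```cpp
#include <string>

// Hypothetical helper: wraps a raw JSON Schema in the "response_format"
// object used by OpenAI Structured Outputs.
// schemaName should contain only letters, digits, underscores and dashes;
// schemaJson is inserted verbatim, so it must already be valid JSON.
std::string BuildResponseFormat(const std::string& schemaName, const std::string& schemaJson) {
    return "{\"type\":\"json_schema\",\"json_schema\":{\"name\":\"" + schemaName +
           "\",\"strict\":true,\"schema\":" + schemaJson + "}}";
}
```

The schema is embedded as a JSON value, not as a quoted string, which is why it is concatenated without escaping.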

Blueprint Example:

DeepSeek API:

Currently the plugin supports Chat and Reasoning from the DeepSeek API, for both C++ and Blueprints. Points to note:

- System messages are currently mandatory for the reasoning model; otherwise the API seems to return null.

- Also, from the documentation: "Please note that if the reasoning_content field is included in the sequence of input messages, the API will return a 400 error." Read more about it here.

Warning

When using the R1 reasoning model, make sure Unreal's HTTP timeouts are not left at their 30-second defaults, as these API calls can take longer than 30 seconds to respond. Calling HttpRequest->SetTimeout(<N Seconds>); alone is not enough, so add the following lines to your project's DefaultEngine.ini file:

```ini
[HTTP]
HttpConnectionTimeout=180
HttpReceiveTimeout=180
```
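Both points to note above affect how a multi-turn conversation is sent back to the reasoner. The sketch below prepares the next request's history accordingly: it drops each turn's reasoning text (the API's reasoning_content field) and prepends a default system message when the history has none. DeepSeekTurn, OutgoingMessage and PrepareDeepSeekHistory are hypothetical, standalone types; they do not mirror the plugin's FGenDSeekChatSettings.

```cpp
#include <string>
#include <vector>

// Hypothetical shape of one stored turn: the visible answer plus the
// separate chain-of-thought text returned by the reasoning model.
struct DeepSeekTurn {
    std::string role;
    std::string content;
    std::string reasoningContent;  // must never be sent back to the API
};

// Outgoing message: only role and content are kept.
struct OutgoingMessage {
    std::string role;
    std::string content;
};

// Prepares history for the next request:
// 1. drops reasoning text, which would trigger a 400 error if resent;
// 2. prepends a default system message when the history does not start with one.
std::vector<OutgoingMessage> PrepareDeepSeekHistory(
    const std::vector<DeepSeekTurn>& history,
    const std::string& defaultSystemPrompt) {
    std::vector<OutgoingMessage> out;
    bool hasSystem = !history.empty() && history.front().role == "system";
    if (!hasSystem) {
        out.push_back({"system", defaultSystemPrompt});
    }
    for (const DeepSeekTurn& turn : history) {
        out.push_back({turn.role, turn.content});
    }
    return out;
}
```

Keeping the reasoning text in a separate field rather than deleting it lets a game still log or display the model's chain of thought locally.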

1. Chat and Reasoning:

C++ Example:

```cpp
FGenDSeekChatSettings ReasoningSettings;
ReasoningSettings.Model = EDeepSeekModels::Reasoner; // or EDeepSeekModels::Chat for Chat API
ReasoningSettings.MaxTokens = 100;
ReasoningSettings.Messages.Add(FGenChatMessage{TEXT("system"), TEXT("You are a helpful assistant.")});
ReasoningSettings.Messages.Add(FGenChatMessage{TEXT("user"), TEXT("9.11 and 9.8, which is greater?")});
ReasoningSettings.bStreamResponse = false;

UGenDSeekChat::SendChatRequest(
    ReasoningSettings,
    FOnDSeekChatCompletionResponse::CreateLambda(
        [this](const FString& Response, const FString& ErrorMessage, bool bSuccess)
        {
            if (!UTHelper::IsContextStillValid(this))
            {
                return;
            }

            // Log response details regardless of success
            UE_LOG(LogTemp, Warning, TEXT("DeepSeek Reasoning Response Received - Success: %d"), bSuccess);
            UE_LOG(LogTemp, Warning, TEXT("Response: %s"), *Response);
            if (!ErrorMessage.IsEmpty())
            {
                UE_LOG(LogTemp, Error, TEXT("Error Message: %s"), *ErrorMessage);
            }
        })
);
```

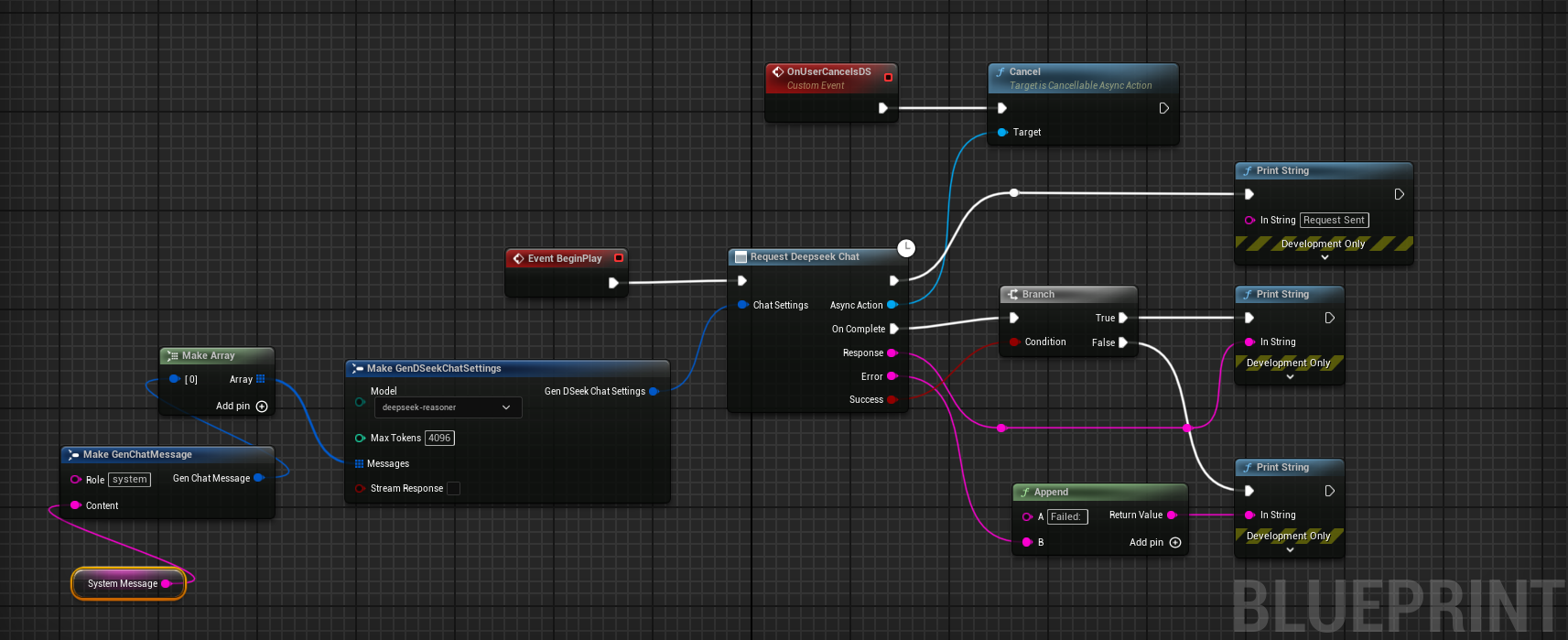

Blueprint Example:

Anthropic API:

Currently the plugin supports Chat from the Anthropic API, for both C++ and Blueprints. Tested models are claude-3-7-sonnet-latest, claude-3-5-sonnet, claude-3-5-haiku-latest and claude-3-opus-latest.

1. Chat:

C++ Example:

```cpp
// ---- Claude Chat Test ----
FGenClaudeChatSettings ChatSettings;
ChatSettings.Model = EClaudeModels::Claude_3_7_Sonnet; // Use Claude 3.7 Sonnet model
ChatSettings.MaxTokens = 4096;
ChatSettings.Temperature = 0.7f;
ChatSettings.Messages.Add(FGenChatMessage{TEXT("system"), TEXT("You are a helpful assistant.")});
ChatSettings.Messages.Add(FGenChatMessage{TEXT("user"), TEXT("What is the capital of France?")});

UGenClaudeChat::SendChatRequest(
    ChatSettings,
    FOnClaudeChatCompletionResponse::CreateLambda(
        [this](const FString& Response, const FString& ErrorMessage, bool bSuccess)
        {
            if (!UTHelper::IsContextStillValid(this))
            {
                return;
            }

            if (bSuccess)
            {
                UE_LOG(LogTemp, Warning, TEXT("Claude Chat Response: %s"), *Response);
            }
            else
            {
                UE_LOG(LogTemp, Error, TEXT("Claude Chat Error: %s"), *ErrorMessage);
            }
        })
);
```
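Anthropic's Messages API does not accept a "system" role inside the messages array: the system prompt is a separate top-level system parameter, and messages holds only user and assistant turns. The plugin presumably performs this split when it receives FGenChatMessage{TEXT("system"), ...}, but that is an assumption. The standalone sketch below shows the transformation with hypothetical types.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for a chat message: a role plus its content.
struct ChatMessage {
    std::string role;
    std::string content;
};

// The two parts of a Messages API request that this split produces.
struct ClaudeRequestParts {
    std::string system;                 // top-level "system" field
    std::vector<ChatMessage> messages;  // only "user" / "assistant" turns
};

// Joins all system messages (separated by a blank line) into the top-level
// system prompt and keeps every other turn in its original order.
ClaudeRequestParts SplitSystemMessages(const std::vector<ChatMessage>& input) {
    ClaudeRequestParts parts;
    for (const ChatMessage& m : input) {
        if (m.role == "system") {
            if (!parts.system.empty()) parts.system += "\n\n";
            parts.system += m.content;
        } else {
            parts.messages.push_back(m);
        }
    }
    return parts;
}
```

Joining multiple system messages is a design choice of this sketch; rejecting them would be an equally valid policy.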

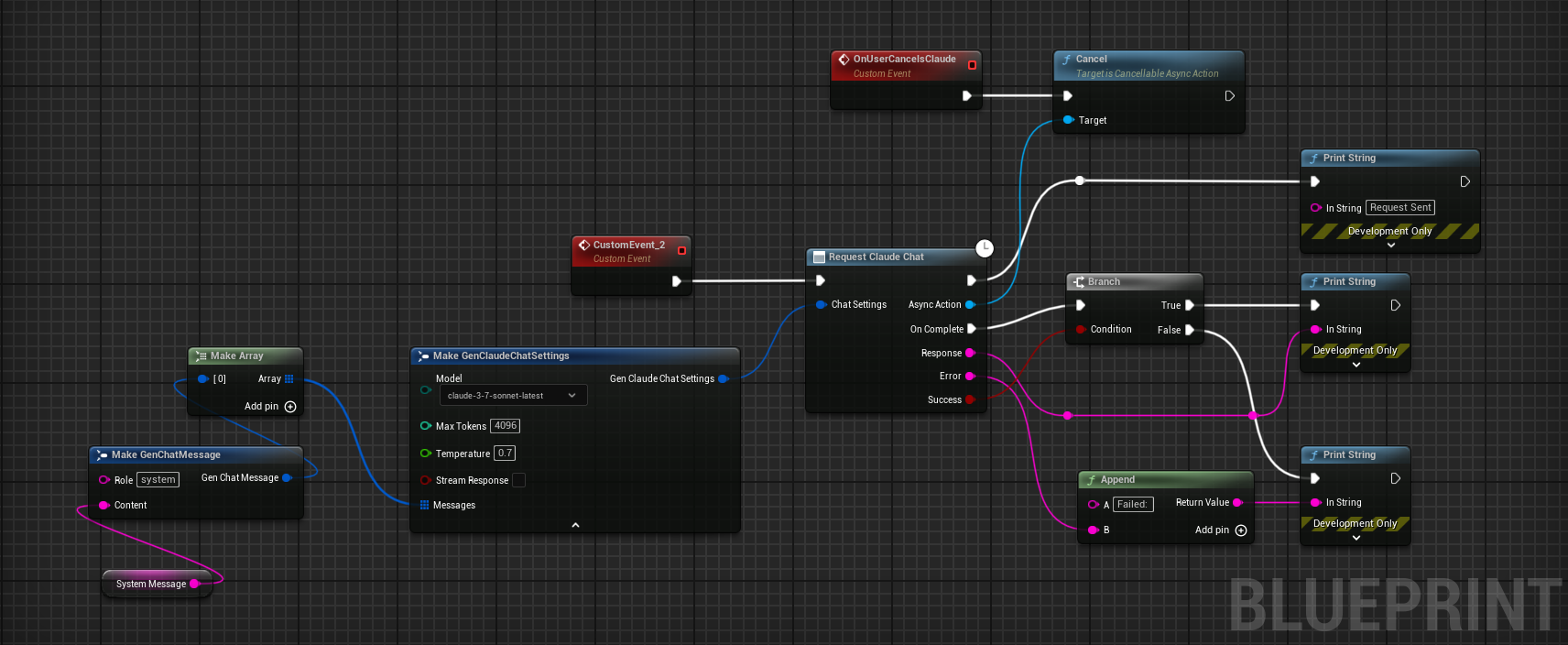

Blueprint Example:

Model Context Protocol (MCP):

This is currently a work in progress. The plugin supports clients such as the Claude Desktop App and Cursor.

Usage:

Running the MCP server:

1. Run the MCP server from the plugin's Python directory.

   ```shell
   python <your_project_directory>/Plugins/GenerativeAISupport/Content/Python/mcp_server.py
   ```

2. Run the MCP client by opening or restarting the Claude Desktop App or Cursor IDE.

3. Open your Unreal Engine project and run the Python script below from the plugin's Python directory:

   Tools -> Run Python Script -> select the Plugins/GenerativeAISupport/Content/Python/unreal_socket_server.py file.

4. Now you should be able to prompt the Claude Desktop App to use Unreal Engine.
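If the client cannot reach Unreal, a quick first check is whether anything is listening on the configured port (9877 in the configs above) once unreal_socket_server.py is running. The POSIX-only sketch below just attempts a blocking TCP connection, which is fine for localhost. It does not speak the plugin's command protocol, which is not documented here, and IsUnrealSocketServerListening is a hypothetical name. On Windows the same idea needs Winsock (WSAStartup, closesocket).

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cstdint>
#include <string>

// Returns true if something accepts a TCP connection at host:port.
// host must be a dotted IPv4 literal such as "127.0.0.1" (no DNS lookup).
bool IsUnrealSocketServerListening(const std::string& host, int port) {
    if (port <= 0 || port > 65535) {
        return false;  // outside the valid TCP port range
    }
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(static_cast<std::uint16_t>(port));
    if (inet_pton(AF_INET, host.c_str(), &addr.sin_addr) != 1) {
        return false;  // not a valid IPv4 literal
    }
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) {
        return false;
    }
    bool connected = connect(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) == 0;
    close(fd);
    return connected;
}
```

A true result only proves the port is open; if prompts still fail, the next places to look are the Unreal output log and the MCP client's server logs.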

Known Issues:

- Nodes fail to connect properly with MCP
- No undo/redo support for MCP
- No streaming support for the Deepseek reasoning model
- No complex material generation support for the create material tool
- Issues running some valid LLM-generated Python scripts
- No proper error handling in the response when the LLM compiles a blueprint
- Issues spawning certain nodes, especially getters and setters
- Doesn't open the right context window when editing scenes and project files
- Doesn't dock the window properly in the editor for blueprints

Contribution Guidelines:

Setting up for Development:

- Install the unreal Python package and set up your IDE's Python interpreter for proper IntelliSense:

  ```shell
  pip install unreal
  ```

More details will be added soon.

Project Structure:

More details will be added soon.

References:

- Env Var set logic from: OpenAI-Api-Unreal by KellanM
- MCP Server inspiration from: Blender-MCP by ahujasid
