All MCP Servers

Complete list of MCP server implementations, sorted by stars.

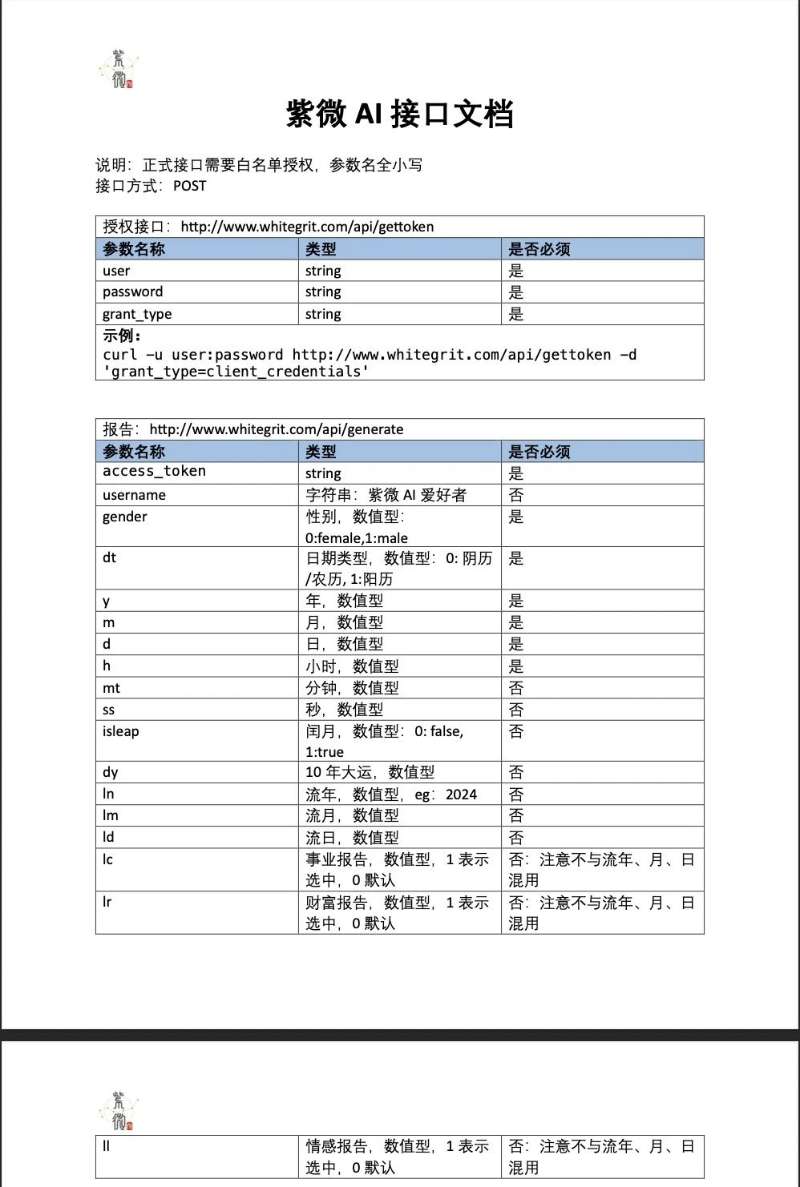

Ziwei AI is an application that draws on Ziwei Dou Shu, a traditional Chinese system of astrology, to help users understand themselves and live more efficiently. It combines traditional cultural knowledge with modern AI, offering chart generation, chart analysis, and interactive reports. It aims to be a professional yet accessible tool for exploring personality traits, natural talents, and life trends through Ziwei Dou Shu charts.

This repository hosts configurations and scripts for several MCP (Model Context Protocol) servers, making external tools available to language models such as Claude running in Cursor. It includes setups for Firecrawl, Browser Tools, Supabase, Git, and more.
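Cursor reads MCP server definitions from an `mcpServers` object in its `mcp.json` file, where each entry names a command to launch, its arguments, and optional environment variables. The Python sketch below renders such a block. The two entries (`firecrawl-mcp` via `npx`, `mcp-server-git` via `uvx`) are illustrative assumptions and may not match this repository's own scripts.

```python
import json

def make_cursor_config(servers: dict) -> str:
    """Render an `mcpServers` block in the shape Cursor reads from mcp.json."""
    config = {"mcpServers": {}}
    for name, spec in servers.items():
        entry = {"command": spec["command"], "args": list(spec.get("args", []))}
        if spec.get("env"):
            # Secrets such as API keys reach the server process through env vars.
            entry["env"] = dict(spec["env"])
        config["mcpServers"][name] = entry
    return json.dumps(config, indent=2)

# Illustrative entries only; package names and the key value are placeholders.
print(make_cursor_config({
    "firecrawl": {
        "command": "npx",
        "args": ["-y", "firecrawl-mcp"],
        "env": {"FIRECRAWL_API_KEY": "<your-key>"},
    },
    "git": {"command": "uvx", "args": ["mcp-server-git", "--repository", "."]},
}))
```

The printed JSON can be pasted into `mcp.json`; keeping real keys out of version control is the main reason to generate the file rather than commit it.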

This project is an MCP server for Unsplash image search. Built on the mark3labs/mcp-go library, it offers a streamlined interface for searching and retrieving Unsplash images and can be integrated into applications such as Cursor to add image search to your projects.
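Under the hood, a tool like this wraps Unsplash's REST search endpoint. The project itself is written in Go; the Python sketch below only shows the HTTP request such a server would issue, assuming the public `GET /search/photos` endpoint with `query`, `page`, and `per_page` parameters and `Client-ID` authorization. It builds the request without sending it, so no access key is needed to try it.

```python
from urllib.parse import urlencode
from urllib.request import Request

UNSPLASH_API = "https://api.unsplash.com"

def build_search_request(query: str, access_key: str, page: int = 1,
                         per_page: int = 10) -> Request:
    """Build (but do not send) a GET request for Unsplash photo search."""
    params = urlencode({"query": query, "page": page, "per_page": per_page})
    return Request(
        f"{UNSPLASH_API}/search/photos?{params}",
        headers={
            "Authorization": f"Client-ID {access_key}",  # public access key
            "Accept-Version": "v1",
        },
    )

req = build_search_request("mountain lake", "<access-key>", per_page=5)
print(req.full_url)
```

Passing the request to `urllib.request.urlopen` with a real access key would return JSON whose `results` array holds the matching photos.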

This project combines the Community n8n MCP Client with the Coolify MCP Server so that Coolify infrastructure can be managed through the Model Context Protocol (MCP). It provides tools for managing teams, servers, services, applications, and deployments, letting AI agents automate infrastructure management. The Coolify MCP Server acts as the bridge between Coolify and n8n, handling the API communication that scalable automation depends on.

This MCP server lets clients interact with Unreal Engine instances through remote Python execution. It manages Unreal instances, discovers Unreal nodes automatically, monitors their status in real time, and keeps detailed logs. Python code can be executed in either attended or unattended mode.
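For context on what remote Python execution involves: Unreal Editor's Python plugin ships a remote-execution protocol in which clients discover editor nodes over UDP multicast and then send JSON `command` messages. The sketch below builds such a message. The field names, the `ue_py` magic string, and the three execution modes are based on Unreal's `remote_execution.py` module and should be verified against your engine version; whether this MCP server uses that protocol directly is an assumption.

```python
import json
import uuid

# Constants based on Unreal's remote_execution.py; verify for your engine version.
PROTOCOL_MAGIC = "ue_py"
PROTOCOL_VERSION = 1
EXEC_MODES = {"ExecuteFile", "ExecuteStatement", "EvaluateStatement"}

def command_message(source_id: str, dest_id: str, command: str,
                    exec_mode: str = "ExecuteFile", unattended: bool = True) -> str:
    """Serialize a 'command' message asking a remote Unreal node to run Python."""
    if exec_mode not in EXEC_MODES:
        raise ValueError(f"unknown exec_mode: {exec_mode}")
    return json.dumps({
        "version": PROTOCOL_VERSION,
        "magic": PROTOCOL_MAGIC,
        "type": "command",
        "source": source_id,   # this client's node id
        "dest": dest_id,       # id of the Unreal node found during discovery
        "data": {
            "command": command,
            # Assumed semantics: unattended=True suppresses interactive editor UI.
            "unattended": unattended,
            "exec_mode": exec_mode,
        },
    })

client_id = str(uuid.uuid4())
print(command_message(client_id, "<remote-node-id>", "print('hello from Unreal')"))
```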

The MCP Servers and Clients Hub is a central repository for discovering and contributing Model Context Protocol (MCP) servers and clients. It lists MCP implementations, tools, and integrations across domains such as AI services, system automation, and search, and aims to speed up MCP development and adoption by giving developers and users a shared place to collaborate.

The Deep Research MCP Server is an agent-based MCP server for complex research tasks. Its capabilities include web search, PDF and document analysis, image analysis, YouTube transcript retrieval, and archive-site search. It is built on HuggingFace's smolagents and requires Python 3.11 or later, plus API keys for OpenAI, HuggingFace, and SerpAPI.
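Because the server depends on Python 3.11+ and three third-party API keys, a startup check that reports every missing prerequisite at once saves a round of trial and error. The environment-variable names below (`OPENAI_API_KEY`, `HF_TOKEN`, `SERPAPI_API_KEY`) are assumptions; use whatever names the project's configuration actually reads.

```python
import os
import sys

# Assumed variable names; match them to the project's actual configuration.
REQUIRED_KEYS = ("OPENAI_API_KEY", "HF_TOKEN", "SERPAPI_API_KEY")

def missing_prerequisites(env=None, version=None) -> list:
    """List every problem that would stop the server from starting."""
    env = os.environ if env is None else env
    version = tuple(sys.version_info[:2]) if version is None else tuple(version)
    problems = []
    if version < (3, 11):
        problems.append(f"Python 3.11+ required, found {version[0]}.{version[1]}")
    problems += [f"missing environment variable {key}" for key in REQUIRED_KEYS
                 if not env.get(key)]
    return problems

for problem in missing_prerequisites():
    print(problem, file=sys.stderr)
```

A server entry point could call `missing_prerequisites()` and exit with the joined messages whenever the list is non-empty.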

The MCP Figma to React Converter is an MCP server that turns Figma designs into React components. It fetches designs through the Figma API, extracts their components, and generates TypeScript React code styled with Tailwind CSS, either component by component or as a complete component library. It also offers tools for adding accessibility features to the generated components and supports both the stdio and SSE transports for flexible deployment.
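The core of such a conversion is a walk over the Figma node tree that emits JSX. The sketch below handles only a tiny subset of Figma's REST node fields (`type`, `name`, `characters`, `layoutMode`, `itemSpacing`, `children`) and maps auto-layout to Tailwind flex classes; the mapping and the generated component shape are illustrative assumptions, not this converter's actual output.

```python
import json

def to_pascal_case(name: str) -> str:
    """'login card' or 'login-card' -> 'LoginCard' (a valid component name)."""
    return "".join(part.capitalize() for part in name.replace("-", " ").split())

def tailwind_classes(node: dict) -> str:
    """Translate Figma auto-layout settings into Tailwind utility classes."""
    classes = []
    if node.get("layoutMode") == "HORIZONTAL":
        classes.append("flex flex-row")
    elif node.get("layoutMode") == "VERTICAL":
        classes.append("flex flex-col")
    if "itemSpacing" in node:
        classes.append(f"gap-[{node['itemSpacing']}px]")  # arbitrary-value syntax
    return " ".join(classes)

def render_jsx(node: dict, indent: int) -> str:
    pad = " " * indent
    if node.get("type") == "TEXT":
        # Emit text as a JS string expression so characters like { or < stay safe.
        return f"{pad}<span>{{{json.dumps(node['characters'])}}}</span>"
    cls = tailwind_classes(node)
    attr = f' className="{cls}"' if cls else ""
    children = [render_jsx(child, indent + 2) for child in node.get("children", [])]
    if not children:
        return f"{pad}<div{attr} />"
    return f"{pad}<div{attr}>\n" + "\n".join(children) + f"\n{pad}</div>"

def component_from_node(node: dict) -> str:
    """Wrap the rendered tree in a TypeScript function component."""
    name = to_pascal_case(node["name"])
    return f"export function {name}() {{\n  return (\n{render_jsx(node, 4)}\n  );\n}}\n"

card = {"name": "login card", "type": "FRAME", "layoutMode": "VERTICAL", "itemSpacing": 8,
        "children": [{"type": "TEXT", "characters": "Sign in"}]}
print(component_from_node(card))
```

The `gap-[8px]` arbitrary value preserves Figma's exact spacing; a production converter would more likely snap values to the design system's spacing scale.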

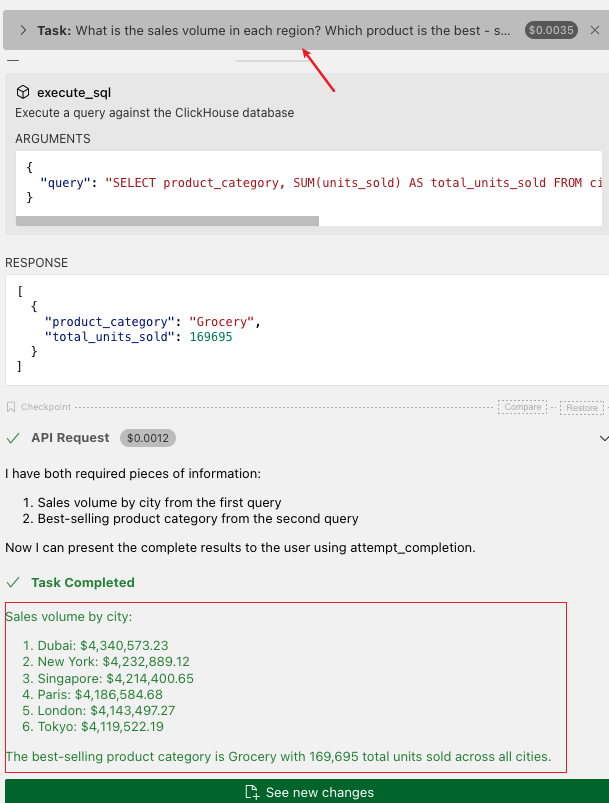

The ClickHouse MCP Server gives AI assistants a secure, structured way to explore and analyze ClickHouse databases. Through a controlled interface, they can list tables, read data, and run SQL queries without unrestricted database access. The server is configured through environment variables or command-line arguments and works with tools such as VS Code and Cline.
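One way a controlled interface can work is a conservative allow-list that accepts only a single read-only statement. The sketch below is an illustrative policy, not this server's actual implementation. Parsing alone is never a complete safeguard, so in practice it should sit on top of a ClickHouse user restricted with the `readonly` setting.

```python
import re

# Statement types treated as read-only by this sketch (illustrative policy).
READ_ONLY_KEYWORDS = {"SELECT", "WITH", "SHOW", "DESCRIBE", "DESC", "EXISTS", "EXPLAIN"}

def is_read_only(sql: str) -> bool:
    """Accept exactly one statement whose leading keyword is on the allow-list."""
    statement = sql.strip().rstrip(";").strip()
    # Reject empty input and anything still containing ';' (a possible second
    # statement). This also rejects ';' inside string literals, which errs safe.
    if not statement or ";" in statement:
        return False
    match = re.match(r"[A-Za-z]+", statement)
    # Leading comments or parentheses fail to match and are rejected outright.
    return bool(match) and match.group(0).upper() in READ_ONLY_KEYWORDS
```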

Axiom is an AI agent that specializes in modern AI frameworks, libraries, and tools. It helps users build AI agents, RAG systems, chatbots, and full-stack applications from natural-language instructions. Built with LangGraph, MCP Docs, Chainlit, and Gemini models, it offers an interactive chat interface, access to multiple documentation sources, and configurable model settings, and it can be deployed in Docker containers.

This MCP server enables reliable interaction between language models (LLMs and SLMs) and Apache Kafka, along with ecosystem tools such as Kafka Connect, Burrow, and Cruise Control. It supports the core Kafka APIs except Kafka Streams and integrates with the Burrow and Cruise Control REST APIs. With it, language models can consume and produce messages and describe clusters, topics, and consumer groups.
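Describing a consumer group usually comes down to consumer lag: for each partition, how far the group's committed offset trails the partition's end offset. Burrow, one of the supported tools, is built around monitoring this metric. The sketch below computes it from offsets the caller has already fetched; the `PartitionOffsets` type is a hypothetical container, not part of any Kafka client library.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PartitionOffsets:
    """Hypothetical container for offsets fetched from a Kafka admin client."""
    topic: str
    partition: int
    end_offset: int        # offset of the next message to be written (e.g. high watermark)
    committed_offset: int  # offset of the next message the group will consume

def consumer_lag(partitions: list) -> dict:
    """Messages produced but not yet consumed, keyed by (topic, partition)."""
    # Clamp at 0: the two offsets are often fetched at slightly different moments.
    return {(p.topic, p.partition): max(p.end_offset - p.committed_offset, 0)
            for p in partitions}

def total_lag(partitions: list) -> int:
    return sum(consumer_lag(partitions).values())

orders = [PartitionOffsets("orders", 0, 120, 100), PartitionOffsets("orders", 1, 50, 50)]
print(consumer_lag(orders), total_lag(orders))
```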

This project is an Elixir implementation of a Model Context Protocol (MCP) server that lets AI models interact securely with local or remote resources. It uses Server-Sent Events (SSE) as its transport and includes tools for listing files, echoing messages, and retrieving weather data. Built on Bandit and Plug, it offers a lightweight, efficient foundation for MCP-compliant applications.
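An SSE transport streams text frames over a long-lived HTTP response: each frame is a set of `field: value` lines ended by a blank line. In MCP's HTTP+SSE transport, the server first sends an `endpoint` event telling the client where to POST its requests, then delivers JSON-RPC messages as `message` events. The project is written in Elixir; the framing logic is sketched in Python below, and the `sessionId` query string is a hypothetical example.

```python
import json

def sse_event(data, event=None, event_id=None) -> str:
    """Frame one Server-Sent Events message, terminated by a blank line."""
    lines = []
    if event:
        lines.append(f"event: {event}")
    if event_id is not None:
        lines.append(f"id: {event_id}")
    payload = data if isinstance(data, str) else json.dumps(data)
    # A payload containing newlines needs one "data:" line per line of text.
    lines += [f"data: {line}" for line in payload.split("\n")]
    return "\n".join(lines) + "\n\n"

# First frame: where the client should POST its JSON-RPC requests (hypothetical URL).
print(sse_event("/message?sessionId=abc123", event="endpoint"), end="")
# Later frames: JSON-RPC responses, e.g. the result of an echo tool call.
print(sse_event({"jsonrpc": "2.0", "id": 1,
                 "result": {"content": [{"type": "text", "text": "hello"}]}},
                event="message"), end="")
```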

The Swagger MCP Server parses Swagger/OpenAPI documents in both the v2 and v3 formats. It generates TypeScript type definitions and API client code for HTTP clients and libraries such as Axios, Fetch, and React Query. Because it implements the Model Context Protocol (MCP), large language models can use it directly as a tool. It also handles large API documents efficiently through caching, lazy loading, and incremental parsing.
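At its simplest, type generation maps each schema in `definitions` (Swagger 2.0) or `components/schemas` (OpenAPI 3) to a TypeScript interface, marking properties outside the `required` list as optional. The sketch below covers only primitives, string enums, arrays, and `$ref`s; it illustrates the technique and is not this server's generator.

```python
import json

def ts_type(schema: dict) -> str:
    """Map a simplified OpenAPI schema fragment to a TypeScript type expression."""
    if "$ref" in schema:
        # "#/definitions/Tag" (v2) or "#/components/schemas/Tag" (v3) -> "Tag"
        return schema["$ref"].rsplit("/", 1)[-1]
    kind = schema.get("type")
    if kind == "string" and "enum" in schema:
        return " | ".join(json.dumps(value) for value in schema["enum"])
    if kind in ("integer", "number"):
        return "number"
    if kind in ("string", "boolean"):
        return kind
    if kind == "array":
        # Array<T> avoids precedence problems when T is a union type.
        return f"Array<{ts_type(schema.get('items', {}))}>"
    return "unknown"  # objects, oneOf/allOf, etc. are out of scope for this sketch

def ts_interface(name: str, schema: dict) -> str:
    """Emit an interface; properties missing from `required` become optional."""
    required = set(schema.get("required", []))
    lines = [f"export interface {name} {{"]
    for prop, sub in schema.get("properties", {}).items():
        key = prop if prop.isidentifier() else json.dumps(prop)
        optional = "" if prop in required else "?"
        lines.append(f"  {key}{optional}: {ts_type(sub)};")
    lines.append("}")
    return "\n".join(lines)
```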

The Docbase MCP Server is a Model Context Protocol (MCP) server for Docbase that lets users search, retrieve, and create posts programmatically. It includes a command-line interface for building and running the server and can be configured with a custom API domain and token. It makes it easier to bring Docbase into workflows that need automated post management.
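The post operations map onto ordinary REST calls. The sketch below builds (without sending) search and create requests, assuming Docbase's public API layout: a base URL of `https://api.docbase.io`, team-scoped paths under `/teams/{domain}/posts`, and a token in the `X-DocBaseToken` header. Treat these, and the payload field names, as assumptions to check against Docbase's API documentation; the hypothetical `api_base` parameter stands in for the server's custom-domain option.

```python
import json
from typing import Optional
from urllib.parse import quote, urlencode
from urllib.request import Request

def docbase_request(domain: str, token: str, path: str, method: str = "GET",
                    params: Optional[dict] = None, body: Optional[dict] = None,
                    api_base: str = "https://api.docbase.io") -> Request:
    """Build a team-scoped Docbase API request (endpoint layout is assumed)."""
    url = f"{api_base}/teams/{quote(domain)}{path}"
    if params:
        url += "?" + urlencode(params)
    headers = {"X-DocBaseToken": token}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return Request(url, data=data, headers=headers, method=method)

def search_posts(domain: str, token: str, query: str, page: int = 1) -> Request:
    return docbase_request(domain, token, "/posts", params={"q": query, "page": page})

def create_post(domain: str, token: str, title: str, body_md: str, tags: list) -> Request:
    # Assumed payload fields for a new post: title, Markdown body, and tags.
    payload = {"title": title, "body": body_md, "tags": tags}
    return docbase_request(domain, token, "/posts", method="POST", body=payload)
```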

The Chain of Thought MCP Server calls LLMs through Groq's API and exposes the raw chain-of-thought tokens produced by Qwen's QwQ model. It aims to improve AI performance by giving agents an explicit step for structured reasoning and verification, which is especially useful in complex tool-use scenarios. Once added to an MCP configuration, it lets agents refine their responses iteratively and make better decisions.
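When a reasoning model's raw output is requested, the chain of thought typically arrives inline, wrapped in `<think>...</think>` tags ahead of the final answer. Assuming that format (check what your Groq request actually returns), the sketch below separates the reasoning from the answer so a client can log or verify one and act on the other.

```python
import re

# Assumes reasoning is wrapped in <think>...</think> tags inside the raw output.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(text: str) -> tuple:
    """Return (reasoning, answer) from a raw model completion."""
    reasoning = "\n".join(block.strip() for block in THINK_RE.findall(text))
    answer = THINK_RE.sub("", text).strip()
    return reasoning, answer

reasoning, answer = split_reasoning("<think>\nCheck the units first.\n</think>\nThe answer is 42.")
print(answer)
```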

This project provides a lightweight MCP server for PostgreSQL that runs without a Node.js or Python environment. It supports CRUD operations, schema management, and automation tools, and includes a read-only mode, query-plan checking, and Server-Sent Events (SSE) support, making it a versatile option for database integration and automation.
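Query-plan checking can be built on PostgreSQL's `EXPLAIN (FORMAT JSON)`, which returns the planner's tree with fields such as `Node Type`, `Relation Name`, `Total Cost`, and nested `Plans`. The sketch below flags sequential scans and plans whose estimated cost exceeds a threshold; both heuristics and the default threshold are illustrative choices, not this server's rules. Because `EXPLAIN` without `ANALYZE` only plans the query, such a check does not execute the statement it inspects.

```python
import json

def walk(plan: dict):
    """Yield a plan node and all of its descendants (depth-first)."""
    yield plan
    for child in plan.get("Plans", []):
        yield from walk(child)

def plan_warnings(explain_json: str, max_cost: float = 10_000.0) -> list:
    """Inspect EXPLAIN (FORMAT JSON) output and return human-readable warnings."""
    root = json.loads(explain_json)[0]["Plan"]  # output is a one-element JSON array
    warnings = []
    if root["Total Cost"] > max_cost:
        warnings.append(f"estimated total cost {root['Total Cost']} exceeds {max_cost}")
    for node in walk(root):
        if node["Node Type"] == "Seq Scan":
            warnings.append(f"sequential scan on {node.get('Relation Name', '?')}")
    return warnings

# Illustrative, abbreviated plan; real output carries many more fields per node.
sample = json.dumps([{"Plan": {"Node Type": "Nested Loop", "Total Cost": 25000.5, "Plans": [
    {"Node Type": "Seq Scan", "Relation Name": "orders", "Total Cost": 20000.0},
    {"Node Type": "Index Scan", "Relation Name": "customers", "Total Cost": 8.3},
]}}])
print(plan_warnings(sample))
```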