Wan2.1

by Alibaba Cloud

Wan2.1: Alibaba's Open-Source AI Video Generation Model

What is Wan2.1?

Wan2.1 is an open-source AI video generation model developed by Alibaba Cloud. It supports text-to-video and image-to-video tasks and comes in two sizes: a 14B-parameter professional version that excels at complex motion generation and physical modeling, and a 1.3B-parameter speed version that runs on consumer-grade GPUs with low VRAM requirements, which makes it suitable for secondary development and academic research.

Wan2.1 is built on a causal 3D VAE and a video Diffusion Transformer architecture, enabling efficient spatiotemporal compression and long-range dependency modeling. The 14B version scores 86.22% on the VBench evaluation, ranking first and ahead of models such as Sora, Luma, and Pika. The model is open-sourced under the Apache 2.0 license, supports multiple mainstream frameworks, and is available on GitHub, HuggingFace, and the ModelScope community for easy deployment.

Key Features of Wan2.1

- Text-to-Video: Generates video from input text descriptions, supports long prompts in Chinese and English, and accurately reproduces scene transitions and character interactions.

- Image-to-Video: Generates video based on images, enabling more controllable creation, suitable for extending static images into dynamic videos.

- Complex Motion Generation: Renders complex motions of characters or objects, such as rotation, jumping, and turning, in a stable way, and supports advanced camera control.

- Physical Law Simulation: Accurately reproduces real-world physical events such as collisions, rebounds, and cuts, generating videos that conform to physical laws.

- Multi-Style Generation: Supports various video styles and textures, adapting to different creative needs, while supporting different aspect ratios for video output.

- Text Effects Generation: Renders text inside generated videos, including Chinese text, and supports Chinese and English text effects that enhance the visual appeal of videos.

Technical Principles of Wan2.1

- Causal 3D VAE (Variational Autoencoder) Architecture: An architecture developed in-house for video generation. The encoder compresses the input video into a latent-space representation, and the decoder reconstructs the output from it. The 3D VAE handles spatial and temporal information together, and its causal constraint means each frame depends only on the current and earlier frames, which keeps the generated video temporally coherent.

- Video Diffusion Transformer Architecture: Combines the mainstream video diffusion approach with a Transformer backbone. The diffusion model generates data by gradually removing noise, while the Transformer captures long-range dependencies through self-attention.

- Model Training and Inference Optimization:

  - Training Phase: Uses a combination of DP (Data Parallel) and FSDP (Fully Sharded Data Parallel) distributed strategies to accelerate training of the text and video encoding modules. For the Diffusion module, a hybrid parallel strategy based on DP, FSDP, RingAttention, and Ulysses further improves training efficiency.

  - Inference Phase: Uses CP (Context Parallel) for distributed acceleration, reducing the latency of generating an individual video. For large models, model partitioning further improves inference efficiency.
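The causal constraint described above for the 3D VAE can be illustrated with a toy example. The sketch below is not Wan2.1's implementation: it is a minimal pure-Python 1D convolution along the time axis, and `causal_temporal_conv` is a hypothetical helper name. It pads only on the past side, so the output at frame `t` never depends on later frames.

```python
from typing import List


def causal_temporal_conv(frames: List[float], kernel: List[float]) -> List[float]:
    """Toy causal convolution over a 1D sequence of per-frame values.

    Hypothetical illustration only. The last kernel weight multiplies the
    current frame, earlier weights multiply earlier frames.
    """
    k = len(kernel)
    # Zero-pad on the left (the past) only; no padding on the future side.
    padded = [0.0] * (k - 1) + list(frames)
    out = []
    for t in range(len(frames)):
        window = padded[t : t + k]  # covers frames[t-k+1 .. t]
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out


# Changing a later frame leaves all earlier outputs untouched.
base = causal_temporal_conv([1.0, 2.0, 3.0, 4.0], [0.5, 0.5])
edited = causal_temporal_conv([1.0, 2.0, 3.0, 100.0], [0.5, 0.5])
print(base[:3] == edited[:3])  # True
```

A real causal 3D convolution applies the same one-sided padding on the time axis while using ordinary symmetric padding on height and width.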

Performance Advantages of Wan2.1

- Outstanding Generation Quality: The 14B-parameter professional version scores 86.22% on the VBench evaluation, ranking first and significantly outperforming models such as Sora, Luma, and Pika.

- Support for Consumer-Grade GPUs: The 1.3B-parameter speed version requires only 8.2GB of VRAM to generate 480P videos, which makes it compatible with almost all consumer-grade GPUs. On an RTX 4090, it generates a 5-second 480P video in about 4 minutes.

- Multi-Function Support: Supports tasks like text-to-video, image-to-video, video editing, text-to-image, and video-to-audio, along with visual effects and text rendering capabilities, meeting diverse creative needs.

- Efficient Data Processing and Architecture Optimization: Based on self-developed causal 3D VAE and optimized training strategies, it supports efficient encoding and decoding of videos of any length, significantly reducing inference memory usage and improving training and inference efficiency.
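To give a feel for what spatiotemporal compression means in numbers, here is a rough arithmetic sketch. Every figure in it is an assumption that this page does not state: a 4x temporal stride, an 8x spatial stride, a first frame that is encoded on its own, and an 81-frame 832x480 clip chosen purely for illustration. `latent_shape` is a hypothetical helper, not part of any Wan2.1 API.

```python
from typing import Tuple


def latent_shape(
    num_frames: int,
    height: int,
    width: int,
    t_stride: int = 4,  # assumed temporal compression factor
    s_stride: int = 8,  # assumed spatial compression factor
) -> Tuple[int, int, int]:
    """Return (latent_frames, latent_height, latent_width).

    Assumes the first frame is kept as its own latent frame and the
    remaining frames are grouped in blocks of t_stride.
    """
    if (num_frames - 1) % t_stride != 0:
        raise ValueError("num_frames must be 1 + a multiple of t_stride")
    if height % s_stride != 0 or width % s_stride != 0:
        raise ValueError("height and width must be multiples of s_stride")
    return (1 + (num_frames - 1) // t_stride, height // s_stride, width // s_stride)


# Illustrative clip size only; not a documented Wan2.1 setting.
print(latent_shape(81, 480, 832))  # (21, 60, 104)
```

Under these assumptions the diffusion model operates on far fewer spatiotemporal positions than the raw video contains, which is where much of the memory saving comes from.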

Project Links for Wan2.1

- Official Website: https://wanxai.com

- GitHub Repository: https://github.com/Wan-Video/Wan2.1

- HuggingFace Model Library: https://huggingface.co/Wan-AI

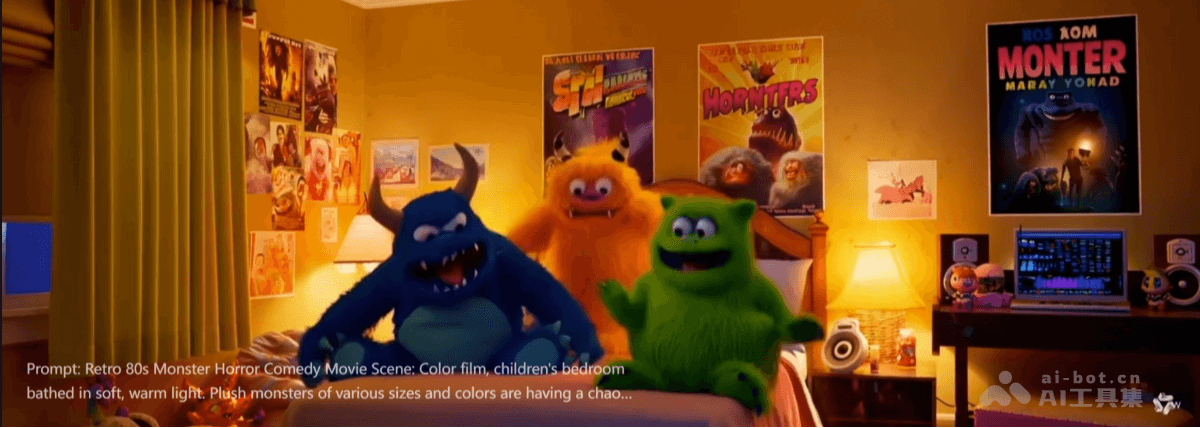

Showcase of Wan2.1

- Complex Motion: Excels in generating realistic videos with extensive body movements, complex rotations, dynamic scene transitions, and smooth camera movements.

- Physical Simulation: Generates videos that accurately simulate real-world physical laws and realistic object interactions.

- Cinema-Level Quality: Provides movie-like visual effects with rich textures and diverse stylistic effects.

- Controlled Editing: Features a general editing model that allows precise editing based on image or video references.

Application Scenarios of Wan2.1

- Film Production and Special Effects: Generates complex action scenes, special effects shots, or virtual character animations, reducing shooting costs and time.

- Advertising and Marketing: Quickly generates creative advertising videos, producing personalized video content based on product features or brand tone.

- Education and Training: Generates educational videos, such as science experiment demonstrations, historical scene recreations, or language learning videos, enhancing the learning experience.

- Game Development: Used to generate in-game animations, cutscenes, or virtual character movements, improving the game's visual effects and immersion.

- Personal Creation and Social Media: Helps creators quickly generate creative videos for social media sharing, Vlog production, or personal project presentations.

Usage & Integration

- GPU

- Python 3.8+
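The two requirements listed above can be checked before attempting an install. The following is a minimal sketch rather than an official setup script: it assumes PyTorch is the framework used to detect a CUDA GPU, which this page does not state, and `check_environment` is a hypothetical helper name.

```python
import sys
from typing import Dict


def check_environment(min_python=(3, 8)) -> Dict[str, bool]:
    """Report whether the interpreter and GPU stack look usable."""
    report = {
        "python_ok": sys.version_info >= min_python,
        "torch_installed": False,
        "cuda_available": False,
    }
    try:
        import torch  # assumption: PyTorch is the GPU framework in use
    except ImportError:
        return report
    report["torch_installed"] = True
    report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {ok}")
```

If `cuda_available` is false on a machine that has a GPU, the usual causes are a CPU-only PyTorch build or a driver mismatch.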
