LlamaCoder

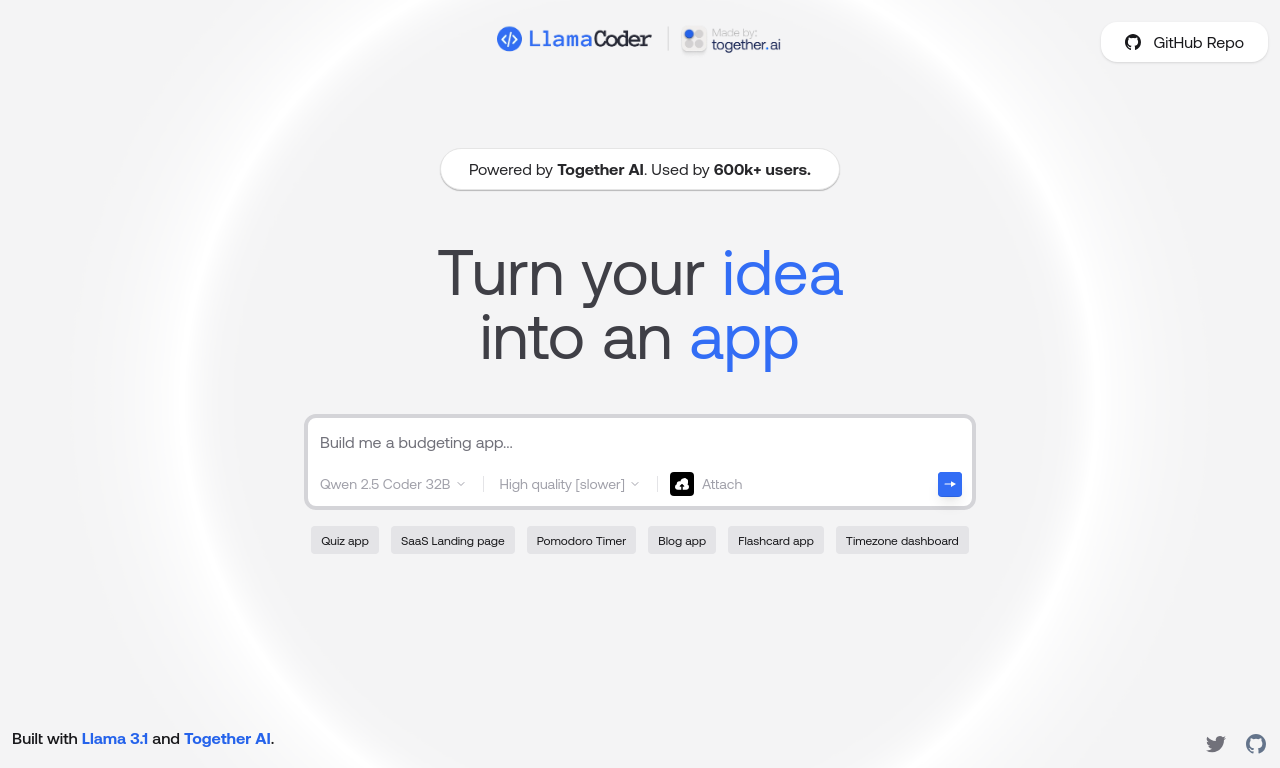

by Together AI

LlamaCoder is an open-source AI tool that uses the Llama 3.1 405B model to quickly generate full-stack applications, offering an alternative to Claude Artifacts.

LlamaCoder is an open-source AI tool that uses the Llama 3.1 405B model to generate full-stack applications from a text prompt, positioned as an alternative to Claude Artifacts. It integrates Sandpack for code sandboxing, Next.js for application routing, Tailwind for styling, and Helicone for observability. Users describe the component or app they want, such as a calculator, quiz app, game, or e-commerce product catalog, and LlamaCoder generates it. The project also lists data analysis and PDF analysis among its capabilities and provides a guide for local installation and use.

Main Features of LlamaCoder

- Code Generation: Generates code from natural-language prompts.

- Application Creation: Quickly creates full-stack applications based on user needs.

- Component Integration: Integrates Sandpack for code sandboxing, Next.js for application routing, Tailwind for styling, and Helicone for observability and analysis.

- Data-Driven: Supports data analysis and processing to help developers better understand and optimize applications.

- Model Support: Built on the Llama 3.1 405B model, which supplies the language understanding and code generation capabilities.
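
The prompt-to-code flow described above can be sketched in a few lines. This is a minimal illustration, not LlamaCoder's actual implementation: it assumes an OpenAI-style chat payload, and the model identifier, system prompt, and the helper names `build_request` and `extract_code` are all hypothetical. No network call is made; the sketch only shows how a request might be assembled and how generated code might be pulled out of a reply.

```python
import re

# Three backticks, built at runtime so this example contains no literal fence.
FENCE = "`" * 3

# Assumed values for illustration; check the provider's model list before use.
MODEL = "meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo"
SYSTEM_PROMPT = (
    "You are an expert frontend engineer. "
    "Reply with one React component in a single code block."
)


def build_request(user_prompt, model=MODEL):
    """Assemble a chat-style request body for a code generation prompt."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_prompt},
        ],
    }


def extract_code(reply):
    """Return the contents of the first fenced block, or the whole reply."""
    match = re.search(FENCE + r"[\w-]*\n(.*?)" + FENCE, reply, re.DOTALL)
    return match.group(1).strip() if match else reply.strip()
```

In a setup like this, the extracted code string would then be handed to a sandbox such as Sandpack for rendering, which keeps generated code isolated from the host application.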

Technical Principles of LlamaCoder

- Transformer Architecture: The Llama 3.1 model behind LlamaCoder is built on the Transformer architecture, a deep learning design widely used in natural language processing. Transformers process sequences through self-attention, which lets each token draw on every other token and so capture long-range dependencies in text.

- Multi-Layer Transformer Blocks: The model contains multiple Transformer blocks, each further processing and refining text information to enhance the model's understanding of text.

- Multi-Head Attention Mechanism: The model processes information in parallel across different representation subspaces, providing a more comprehensive understanding of text content.

- Feedforward Neural Network: Transformer blocks include feedforward neural networks that non-linearly transform the output of the attention mechanism, increasing the model's expressive power.

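- BPE Tokenization: Uses the Byte Pair Encoding (BPE) algorithm for tokenization. BPE repeatedly merges the most frequent adjacent symbol pairs into new tokens, so rare or unseen words can still be represented as sequences of subword units while the vocabulary stays at a fixed, manageable size.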
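
Two of the principles above, self-attention and BPE, are easy to show in miniature. The following is a toy sketch in plain Python, not code from Llama or LlamaCoder: `self_attention` computes scaled dot-product attention for a single head on small lists of floats, and `bpe_merge_step` performs one BPE merge on a tiny word-frequency table. The function names and data layout are assumptions made for illustration.

```python
import math
from collections import Counter


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head.

    Each argument is a list of vectors, one per token.
    """
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


def bpe_merge_step(words):
    """Merge the most frequent adjacent symbol pair across all words.

    `words` maps a tuple of symbols to its corpus frequency.
    Returns the merged pair and the rewritten table.
    """
    pair_counts = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pair_counts[pair] += freq
    best = max(pair_counts, key=pair_counts.get)
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return best, merged
```

A multi-head layer runs several such attention heads in parallel, each on its own learned projection of the input, and concatenates the results. A real tokenizer repeats the merge step many thousands of times to build its vocabulary.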

LlamaCoder Project Links

- Project Website: llamacoder.together.ai

- GitHub Repository: https://github.com/Nutlope/llamacoder

Application Scenarios of LlamaCoder

- Rapid Prototyping: Developers use LlamaCoder to quickly generate application prototypes, helping to test and validate ideas in the early stages.

- Education and Learning: Students and developers can use LlamaCoder to see how working applications are put together without first needing deep coding experience.

- Automating Coding Tasks: LlamaCoder automates some coding tasks, reducing developers' workload and allowing them to focus on more complex development issues.

- Multi-Language Support: LlamaCoder supports multiple programming languages, helping developers work on projects across different languages.

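- Local Deployment: LlamaCoder can be cloned and run on a developer's own machine. According to the project's setup instructions, the local app still needs a Together AI API key, so model inference is served by Together AI rather than run on local hardware.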

Features & Capabilities

What You Can Do

- Code Generation

- Full-Stack Application Development

- Data Analysis

- PDF Analysis

Categories

- AI

- Full-Stack Development

- Open Source

- Code Generation

- Next.js

- Tailwind

- Helicone

- Sandpack

- Data Analysis

- PDF Analysis
