MIDI

by VAST

MIDI is an advanced 3D scene generation technology that transforms a single image into a high-fidelity 360-degree 3D scene in a short time.

What is MIDI?

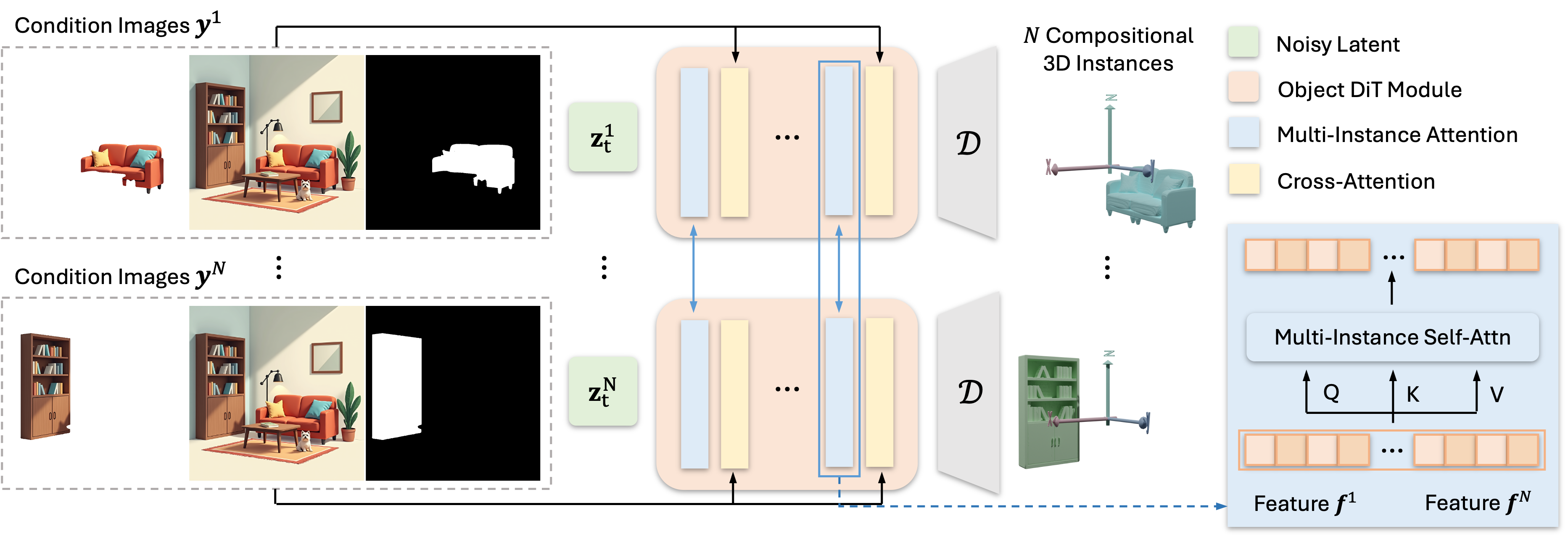

MIDI (Multi-Instance Diffusion for Single Image to 3D Scene Generation) is a 3D scene generation technology that quickly transforms a single image into a high-fidelity 360-degree 3D scene. It first segments the input image to identify the independent elements in the scene, then generates the 3D scene with a multi-instance diffusion model combined with an attention mechanism. It captures both global scene context and fine detail, completes generation in 40 seconds, and generalizes well to images of different styles.

Main Features of MIDI

- 2D Image to 3D Scene: Converts a single 2D image into a 360-degree 3D scene, providing users with an immersive experience.

- Multi-Instance Synchronous Diffusion: Simultaneously models multiple objects in the scene, avoiding the complex process of generating and combining them one by one.

- Intelligent Segmentation and Recognition: Intelligently segments the input image to accurately identify various independent elements in the scene.

Technical Principles of MIDI

- Intelligent Segmentation: MIDI first segments the single input image and identifies the independent elements in the scene (such as tables, chairs, and coffee cups). These segmented image regions, together with the overall scene image, become the basis for 3D scene construction.

- Multi-Instance Synchronous Diffusion: Unlike other methods that generate 3D objects one by one and then combine them, MIDI uses a multi-instance synchronous diffusion approach. It can simultaneously model multiple objects in the scene, similar to an orchestra playing different instruments at the same time, eventually converging into a harmonious movement. This avoids the complex process of generating and combining them one by one, greatly improving efficiency.

- Multi-Instance Attention Mechanism: MIDI introduces a novel multi-instance attention mechanism that captures the interactions and spatial relationships between objects. As a result, the generated 3D scene contains not only the individual objects but also a logical, coherent arrangement of those objects relative to one another.

- Global Perception and Detail Fusion: MIDI introduces multi-instance attention layers and cross-attention layers to fully understand the contextual information of the global scene, integrating it into the generation process of each independent 3D object. This ensures the overall coordination of the scene and enriches the details.

- Efficient Training and Generalization Ability: During training, MIDI uses a limited amount of scene-level data to supervise the interactions between 3D instances, and combines it with a large amount of single-object data for regularization, which helps it generalize to images of different styles.

- Texture Detail Optimization: MIDI's generated 3D scenes have detailed textures. By applying technologies such as MV-Adapter, the final 3D scenes look more realistic and credible.
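
The multi-instance attention idea described above can be illustrated with a toy sketch. This is not MIDI's implementation: it uses plain Python lists, skips the learned query/key/value projections, and the function names (`attend`, `per_instance_attention`, `multi_instance_attention`) are hypothetical. It only shows the core contrast, assuming each object is represented by a list of token vectors: in the baseline, an object's tokens attend only to that object's own tokens, while in the multi-instance variant they attend to the tokens of every object in the scene.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Scaled dot-product attention on lists of token vectors."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    dim = len(values[0])
    out = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, values))
                    for d in range(dim)])
    return out


def per_instance_attention(instances):
    """Baseline: each instance attends only to its own tokens."""
    return [attend(tokens, tokens, tokens) for tokens in instances]


def multi_instance_attention(instances):
    """Each instance attends to the tokens of all instances in the scene."""
    all_tokens = [tok for tokens in instances for tok in tokens]
    return [attend(tokens, all_tokens, all_tokens) for tokens in instances]


# Toy scene: 2 instances, 3 tokens each, 4 features per token (assumed sizes).
random.seed(0)
scene = [[[random.gauss(0, 1) for _ in range(4)] for _ in range(3)]
         for _ in range(2)]
fused = multi_instance_attention(scene)
```

In this sketch, changing the tokens of one object changes the output for every other object under `multi_instance_attention` but not under `per_instance_attention`, which is the sense in which the objects are modeled jointly rather than one by one.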

Project Addresses of MIDI

- Project Website: https://huanngzh.github.io/MIDI-Page/

- GitHub Repository: https://github.com/VAST-AI-Research/MIDI-3D

- HuggingFace Model Library: https://huggingface.co/VAST-AI/MIDI-3D

- arXiv Technical Paper: https://arxiv.org/pdf/2412.03558
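
As a starting point, the model files can be fetched from the HuggingFace repository listed above. The following is a minimal sketch, assuming the `huggingface_hub` package is installed; the helper name `download_midi_weights` and the local directory are hypothetical choices, not something the project prescribes. It only downloads the files; for running inference, refer to the instructions in the GitHub repository.

```python
REPO_ID = "VAST-AI/MIDI-3D"  # repository ID taken from the HuggingFace URL above


def download_midi_weights(local_dir="pretrained_weights/MIDI-3D"):
    """Download a snapshot of the model repository and return its local path.

    The default local_dir is an illustrative assumption.
    """
    # Imported lazily so the module can be loaded without huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


# Example call (requires network access):
# path = download_midi_weights()
```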

Application Scenarios of MIDI

- Game Development: Quickly generate 3D scenes for games, reducing development costs.

- Virtual Reality: Provide users with immersive 3D experiences.

- Interior Design: Quickly generate 3D models from indoor photos, facilitating design and display.

- Cultural Heritage Digital Preservation: Create 3D models of cultural relics for research and display.


