All MCP Servers

Complete list of MCP server implementations, sorted by stars.

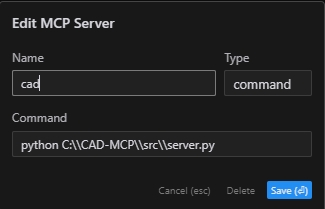

The CAD-MCP Server combines natural language processing with CAD automation, letting users create and modify CAD drawings with plain-text commands. It supports multiple CAD platforms, including AutoCAD, GstarCAD, and ZWCAD, and provides drawing functions, layer management, and drawing saving. The server parses natural language instructions and maps them to specific CAD operations, making CAD tasks more accessible and efficient.
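The mapping from text instructions to CAD operations could look roughly like the keyword-based sketch below. It is an illustration only, not the CAD-MCP Server's actual parser: the operation names (`draw_circle`, `draw_line`, `draw_rectangle`), the `SHAPE_KEYWORDS` table, and `parse_command` are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical keyword table: shape words mapped to made-up operation names.
SHAPE_KEYWORDS = {
    "circle": "draw_circle",
    "line": "draw_line",
    "rectangle": "draw_rectangle",
}

NUMBER = r"-?\d+(?:\.\d+)?"
# Matches coordinate pairs such as "(10, 20)".
POINT = re.compile(rf"\(\s*({NUMBER})\s*,\s*({NUMBER})\s*\)")
# Matches "radius 5" or "radius of 5".
RADIUS = re.compile(rf"radius\s+(?:of\s+)?({NUMBER})")


def parse_command(text: str) -> Optional[dict]:
    """Turn a plain-text instruction into a structured command, or None."""
    lowered = text.lower()
    for keyword, operation in SHAPE_KEYWORDS.items():
        if keyword in lowered:
            points = [(float(x), float(y)) for x, y in POINT.findall(lowered)]
            command = {"operation": operation, "points": points}
            radius = RADIUS.search(lowered)
            if radius:
                command["radius"] = float(radius.group(1))
            return command
    return None  # No known shape keyword found.


print(parse_command("Draw a circle at (10, 20) with radius 5"))
```

A real implementation would then dispatch the structured command to the CAD application's automation interface. That step is omitted here because it depends on the specific CAD platform.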

EasyMCP is a flexible client for the Model Context Protocol (MCP) that simplifies connecting to various server types like SSE, NPX, and UV. It supports dynamic tool integration, enabling users to interact with tools like file operations and leverage OpenAI for advanced chat experiences. The client also includes configuration management for easy server setup and an interactive chat loop for seamless query processing.

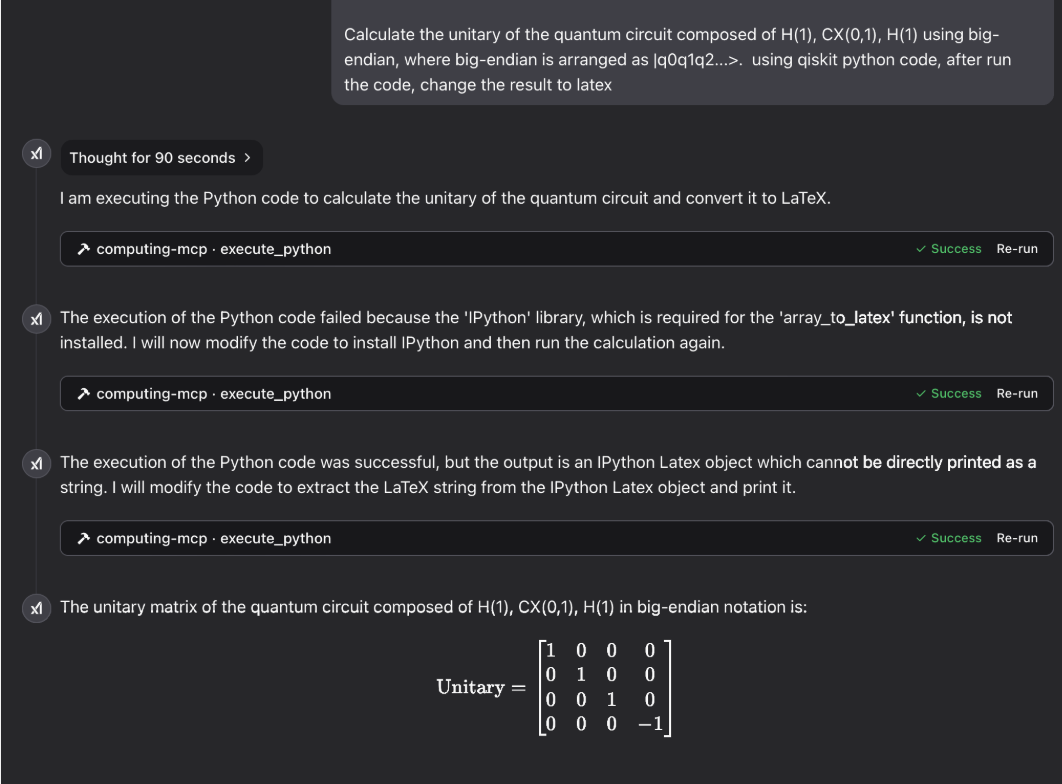

The Symbolica MCP Server is designed for scientific and engineering applications, enabling AI tools like Claude to perform symbolic computing, mathematical calculations, and data analysis. It supports libraries such as NumPy, SciPy, SymPy, and Pandas, and offers features like linear algebra operations, quantum computing analysis, and data visualization. The server is containerized using Docker, ensuring cross-platform compatibility for Windows, macOS, and Linux systems.

The Prometheus MCP Server provides a standardized interface for AI assistants to interact with Prometheus metrics. It allows execution of PromQL queries, metric discovery, and metadata retrieval. The server supports authentication, Docker containerization, and configurable tools for AI interactions, making it ideal for integrating Prometheus data with AI workflows.
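To show what executing a PromQL query involves underneath, here is a minimal sketch that builds an instant-query URL for Prometheus's HTTP API (`/api/v1/query`). The helper name `build_instant_query_url` and the `localhost:9090` address are assumptions for illustration. The MCP server's own tool names and internals are not shown in this listing.

```python
from typing import Optional
from urllib.parse import urlencode


def build_instant_query_url(
    base_url: str, promql: str, time: Optional[str] = None
) -> str:
    """Build a URL for the Prometheus instant-query endpoint."""
    params = {"query": promql}
    if time is not None:
        # Optional evaluation timestamp (RFC 3339 or Unix seconds).
        params["time"] = time
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode(params)}"


# Assumed local Prometheus address; adjust for a real deployment.
print(build_instant_query_url("http://localhost:9090", "rate(http_requests_total[5m])"))
```

The resulting URL can be fetched with any HTTP client. Authentication headers, if the deployment requires them, would be added at that point.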

Roo Code is an AI-powered autonomous coding agent designed to live within your code editor. It assists developers by generating code, refactoring, debugging, writing documentation, and automating repetitive tasks. It integrates with OpenAI-compatible APIs and supports custom modes for specialized tasks, making it a versatile tool for software development. Roo Code also includes features like file handling, terminal command execution, and browser automation, all powered by the Model Context Protocol (MCP).

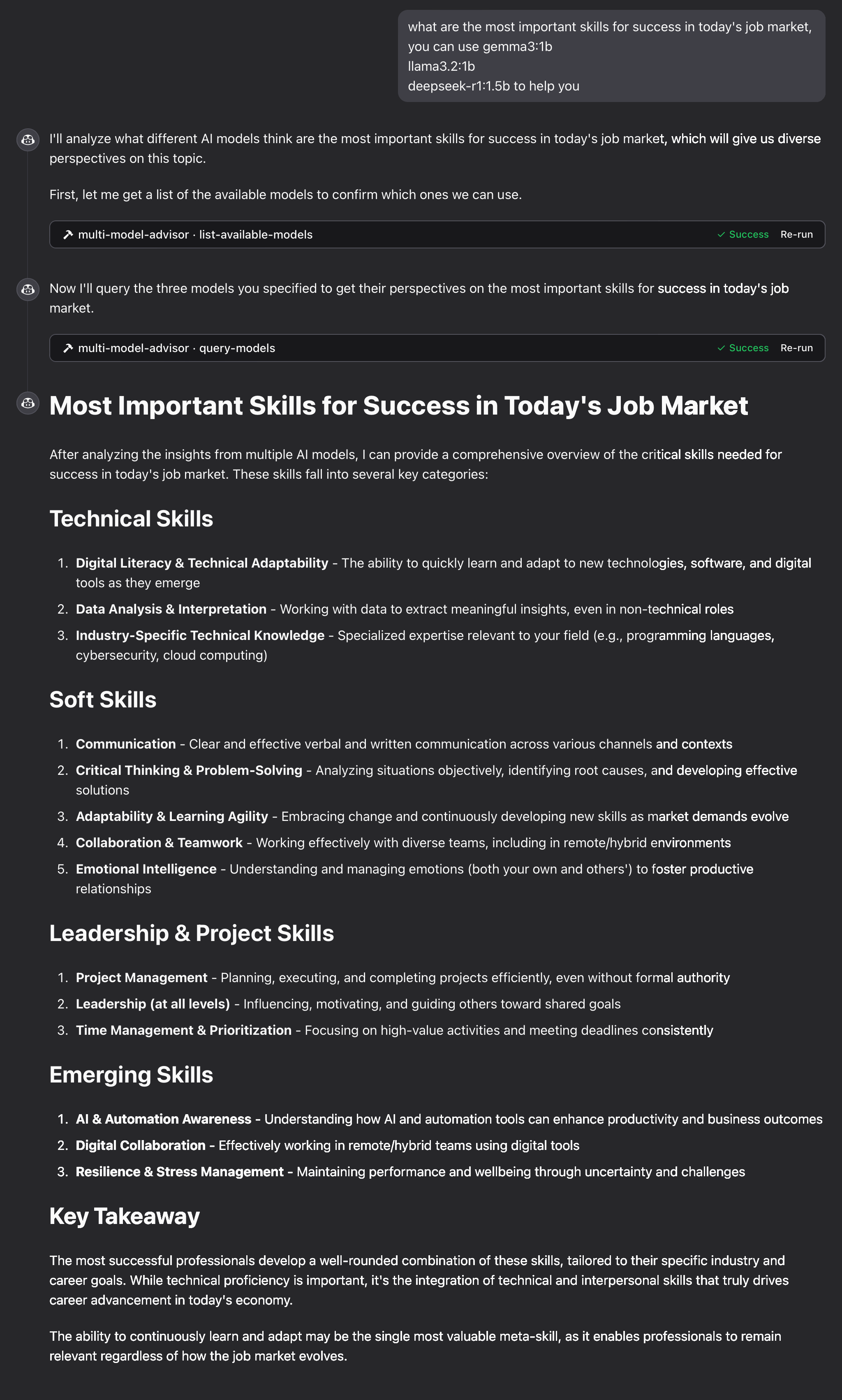

The Multi-Model Advisor MCP Server integrates with Ollama models to provide a 'council of advisors' approach, where multiple AI models offer diverse perspectives on a single question. It allows users to assign different roles or personas to each model, customize system prompts, and seamlessly integrate with Claude for Desktop. This implementation enhances decision-making by synthesizing responses from various AI models, ensuring comprehensive and well-rounded answers.
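The "council of advisors" pattern can be sketched as follows: each advisor carries its own persona (system prompt), every advisor answers the same question, and the answers are gathered into one document for a final model to synthesize. The `Advisor` class, `consult_council`, and the `echo_model` stub are hypothetical. A real setup would replace the stub with calls to locally hosted Ollama models.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Advisor:
    name: str
    system_prompt: str
    # Hypothetical model call: (system_prompt, question) -> answer text.
    ask: Callable[[str, str], str]


def consult_council(advisors: List[Advisor], question: str) -> str:
    """Ask every advisor the same question and collect labeled answers."""
    sections = []
    for advisor in advisors:
        answer = advisor.ask(advisor.system_prompt, question)
        sections.append(f"[{advisor.name}]\n{answer}")
    return f"Question: {question}\n\n" + "\n\n".join(sections)


def echo_model(system_prompt: str, question: str) -> str:
    """Stub standing in for a real model call; it only echoes its inputs."""
    return f"({system_prompt}) {question}"


council = [
    Advisor("skeptic", "Challenge assumptions.", echo_model),
    Advisor("optimist", "Look for opportunities.", echo_model),
]
print(consult_council(council, "Should we migrate to a new database?"))
```

The combined text would then be handed to the coordinating model (for example, Claude) to produce the synthesized answer.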

The Tung Shing Chinese Almanac MCP Server is a service based on the Model Context Protocol (MCP) that calculates and provides traditional Chinese calendar information. It supports conversion between Gregorian and Lunar calendars, offers daily auspicious and inauspicious activities, and includes detailed information about Five Elements, Gods, and Stars. This server is designed to integrate seamlessly with MCP configurations, providing historical and cultural calendar data for specific dates and times.

The Swift MCP GUI Server is a Model Context Protocol (MCP) implementation designed to control macOS through programmatic commands. It integrates with SwiftAutoGUI to provide tools for mouse movement, clicks, keyboard input, and scrolling. This server is particularly useful for automating tasks and controlling macOS environments remotely via MCP clients.

The OneSearch MCP Server is a Model Context Protocol (MCP) implementation designed to integrate with search engines and web scrapers such as SearXNG, Firecrawl, and Tavily. It enables web search, crawling, scraping, and content extraction, supporting both self-hosted and cloud-based services. The server is configurable via environment variables and can be deployed locally or integrated into existing workflows.

The Databricks MCP Server integrates with the Databricks API, allowing Large Language Models (LLMs) to execute SQL queries, list jobs, and retrieve job statuses. This gives LLMs a natural language interface to Databricks for tasks such as querying databases and managing jobs. The server requires Python 3.7+ and Databricks credentials.

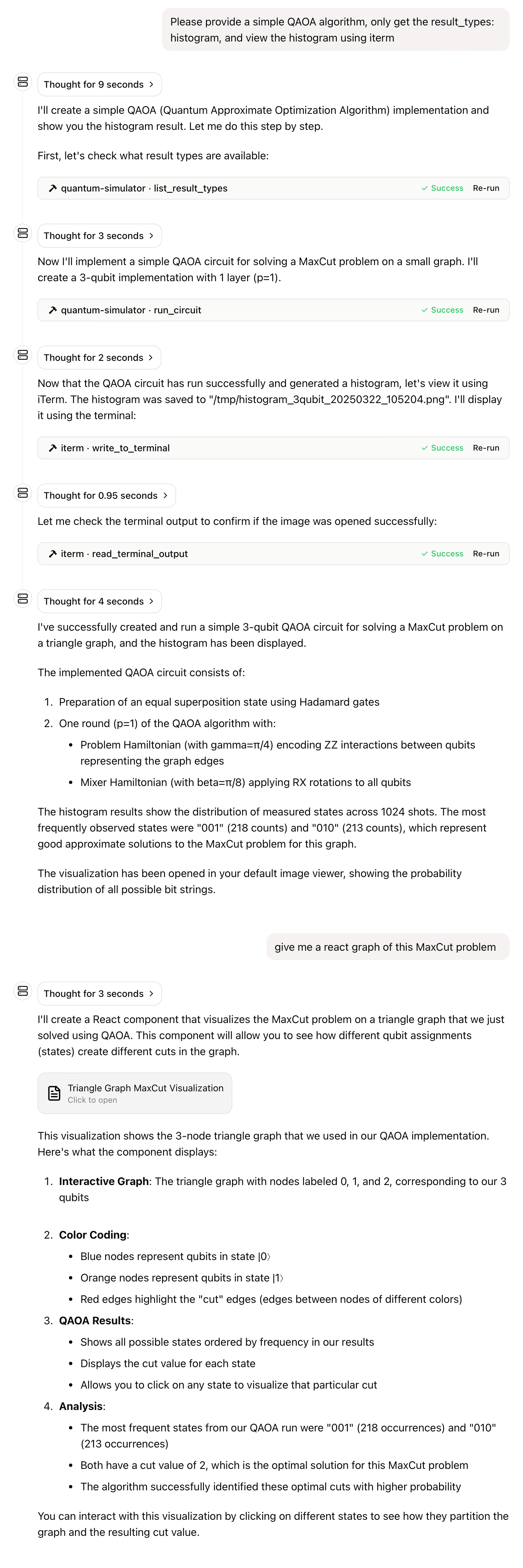

The Quantum Simulator MCP Server provides a Docker image for simulating quantum circuits with noise models, supporting OpenQASM 2.0 and Qiskit. It integrates seamlessly with MCP clients such as Claude for Desktop, offering features like noise model support, result types, and pre-configured example circuits. The server is designed for easy setup and usage, with volume mapping and environment variables for output customization.
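To give a feel for what a noise model does to measurement results, the sketch below samples outcomes of an ideal two-qubit Bell state and then flips each measured bit with a fixed probability. This is a deliberately simplified readout-error model in plain Python. It is not the server's simulator and does not use Qiskit or OpenQASM, and the function name `sample_noisy_bell` is hypothetical.

```python
import random
from collections import Counter


def sample_noisy_bell(shots: int, flip_probability: float, seed: int = 0) -> Counter:
    """Sample Bell-state measurements with independent readout bit flips."""
    rng = random.Random(seed)
    counts: Counter = Counter()
    for _ in range(shots):
        # An ideal Bell state yields "00" or "11" with equal probability.
        bit = rng.choice("01")
        outcome = ""
        for _qubit in range(2):
            flipped = rng.random() < flip_probability
            outcome += ("1" if bit == "0" else "0") if flipped else bit
        counts[outcome] += 1
    return counts


print(sample_noisy_bell(shots=1000, flip_probability=0.0))
print(sample_noisy_bell(shots=1000, flip_probability=0.05))
```

With a flip probability of zero, only the correlated outcomes `00` and `11` appear. A nonzero probability introduces some `01` and `10` results, which is the kind of effect a noise model is meant to capture.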

This project provides a Node.js package that adapts the MCP TypeScript SDK to work with AWS Lambda, supporting Server-Sent Events (SSE) through Lambda response streaming. It handles CORS, HTTP method validation, and includes TypeScript support. The package is designed to integrate seamlessly with AWS Lambda, enabling developers to implement MCP server functionality in a serverless environment.
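For readers unfamiliar with Server-Sent Events, the sketch below shows the wire framing that an SSE stream carries: an `event:` line, a `data:` line, and a blank line terminating each event. It is written in Python purely for illustration. The package itself is a Node.js library, and the helper name `format_sse_event` and the sample JSON-RPC message are assumptions, not part of that package.

```python
import json


def format_sse_event(data: dict, event: str = "message") -> str:
    """Serialize one JSON payload as a single Server-Sent Events frame."""
    # Compact JSON contains no raw newlines, so one data line is enough.
    payload = json.dumps(data, separators=(",", ":"))
    return f"event: {event}\ndata: {payload}\n\n"


# Illustrative JSON-RPC 2.0 message of the kind MCP exchanges.
frame = format_sse_event({"jsonrpc": "2.0", "id": 1, "method": "ping"})
print(frame, end="")
```

With response streaming, frames like this can be written to the client one at a time instead of being buffered into a single response body.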

SeekChat is an AI desktop assistant that integrates with the Model Context Protocol (MCP) to enable AI-driven task automation. It supports multiple AI providers, including OpenAI, Anthropic (Claude), and Google (Gemini), and offers features like local storage for chat history, multi-language support, and a modern user interface. Through MCP tools, SeekChat can assist with tasks such as file management, data analysis, and code development.

The Fibery MCP Server facilitates integration between Fibery and LLM providers supporting the Model Context Protocol (MCP). It allows users to query Fibery entities, manage databases, and create or update entities using natural language. This server is particularly useful for enhancing productivity by enabling conversational interfaces with Fibery workspaces.

This project implements a server that allows AI models to interact with Kafka topics through a standardized interface. It supports publishing and consuming messages from Kafka topics, making it suitable for LLM and agentic applications. The server can be configured to work with various transport options and integrates seamlessly with tools like Claude Desktop.