All MCP Servers Complete list of MCP server implementations, sorted by stars

The Perplexity MCP Server acts as a bridge between AI assistants (such as Claude Code and Claude Desktop) and the Perplexity API, providing enhanced search and reasoning capabilities. It allows AI assistants to retrieve real-time information from the web using Perplexity's Sonar Pro model and perform complex reasoning tasks with the Sonar Reasoning Pro model. This integration ensures a seamless experience for users by enabling direct access to these features within the AI assistant's interface.

The Onyx MCP Server enables seamless integration between MCP-compatible clients and Onyx AI knowledge bases. It provides enhanced semantic search, context window retrieval, and chat integration, allowing users to search and retrieve relevant context from documents. The server supports configurable document set filtering, full document retrieval, and chat sessions for comprehensive answers.

MCP RSS is a server implementation of the Model Context Protocol (MCP) designed to interact with RSS feeds. It allows users to parse OPML files to import RSS feed subscriptions, automatically fetch and update articles, and expose RSS content through an MCP API. Features include marking articles as favorites, filtering by source and status, and integration with MySQL for data storage. It is built using TypeScript and JavaScript, and can be configured via environment variables.

This repository provides a sample implementation of an MCP server, designed for debugging in Visual Studio Code. It includes examples in both Python and TypeScript, allowing developers to test and debug MCP tools directly in their browser using the MCP Inspector. The project is ideal for those looking to understand and experiment with MCP server development in a controlled, debuggable environment.

This project provides a Zed extension for the Axiom MCP server, allowing users to configure and integrate Axiom's API within Zed. It includes support for setting up API tokens and configuring the server via a `config.txt` file, enhancing Zed's functionality with Axiom's capabilities.

This MCP server provides seamless integration between Turso databases and Large Language Models (LLMs). It features a two-level authentication system that handles both organization-level and database-level operations, enabling efficient management and querying of Turso databases directly from LLMs. The server supports various operations, including database creation, deletion, and vector search, making it a powerful tool for managing database interactions in AI workflows.

This MCP server integrates with Cursor to activate Claude's explicit thinking mode, allowing users to see detailed reasoning processes for their queries. It uses the Model Context Protocol to intercept and format queries with special tags, triggering Claude's reasoning mode. The tool is designed for developers who want to understand Claude's thought process in problem-solving, mathematical proofs, and code analysis.

The Ollama MCP Client is designed to work with various language models such as Qwen, Llama 3, Mistral, and Gemini, served via Ollama. It supports real-time streaming of LLM responses and integrates seamlessly with the Database MCP Server, enabling natural language interactions with databases. This client is ideal for developers looking to leverage the power of language models for database operations and natural language queries.

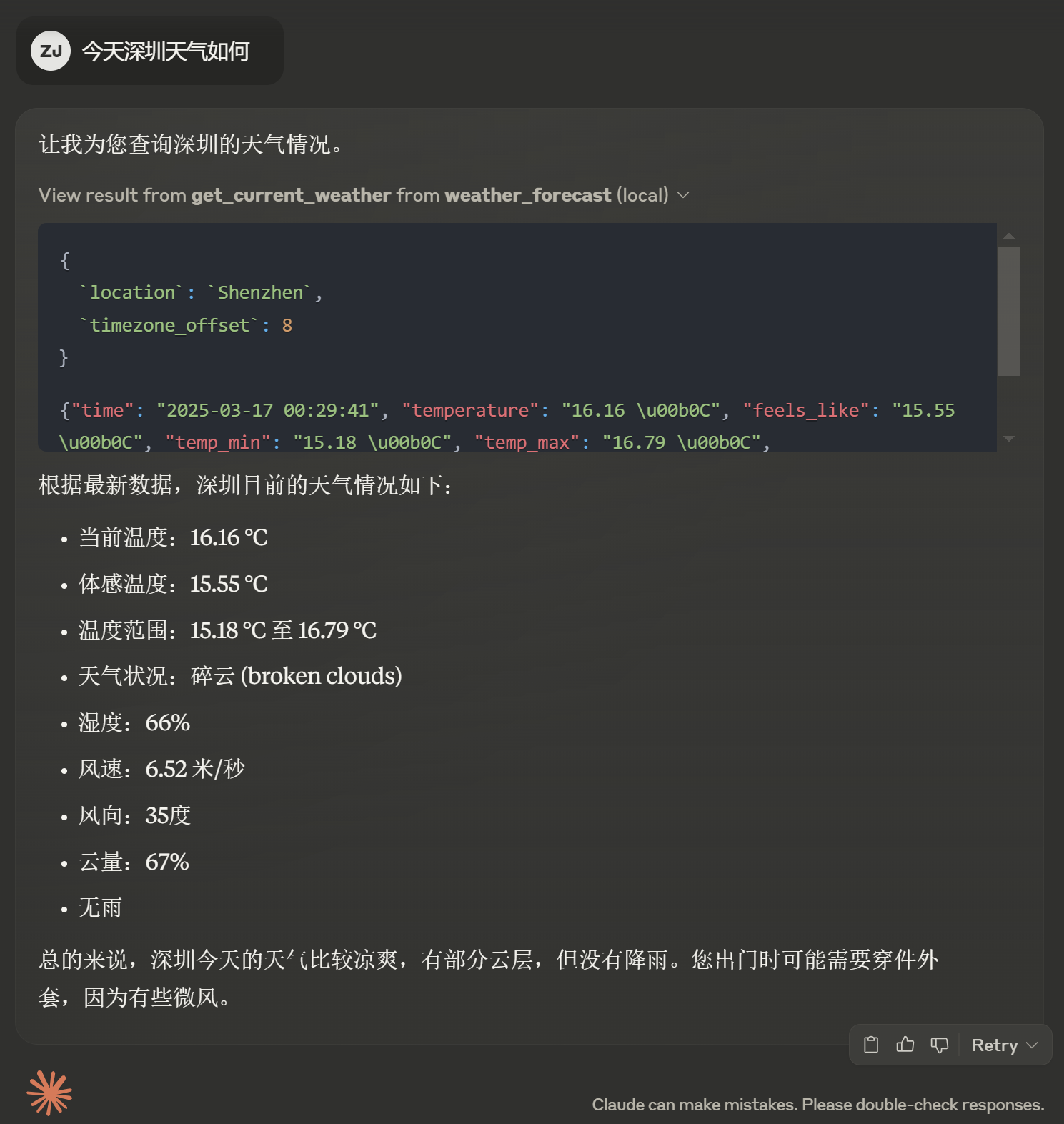

The OpenWeather MCP Server is a Model Control Protocol (MCP) server designed to provide global weather forecasts and current weather conditions. It integrates with the OpenWeatherMap API to fetch detailed weather information, including temperature, humidity, wind speed, and more. The server supports querying weather conditions anywhere in the world and can be easily configured using environment variables or direct API key parameters. It is designed to work seamlessly with MCP-supported clients, making it a versatile tool for weather-related applications.

This MCP server provides a bridge between AI agents and the Brex financial platform, allowing agents to retrieve account information, access expense data, manage budget resources, and view team information. It implements standardized resource handlers and tools following the MCP specification, enabling secure and efficient access to financial data. The server supports features like receipt management, expense tracking, and budget monitoring, making it a powerful tool for AI-driven financial operations.

The Claude Kubernetes MCP Server is a Go-based implementation designed to orchestrate Kubernetes workloads using Claude AI, ArgoCD, GitLab, and Vault. It provides a REST API for programmatic interaction with these systems, enabling advanced automation and control of Kubernetes environments. The server supports local development, Docker deployment, and production-ready Helm chart deployment for Kubernetes clusters.

The MCP PostgreSQL Server provides a standardized interface for AI models to perform database operations on PostgreSQL. It supports secure connections, prepared statements, and comprehensive error handling, making it easier for AI models to query and manage PostgreSQL databases. Features include automatic connection management, support for PostgreSQL-specific syntax, and TypeScript integration.

The B12 MCP Server is designed to facilitate the generation of websites using AI, implementing the model context protocol to streamline the process. It provides a robust framework for integrating AI-driven web design, enabling efficient and scalable website creation. This server is particularly useful for automating and enhancing the web development workflow.

This MCP server integrates with Google Drive and Google Sheets, enabling users to create, modify, and manage spreadsheets programmatically. It supports both service account and OAuth 2.0 authentication methods, making it suitable for both automated and interactive use cases. The server provides tools for retrieving sheet data, updating cells, batch updates, listing sheets, and creating new spreadsheets or sheets.

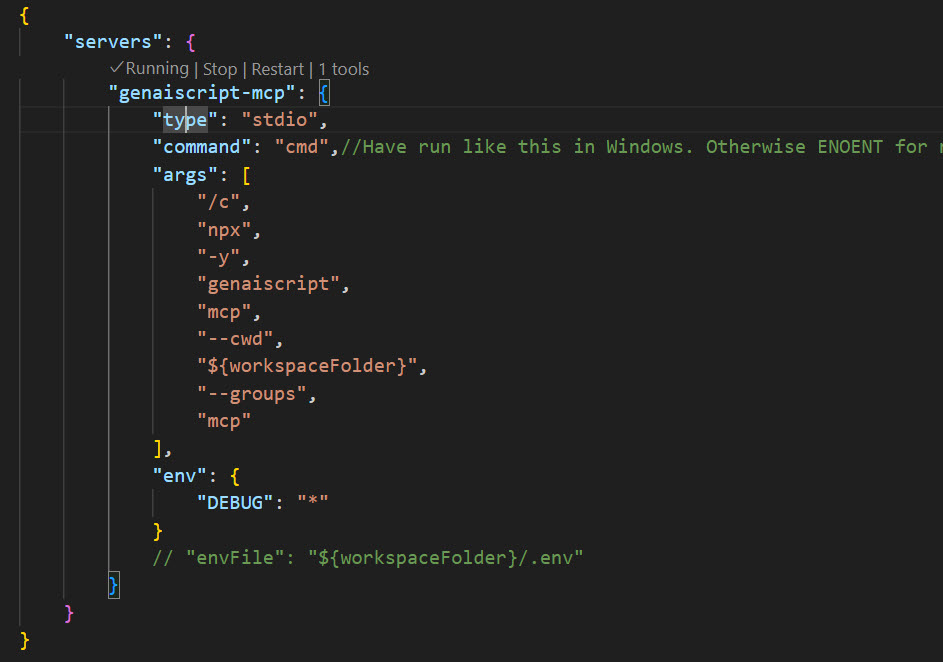

This repository showcases the MCP Server functionalities of GenAIScript, a framework designed to facilitate communication with AI models, including local ones. It provides a standardized way to connect AI models to various data sources and tools, similar to a USB-C port for AI applications. The demo includes configuration examples for setting up the MCP server in VSCode and integrating it with GitHub Copilot.

This project provides a Springboot-based template for developing MCP servers, supporting both STDIO and Server-Sent Events (SSE) modes. It includes JUnit for unit testing and offers flexibility in configuring message and SSE endpoints. The template is designed to streamline the development of MCP-compliant servers, making it easier to integrate with various AI tools and services.

The LND MCP Server connects to Lightning Network nodes, allowing users to query channel information and other node data using natural language. It provides structured JSON responses alongside human-readable answers, integrates with MCP-supporting LLMs, and supports secure connections via TLS certificates and macaroons. It also includes a mock mode for development without a real LND node.

The Pensieve MCP Server is a TypeScript-based implementation of a RAG (Retrieval-Augmented Generation) knowledge management system. It allows users to store and query knowledge using natural language, with LLM-powered analysis and response synthesis. Features include memory-based URIs, markdown file storage, and tools like `ask_pensieve` for contextual answers based on stored knowledge.