All MCP Servers

Complete list of MCP server implementations, sorted by stars.

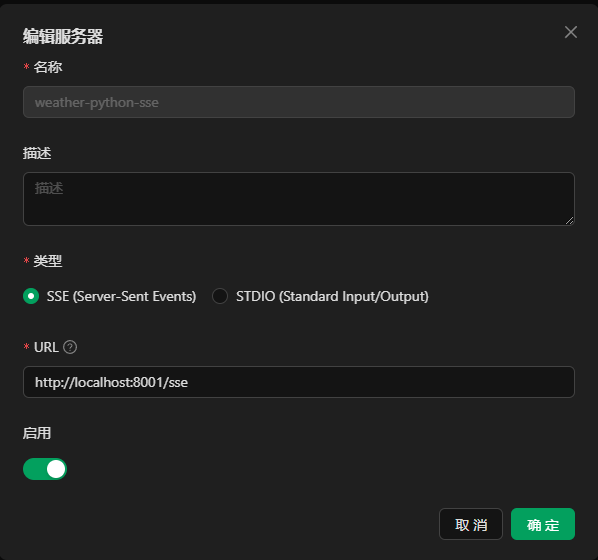

This project is a Model Context Protocol (MCP) server designed to provide real-time weather information. It integrates with the Hefeng Weather API to fetch detailed weather data, including temperature, humidity, wind speed, and precipitation. The server can be deployed using Docker and is compatible with Python 3.13.2, making it easy to set up and use in various environments.

MCPilled is a curated collection of news and updates about MCP servers, clients, protocol developments, and related events. It provides a centralized resource for tracking important milestones in the rapid evolution of the Model Context Protocol. The project also integrates tools like Supabase and OpenAI for enhanced functionality, such as vector search and article management.

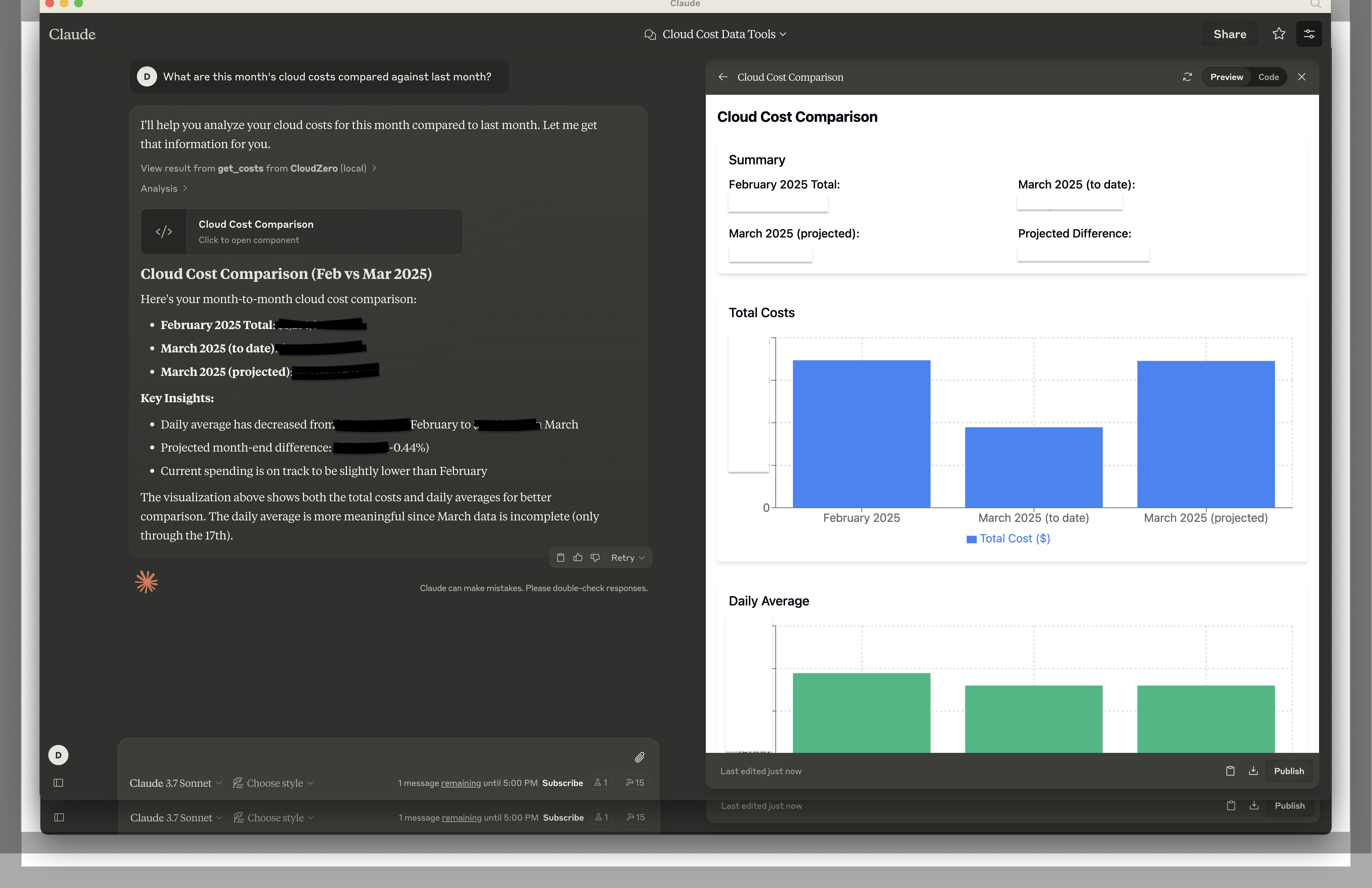

This MCP server enables Claude Desktop to interact with CloudZero API, allowing users to query and analyze cloud cost data directly from the interface. It implements tools like `get_costs`, `get_dimensions`, `list_budgets`, and `list_insights` for seamless integration. The server uses JSON-RPC 2.0 for communication and can be configured to run as a background process in Claude Desktop.
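MCP clients invoke tools by sending a JSON-RPC 2.0 request with the `tools/call` method. As a rough sketch of what a call to `get_costs` might look like on the wire, the Python below builds such a request. The argument names (`start_date`, `end_date`) are hypothetical placeholders and are not taken from this server's actual input schema.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Return a JSON-RPC 2.0 request string for an MCP `tools/call` invocation."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical arguments; the real tool's input schema may differ.
message = build_tool_call(
    1, "get_costs", {"start_date": "2025-01-01", "end_date": "2025-01-31"}
)
print(message)
```

The same helper would work for `get_dimensions`, `list_budgets`, or `list_insights`, since only the tool name and arguments change between calls.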

This MCP server enables AI assistants to generate and edit images using Google's Gemini Flash models. It supports text-to-image generation, image transformation based on prompts, intelligent filename generation, and local image storage. The server is designed to work with MCP clients like Claude Desktop, providing high-resolution image output and strict exclusion of text from generated images.

The CheerLights MCP Server is a Model Context Protocol (MCP) implementation that allows AI tools such as Claude to interact with the CheerLights API. CheerLights is a global IoT project that synchronizes colors across connected lights worldwide. This server provides features like fetching the current CheerLights color, viewing recent color change history, and real-time API integration. It is designed to work seamlessly with Claude for Desktop, enabling users to query CheerLights data directly through AI interactions.

The medRxiv MCP Server bridges AI assistants with medRxiv's preprint repository using the Model Context Protocol (MCP). It allows AI models to search for health sciences preprints, access detailed metadata, and retrieve paper content programmatically. Core features include paper search, efficient retrieval, metadata access, and research support, making it a valuable tool for health sciences research and analysis.

This Python template provides a streamlined foundation for building Model Context Protocol (MCP) servers, making AI-assisted development of MCP tools easier and more efficient. It includes features like configurable transport modes, example service integrations, and embedded MCP documentation to enhance AI tool understanding. The template is designed to help developers quickly create and deploy MCP tools with minimal setup.

This MCP server integrates TinyPNG's image compression capabilities, allowing users to compress local and remote images efficiently. It supports various image formats and can be run using `bun` or `node`, making it a versatile tool for developers working with image optimization.

This project implements a Model Context Protocol (MCP) server specifically designed for Scikit-learn. It offers a standardized interface to interact with Scikit-learn models and datasets, supporting features like model training, evaluation, data preprocessing, model persistence, and hyperparameter tuning. The server simplifies the integration and management of Scikit-learn workflows in a structured and scalable manner.

The Gradle MCP Server facilitates interaction between AI tools and Gradle projects by implementing the Model Context Protocol (MCP). It leverages the Gradle Tooling API to provide features such as project metadata retrieval, remote task execution, and test running. This server supports multiple modes, including stdio and Server-Sent Events (SSE), making it versatile for various use cases.
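The two modes differ mainly in how each JSON-RPC message is framed. Over stdio, MCP messages are newline-delimited JSON; over SSE, the server delivers each message as an event with a `data:` field. The Python below is a minimal sketch of both framings, not code from the Gradle server; the helper names are made up for illustration.

```python
import io
import json


def frame_stdio(message):
    """Serialize a JSON-RPC message as one newline-terminated line (stdio mode)."""
    # json.dumps escapes newlines inside strings, so the payload stays on one line.
    return json.dumps(message) + "\n"


def frame_sse(message):
    """Serialize a JSON-RPC message as a Server-Sent Events `message` event."""
    return "event: message\ndata: " + json.dumps(message) + "\n\n"


def read_stdio(stream):
    """Yield parsed messages from a line-delimited stream, skipping blank lines."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Round-trip two requests through an in-memory stream standing in for stdin.
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
stream = io.StringIO(frame_stdio(request) + frame_stdio(request))
messages = list(read_stdio(stream))
```

Stdio suits a client that launches the server as a local subprocess, while SSE fits a server that runs as a separate HTTP service.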

This project extends NextChat by adding functionality to create and deploy MCP (Model Context Protocol) servers through chat interactions. It integrates with OpenRouter to access a wide range of LLMs, offering features like tool extraction, one-click deployment, and integration guides for various AI systems. The project simplifies the process of building and deploying MCP servers, making it accessible directly from chat interfaces.

PHPocalypse-MCP is an MCP server that automates the execution of tests and static analysis, so developers can focus on coding without the overhead of running checks manually. It integrates with PHP projects, letting developers define and run tools such as PHP-CS-Fixer, PHPStan, and unit tests through a simple YAML configuration. It is a proof of concept, and is particularly useful for developers who also work with TypeScript and Node.js.

The Raindrop.io MCP Server enables seamless integration of Raindrop.io bookmark management with LLM applications such as Claude and Cursor. It allows users to add bookmarks, fetch latest bookmarks, and search bookmarks by tags or queries. Built with Python, it simplifies bookmark management within LLM workflows.

This project implements an MCP-based AI infrastructure that facilitates real-time tool execution and structured knowledge retrieval for AI systems such as Claude and Cursor. It extends the capabilities of an MCP server by integrating custom tools for fetching external data and enhancing AI tool execution. The architecture supports dynamic agentic interactions, making it a versatile solution for AI integration and extensibility.

OmniLLM is a Model Context Protocol (MCP) server designed to allow Claude to access and integrate responses from various large language models (LLMs) including ChatGPT, Azure OpenAI, and Google Gemini. It provides a unified interface for querying and comparing responses from these LLMs, enhancing Claude's capabilities with multi-model insights. Features include querying multiple LLMs, checking service availability, and seamless integration with Claude Desktop.
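One way to picture a unified interface like this is a fan-out: the same prompt goes to every configured backend, and failures are recorded per backend instead of aborting the whole query. The Python below is a sketch under that assumption, not OmniLLM's actual code; `query_all` and the two fake backends are hypothetical stand-ins for real provider clients.

```python
from concurrent.futures import ThreadPoolExecutor


def query_all(prompt, backends):
    """Send one prompt to every backend and collect replies or errors by name."""

    def ask(item):
        name, call = item
        try:
            return name, {"ok": True, "text": call(prompt)}
        except Exception as exc:  # an unavailable service should not sink the others
            return name, {"ok": False, "error": str(exc)}

    with ThreadPoolExecutor(max_workers=max(1, len(backends))) as pool:
        return dict(pool.map(ask, backends.items()))


# Hypothetical stand-ins for real provider clients.
def fake_chatgpt(prompt):
    return "chatgpt says: " + prompt


def fake_gemini(prompt):
    raise ConnectionError("service unavailable")


results = query_all("hello", {"chatgpt": fake_chatgpt, "gemini": fake_gemini})
```

The per-backend `ok` flag doubles as a simple availability check, which lines up with the service availability feature described above.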

This project provides a minimal implementation of a Model Context Protocol (MCP) server tailored for Cursor IDE. It uses the official `@modelcontextprotocol/sdk` package and requires no external configuration, making it ideal for bootstrapping, forking, or experimentation. The server is lightweight, with a single file implementation, and is designed to be easy to set up and run.

This MCP server provides a comprehensive interface for accessing football data, including league standings, team information, player statistics, and live match events. Built using the Model Context Protocol (MCP), it integrates with the API-Football service via RapidAPI, offering tools for developers to retrieve and analyze football data efficiently. The server supports Docker deployment and is designed for seamless integration with applications like Claude Desktop.