All MCP Servers

Complete list of MCP server implementations, sorted by stars

This project is a proof-of-concept MCP server designed to integrate with Mythic, allowing large language models (LLMs) to automate pentesting tasks. It provides a framework for LLMs to emulate specific threat actors and execute objectives using documented techniques. The server is compatible with Claude Desktop and other MCP clients, offering a quick and flexible way to leverage LLMs for security testing.

This MCP server acts as a bridge between Large Language Models (LLMs) and iOS simulators, allowing users to control simulators through natural language commands. It supports a wide range of operations, including simulator session management, app installation, UI interaction, debugging, and advanced features like location simulation and media injection. The server can be integrated directly with MCP or used as a standalone library for iOS simulator automation.

The Elasticsearch MCP Server connects agents to Elasticsearch data through the Model Context Protocol, allowing users to interact with indices via natural language conversations. It supports listing indices, fetching mappings, and executing Elasticsearch queries with full Query DSL capabilities. The server works with MCP clients such as Claude Desktop, which serve as the interface for querying and analyzing Elasticsearch data.
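As a rough illustration of what a Query DSL request body looks like, the sketch below builds a `bool` query with a full-text `match` clause and a `range` filter. The helper name `build_search_body` and the field names `message` and `@timestamp` are assumptions made for this example; they are not taken from the server's code, and the tool that would receive this body is not shown.

```python
import json


def build_search_body(text: str, field: str, since: str, size: int = 10) -> dict:
    """Build an Elasticsearch Query DSL body (hypothetical helper)."""
    return {
        "size": size,
        "query": {
            "bool": {
                # Full-text match on the chosen field.
                "must": [{"match": {field: text}}],
                # Non-scoring filter on an assumed "@timestamp" field.
                "filter": [{"range": {"@timestamp": {"gte": since}}}],
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],
    }


body = build_search_body("timeout", "message", "now-1d")
print(json.dumps(body, indent=2))
```

An MCP client would typically pass a body like this, serialized as JSON, as the argument of a search tool call.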

This project implements a FastMCP server that extends the capabilities of Large Language Model (LLM) clients such as Claude Desktop by providing tools for local file system interaction and command execution. It follows the Model Context Protocol (MCP), a standardized method for integrating AI models with various data sources and tools. The server offers features such as executing shell commands, viewing and editing files, and searching for patterns in files, all in a controlled and secure manner.
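One common way to keep command execution "controlled" is an allowlist checked before anything is run. The sketch below shows that idea using only the standard library; it is an assumption about how such a guard could look, not this project's actual implementation. The names `ALLOWED_COMMANDS` and `run_allowed` are hypothetical.

```python
import shlex
import subprocess

# Hypothetical allowlist; a real server would define its own policy.
ALLOWED_COMMANDS = {"ls", "cat", "grep", "echo"}


def run_allowed(command: str, timeout: float = 5.0) -> str:
    """Run a command only if its executable name is on the allowlist."""
    args = shlex.split(command)
    if not args or args[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowed: {command!r}")
    # No shell is involved, so metacharacters such as ';' or '|' are passed
    # to the program as literal arguments instead of being interpreted.
    result = subprocess.run(
        args, capture_output=True, text=True, timeout=timeout, check=False
    )
    return result.stdout
```

In a FastMCP server, a function like this could be registered as a tool so that the model only ever reaches the shell through the guard.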

The Spotify MCP Server integrates with Spotify's API to allow AI assistants such as Claude and Cursor to control music playback, search for tracks, and manage playlists. It provides a set of tools for read and write operations, so that assistants can interact with Spotify through AI-powered commands.

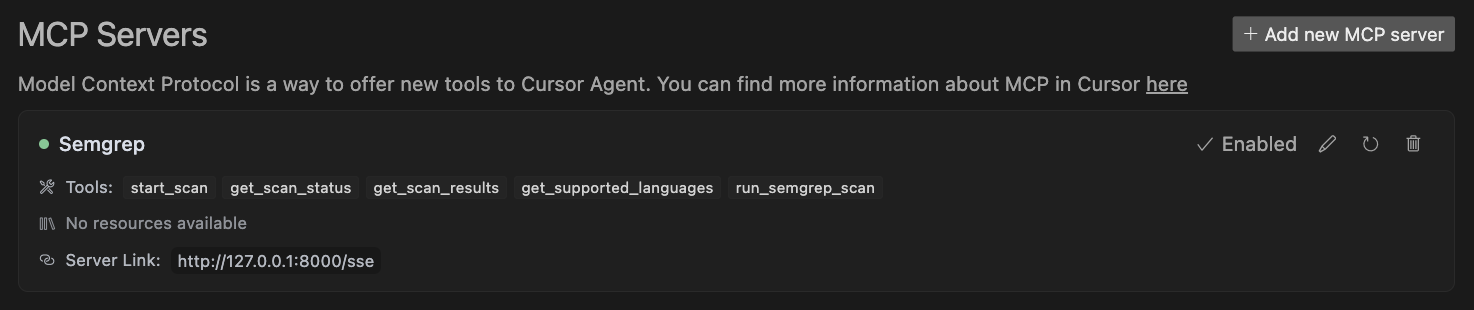

The Semgrep MCP Server provides a comprehensive interface to Semgrep through the Model Context Protocol, enabling code scanning for security vulnerabilities, custom rule creation, and result analysis. It supports advanced features like directory scanning, result filtering, and export in various formats, making it a powerful tool for developers and security analysts.

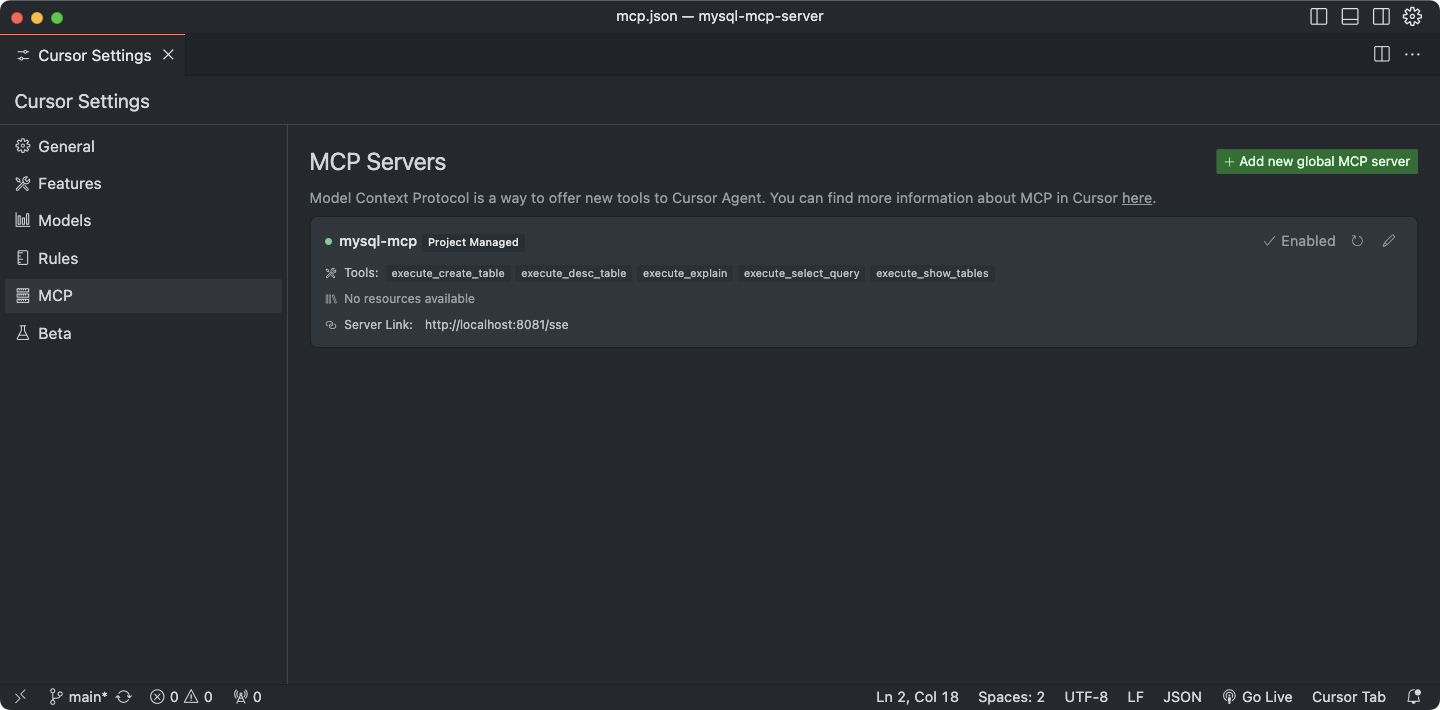

This project is a server application built on the Model Context Protocol (MCP) that lets AI models interact with MySQL databases. It provides a suite of tools for database operations such as query execution, table creation, and schema inspection. The server is containerized with Docker and Docker Compose, making it easy to deploy and scale. It supports Python-based executors and integrates with AI models through MCP.

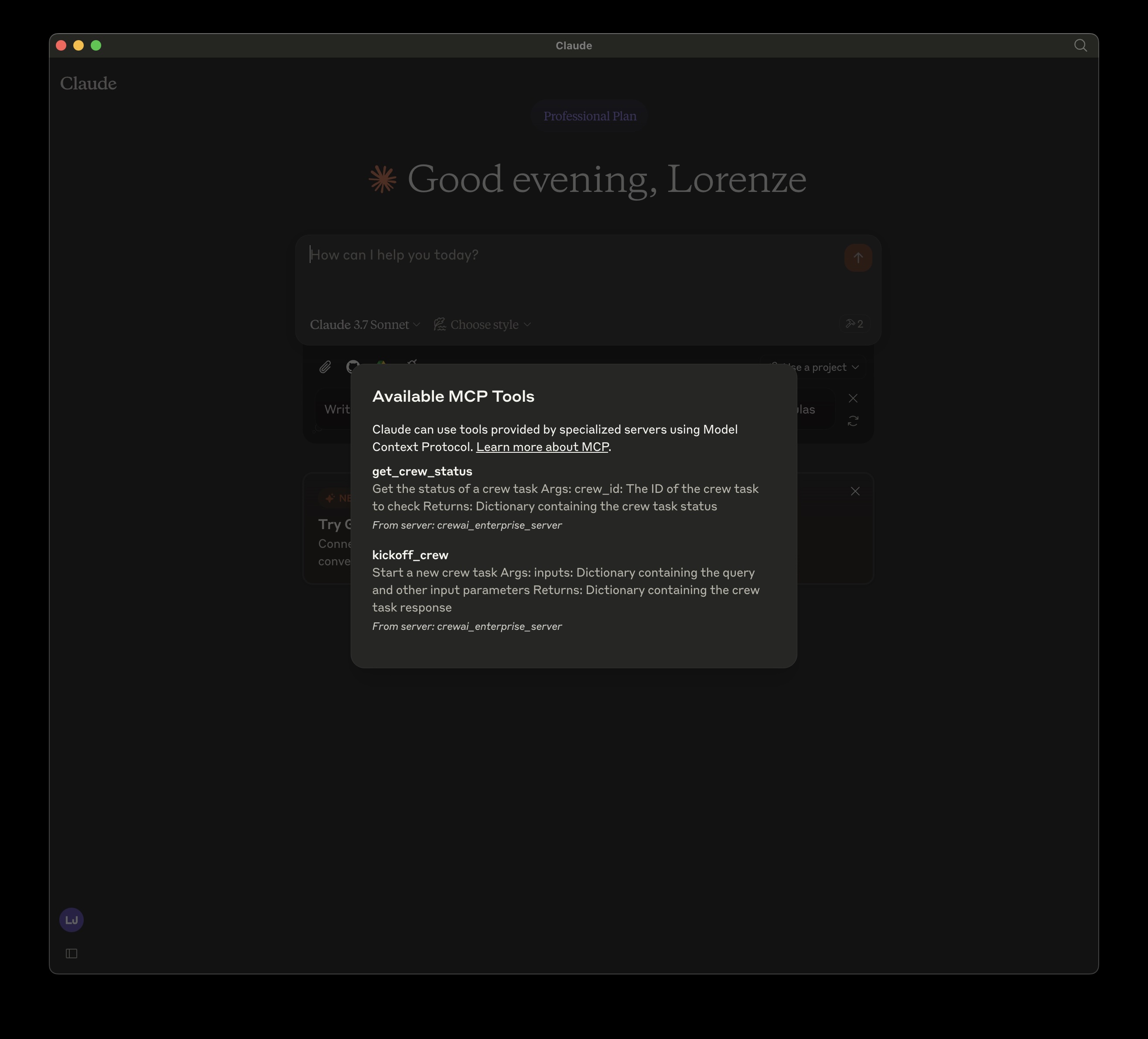

The CrewAI Enterprise MCP Server is a Model Context Protocol (MCP) implementation designed to manage and monitor deployed CrewAI workflows. It provides functionalities to kick off crew deployments and inspect their status, ensuring efficient workflow management. This server integrates with tools like Claude Desktop for seamless operation and monitoring.

GIMP-MCP is a server implementation that connects the GNU Image Manipulation Program (GIMP) with AI models using the Model Context Protocol (MCP). It allows users to perform advanced image editing tasks such as background removal, inpainting, and style transfer directly within GIMP. This integration enhances productivity by automating repetitive tasks and enabling context-aware image manipulations.

The PowerShell Execution MCP Server accepts PowerShell scripts as strings, executes them securely in an MCP server environment, and returns the results in real time. This lets AI assistants compose and run PowerShell commands. It supports integration with tools like GitHub Copilot in VS Code Insiders, making it useful for both developers and AI applications.

The Nostr MCP Server is a Model Context Protocol (MCP) implementation designed to integrate Claude, a large language model, with the Nostr network. It provides tools for fetching user profiles, text notes, long-form content, and zaps (payments) from Nostr. The server supports both hex and npub formats, handles NIP-19 encoding/decoding, and includes advanced features like zap receipt validation and smart caching. It is specifically tailored for use with Claude for Desktop, enabling seamless interaction with Nostr data directly within Claude.
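To make the hex and npub formats concrete, here is a minimal, standard-library sketch of the NIP-19 conversion for public keys, which encodes the 32-byte key with bech32 under the `npub` prefix. It is an illustration written for this listing, not code from the server; the function names `hex_to_npub` and `npub_to_hex` are hypothetical.

```python
CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
GENERATOR = [0x3B6A57B2, 0x26508E6D, 0x1EA119FA, 0x3D4233DD, 0x2A1462B3]


def _polymod(values):
    """Compute the bech32 checksum polynomial over 5-bit values."""
    chk = 1
    for v in values:
        top = chk >> 25
        chk = ((chk & 0x1FFFFFF) << 5) ^ v
        for i in range(5):
            if (top >> i) & 1:
                chk ^= GENERATOR[i]
    return chk


def _hrp_expand(hrp):
    return [ord(c) >> 5 for c in hrp] + [0] + [ord(c) & 31 for c in hrp]


def _convertbits(data, frombits, tobits, pad):
    """Regroup a sequence of frombits-wide values into tobits-wide values."""
    acc, bits, out = 0, 0, []
    maxv = (1 << tobits) - 1
    for value in data:
        acc = (acc << frombits) | value
        bits += frombits
        while bits >= tobits:
            bits -= tobits
            out.append((acc >> bits) & maxv)
    if pad:
        if bits:
            out.append((acc << (tobits - bits)) & maxv)
    elif bits >= frombits or ((acc << (tobits - bits)) & maxv):
        raise ValueError("invalid padding")
    return out


def hex_to_npub(hex_key: str) -> str:
    raw = bytes.fromhex(hex_key)
    if len(raw) != 32:
        raise ValueError("public key must be 32 bytes")
    data = _convertbits(raw, 8, 5, True)
    polymod = _polymod(_hrp_expand("npub") + data + [0] * 6) ^ 1
    checksum = [(polymod >> 5 * (5 - i)) & 31 for i in range(6)]
    return "npub1" + "".join(CHARSET[d] for d in data + checksum)


def npub_to_hex(npub: str) -> str:
    hrp, _, rest = npub.lower().rpartition("1")
    if hrp != "npub" or len(rest) < 6 or any(c not in CHARSET for c in rest):
        raise ValueError("not a valid npub string")
    values = [CHARSET.index(c) for c in rest]
    if _polymod(_hrp_expand(hrp) + values) != 1:
        raise ValueError("bad checksum")
    return bytes(_convertbits(values[:-6], 5, 8, False)).hex()
```

Accepting either format then amounts to checking for the `npub1` prefix and normalizing to hex before querying relays.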

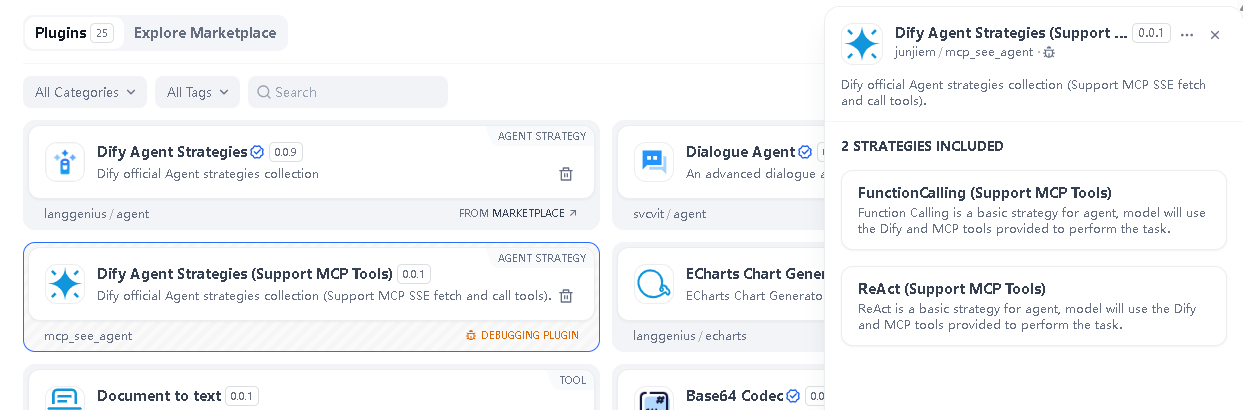

This plugin enables Dify 1.0 to support MCP SSE tools and agent strategies, allowing for the discovery and invocation of various tools. It includes configurations for multiple MCP services and provides examples of managed MCP servers. The plugin can be installed via GitHub and is designed to enhance the functionality of the Dify platform.

The Home Assistant MCP Server facilitates seamless integration between AI assistants, such as Claude, and Home Assistant. It allows AI tools to query device states, control entities, troubleshoot automations, and provide summaries of smart home setups. The server supports guided conversations for common tasks, smart search for entities, and token-efficient JSON responses. It is designed for easy setup with Docker and Python, making it a powerful tool for enhancing AI-driven smart home management.

This MCP server facilitates integration with LinkedIn's Community Management API, allowing users to retrieve logged-in user information and create posts. It supports local or remote hosting using HTTP+SSE transport and implements the draft Third-Party Authorization Flow for LinkedIn's OAuth authorization. The server is designed for developers looking to extend LinkedIn's capabilities within the MCP framework.
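For readers unfamiliar with the HTTP+SSE transport, the sketch below shows how a JSON-RPC message can be framed as a Server-Sent Events frame and parsed back. It illustrates the generic SSE wire format only and is not taken from this server; the helper names `format_sse` and `parse_sse` and the `create_post` tool name in the payload are assumptions made for the example.

```python
import json


def format_sse(event: str, data: str) -> str:
    """Frame a payload as one SSE event (one 'data:' line per payload line)."""
    lines = [f"event: {event}"]
    lines += [f"data: {line}" for line in data.split("\n")]
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


def parse_sse(frame: str) -> tuple[str, str]:
    """Parse a single SSE frame into (event name, data)."""
    event, data_lines = "message", []  # "message" is the SSE default event
    for line in frame.splitlines():
        if not line or line.startswith(":"):
            continue  # skip terminators and comment lines
        name, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space is not part of the value
        if name == "event":
            event = value
        elif name == "data":
            data_lines.append(value)
    return event, "\n".join(data_lines)


# Illustrative JSON-RPC request; the tool name is hypothetical.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "create_post", "arguments": {"text": "Hello"}},
}
frame = format_sse("message", json.dumps(request))
event, data = parse_sse(frame)
```

Here `event` and `data` recover the event name and the original JSON text, which the receiver would then decode with `json.loads`.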

This MCP server integrates Claude with Vercel's REST API, providing tools for deployment monitoring and creation, environment variable retrieval, project management, and team operations, so that Vercel deployments can be managed programmatically. The server is built with TypeScript and supports Docker for easy deployment.

This MCP server facilitates interaction with LinkedIn's Community Management API, allowing users to retrieve logged-in user information and create posts. It supports local or remote hosting using HTTP+SSE transport and implements the draft Third-Party Authorization Flow from the MCP specification for OAuth delegation. It includes tools for user info retrieval and post creation, and can be debugged using the MCP Inspector.

The Enterprise MCP Server, or mcp-ectors, is designed to bridge the gap between large language models (LLMs) and various tools, resources, and workflow prompts. Built with Rust and WebAssembly (Wasm), it leverages an actor-based architecture for high scalability, security, and performance. It supports multiple MCP routers in a containerized environment, enabling dynamic and efficient resource management. Ideal for enterprise applications, it simplifies the integration of LLMs with external capabilities, making it a powerful tool for agentic AI workflows.